Hierarchical temporal memory

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta.

Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data.

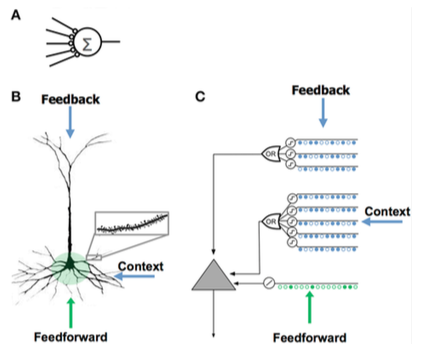

The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian (in particular, human) brain.

When applied to computers, HTM is well suited for prediction,[1] anomaly detection,[2] classification, and ultimately sensorimotor applications.

Each HTM region learns by identifying and memorizing spatial patterns—combinations of input bits that often occur at the same time.

Jeff Hawkins postulates that brains evolved this type of hierarchy to match, predict, and affect the organisation of the external world.

HTM relies on sparse distributed representations (SDRs), data structures whose elements are binary (1 or 0) and in which the number of 1 bits is small compared to the number of 0 bits, to represent brain activity. It also uses a more biologically realistic neuron model (often referred to as a cell in the context of HTM).
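As a rough illustration of the data structure described above, an SDR can be stored as the set of indices of its 1 bits. The sketch below is not Numenta's implementation; the helper names (`random_sdr`, `sparsity`, `overlap`) and the sizes (2048 bits, 40 active) are assumptions chosen only for the example.

```python
import random


def random_sdr(size, active_bits, rng):
    """Return an SDR as a frozenset holding the indices of its 1 bits."""
    return frozenset(rng.sample(range(size), active_bits))


def sparsity(sdr, size):
    """Fraction of bits that are 1."""
    return len(sdr) / size


def overlap(a, b):
    """Number of positions where both SDRs have a 1 bit."""
    return len(a & b)


# Illustrative sizes (assumed, not taken from the article).
SIZE, ACTIVE = 2048, 40
rng = random.Random(0)
a = random_sdr(SIZE, ACTIVE, rng)
b = random_sdr(SIZE, ACTIVE, rng)

print(sparsity(a, SIZE))   # 40 / 2048, roughly 2%
print(overlap(a, a))       # an SDR overlaps itself in all 40 active bits
print(overlap(a, b))       # two random SDRs usually share very few bits
```

Storing only the active indices keeps the representation compact when the number of 1 bits is small, and makes the overlap between two SDRs a simple set intersection.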

An HTM layer creates a sparse distributed representation from its input, so that a fixed percentage of minicolumns are active at any one time.
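One simple way to obtain a fixed percentage of active minicolumns is a k-winners-take-all selection over per-column match scores. The sketch below assumes such scores are already available and omits learning entirely; the function name `active_minicolumns` and the default 2% sparsity are illustrative assumptions, not the HTM spatial pooling algorithm itself.

```python
def active_minicolumns(overlaps, sparsity=0.02):
    """Pick the fixed fraction of minicolumns with the highest scores.

    overlaps: one match score per minicolumn (assumed precomputed).
    sparsity: fraction of minicolumns allowed to be active (assumed 2%).
    Ties are broken in favour of the lower column index.
    """
    n_active = max(1, round(len(overlaps) * sparsity))
    ranked = sorted(range(len(overlaps)), key=lambda i: (-overlaps[i], i))
    return sorted(ranked[:n_active])


# 100 minicolumns whose score equals their index: the top 2% win.
scores = list(range(100))
print(active_minicolumns(scores))  # [98, 99]
```

Because the number of winners depends only on the number of minicolumns, the output has the same sparsity regardless of how dense or sparse the input is.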

Thus minicolumns are active over long periods of time, which leads to greater temporal stability seen by the parent layer.

Since predictions tend to change less frequently than the input patterns, this leads to increasing temporal stability of the output in higher hierarchy levels.

The following question was posed to Jeff Hawkins in September 2011 with regard to cortical learning algorithms: "How do you know if the changes you are making to the model are good or not?"[13]

HTM attempts to implement the functionality that is characteristic of a hierarchically related group of cortical regions in the neocortex.

Although it is primarily a functional model, several attempts have been made to relate the algorithms of the HTM with the structure of neuronal connections in the layers of neocortex.

A key property of both HTMs and the cortex is their ability to deal with noise and variation in the input. This is a result of using a "sparse distributed representation" in which only about 2% of the columns are active at any given time.

Differences between HTMs and neurons include:[16]

Integrating a memory component with neural networks has a long history, dating back to early research in distributed representations[17][18] and self-organizing maps.

Similar to sparse distributed memory (SDM), developed at NASA in the 1980s,[19] and the vector space models used in latent semantic analysis, HTM uses sparse distributed representations.

First, SDRs are tolerant of corruption and ambiguity because the meaning of the representation is shared (distributed) across a small percentage (sparse) of active bits.
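The tolerance to corruption described above can be illustrated with a small experiment: move some of an SDR's active bits to random positions and check that it still overlaps strongly with the original. This is a minimal sketch under assumed parameters (2048 bits, 40 active, a match threshold of 20); the helpers `corrupt` and `matches` are hypothetical names, not part of any HTM library.

```python
import random


def corrupt(sdr, size, n_flip, rng):
    """Move n_flip active bits of an SDR to random inactive positions."""
    dropped = set(rng.sample(sorted(sdr), n_flip))
    inactive = [i for i in range(size) if i not in sdr]
    added = set(rng.sample(inactive, n_flip))
    return frozenset((sdr - dropped) | added)


def matches(a, b, threshold):
    """Treat two SDRs as the same pattern if enough active bits coincide."""
    return len(a & b) >= threshold


# Illustrative parameters (assumed, not taken from the article).
SIZE, ACTIVE, THRESHOLD = 2048, 40, 20
rng = random.Random(1)

original = frozenset(rng.sample(range(SIZE), ACTIVE))
noisy = corrupt(original, SIZE, 10, rng)      # 25% of active bits moved
unrelated = frozenset(rng.sample(range(SIZE), ACTIVE))

print(len(original & noisy))                  # 30 of 40 bits still agree
print(matches(original, noisy, THRESHOLD))    # True
print(matches(original, unrelated, THRESHOLD))
```

Since no single bit carries the meaning on its own, losing a quarter of the active bits still leaves an overlap well above what two unrelated sparse patterns would be expected to share by chance.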

Likened to a Bayesian network, an HTM comprises a collection of nodes that are arranged in a tree-shaped hierarchy.

However, the analogy to Bayesian networks is limited, because HTMs can be self-trained (such that each node has an unambiguous family relationship), cope with time-sensitive data, and provide mechanisms for covert attention.

A theory of hierarchical cortical computation based on Bayesian belief propagation was proposed earlier by Tai Sing Lee and David Mumford.[24]

While HTM is mostly consistent with these ideas, it adds details about handling invariant representations in the visual cortex.

The tree-shaped hierarchy commonly used in HTMs resembles the usual topology of traditional neural networks.

For example, feedback from higher levels and motor control is not attempted because it is not yet understood how to incorporate them, and binary rather than variable synapses are used because they were determined to be sufficient for current HTM capabilities.

LAMINART and similar neural networks researched by Stephen Grossberg attempt to model both the infrastructure of the cortex and the behavior of neurons in a temporal framework to explain neurophysiological and psychophysical data.[26]

HTM is also related to work by Tomaso Poggio, including an approach for modeling the ventral stream of the visual cortex known as HMAX.