History of artificial intelligence

The recent AI boom, initiated by the development of the transformer architecture, led to the rapid scaling and public release of large language models (LLMs) such as ChatGPT.

In Faust: The Second Part of the Tragedy by Johann Wolfgang von Goethe, an alchemically fabricated homunculus, destined to live forever in the flask in which he was made, endeavors to be born into a full human body.

Realistic humanoid automata were built by craftsmen from many civilizations, including Yan Shi,[19] Hero of Alexandria,[20] Al-Jazari,[21] Haroun al-Rashid,[22] Jacques de Vaucanson,[23][24] Leonardo Torres y Quevedo,[25] Pierre Jaquet-Droz and Wolfgang von Kempelen.

The faithful believed that craftsmen had imbued these figures with very real minds, capable of wisdom and emotion; Hermes Trismegistus wrote that "by discovering the true nature of the gods, man has been able to reproduce it".

Calculating machines were designed or built in antiquity and throughout history by many people, including Gottfried Leibniz,[38][49] Joseph Marie Jacquard,[50] Charles Babbage,[50][51] Percy Ludgate,[52] Leonardo Torres y Quevedo,[53] Vannevar Bush,[54] and others.

The first modern computers were the massive machines of the Second World War (such as Konrad Zuse's Z3, Alan Turing's Heath Robinson and Colossus, Atanasoff and Berry's ABC, and ENIAC at the University of Pennsylvania).

The participants in the Dartmouth Workshop included Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Allen Newell and Herbert A. Simon, all of whom would create important programs during the first decades of AI research.

George Miller wrote: "I left the symposium with a conviction, more intuitive than rational, that experimental psychology, theoretical linguistics, and the computer simulation of cognitive processes were all pieces from a larger whole."

The cognitive approach allowed researchers to consider "mental objects" like thoughts, plans, goals, facts or memories, often analyzed using high-level symbols in functional networks.

The programs developed in the years after the Dartmouth Workshop were, to most people, simply "astonishing":[i] computers were solving algebra word problems, proving theorems in geometry and learning to speak English.

The micro-world paradigm led to innovative work in machine vision by Gerald Sussman, Adolfo Guzman, David Waltz (who invented "constraint propagation"), and especially Patrick Winston.

A group at Stanford Research Institute led by Charles A. Rosen and Alfred E. (Ted) Brain built two neural network machines named MINOS I (1960) and II (1963), mainly funded by the U.S. Army Signal Corps.

General public interest in the field continued to grow,[143] the number of researchers increased dramatically,[143] and new ideas were explored in logic programming, commonsense reasoning and many other areas.

Hubert Dreyfus ridiculed the broken promises of the 1960s and critiqued the assumptions of AI, arguing that human reasoning actually involved very little "symbol processing" and a great deal of embodied, instinctive, unconscious "know how".

MIT chose instead to focus on writing programs that solved a given task without using high-level abstract definitions or general theories of cognition, and it measured performance by iterative testing rather than by arguments from first principles.

"AI researchers were beginning to suspect—reluctantly, for it violated the scientific canon of parsimony—that intelligence might very well be based on the ability to use large amounts of diverse knowledge in different ways,"[197] writes Pamela McCorduck.

Although symbolic knowledge representation and logical reasoning produced useful applications in the 1980s and received massive amounts of funding, they were still unable to solve problems in perception, robotics, learning and common sense.

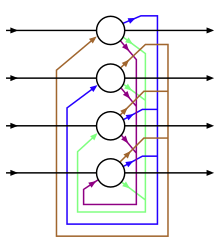

Neural networks, along with several other similar models, received widespread attention after the 1986 publication of Parallel Distributed Processing, a two-volume collection of papers edited by David Rumelhart and psychologist James McClelland.

Judea Pearl's Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference, an influential 1988 book,[213] brought probability and decision theory into AI.

The term "AI winter" was coined by researchers who had survived the funding cuts of 1974 and who had become concerned that enthusiasm for expert systems had spiraled out of control and that disappointment would certainly follow.

The shared mathematical language allowed both a higher level of collaboration with more established and successful fields and the achievement of results which were measurable and provable; AI had become a more rigorous "scientific" discipline.

In 2012, AlexNet, a deep learning model[am] developed by Alex Krizhevsky, won the ImageNet Large Scale Visual Recognition Challenge with significantly fewer errors than the runner-up.

In 2016, the election of Donald Trump and the controversy over the COMPAS system illuminated several problems with the current technological infrastructure, including misinformation, social media algorithms designed to maximize engagement, the misuse of personal data and the trustworthiness of predictive models.

In 2023, Microsoft Research tested GPT-4 on a large variety of tasks and concluded that "it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system".

The free web application demonstrated the ability to clone character voices using neural networks with minimal training data, requiring as little as 15 seconds of audio to reproduce a voice, a capability later corroborated by OpenAI in 2024.

OpenAI's recent statement regarding artificial general intelligence states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models."

The chatbot's ability to engage in human-like conversations, write code, and generate creative content captured public imagination and led to rapid adoption across various sectors including education, business, and research.

In March 2023, an open letter calling for a pause in advanced AI development, citing "profound risks to society and humanity", was signed by more than 20,000 people, including computer scientist Yoshua Bengio, Elon Musk, and Apple co-founder Steve Wozniak.

Similarly, Jeffrey Gundlach, CEO of DoubleLine Capital, explicitly compared the AI boom to the dot-com bubble of the late 1990s, suggesting that investor enthusiasm might be outpacing realistic near-term capabilities and revenue potential.

OpenAI's announcement stated: "Self-hosting ChatGPT Gov enables agencies to more easily manage their own security, privacy, and compliance requirements, such as stringent cybersecurity frameworks (IL5, CJIS, ITAR, FedRAMP High)."