History of entropy

In the history of physics, the concept of entropy developed in response to the observation that a certain amount of functional energy released from combustion reactions is always lost to dissipation or friction and is thus not transformed into useful work.

Over the next two centuries, physicists investigated this puzzle of lost energy; the result was the concept of entropy.

Over the next three decades, Lazare Carnot's theorem was taken as a statement that in any machine the accelerations and shocks of the moving parts all represent losses of moment of activity, i.e. of the useful work done.

This loss of moment of activity was the first-ever rudimentary statement of the second law of thermodynamics and the concept of 'transformation-energy' or entropy, i.e. energy lost to dissipation and friction.

In 1824, Lazare Carnot's son Sadi Carnot, having graduated from the École Polytechnique training school for engineers but now living on half-pay with his brother Hippolyte in a small apartment in Paris, wrote Reflections on the Motive Power of Fire.

Rudolf Clausius then discusses the three categories into which heat Q may be divided. Building on this logic, and following a mathematical presentation of the first fundamental theorem, he presented the first-ever mathematical formulation of entropy, although at this point in the development of his theories he called it "equivalence-value". The name perhaps refers to the concept of the mechanical equivalent of heat, which was being developed at the time, rather than to entropy, a term which was to come into use later.

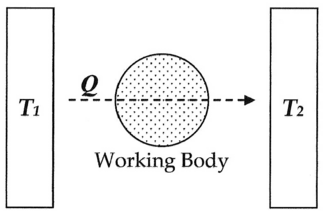

wherein T is a function of the temperature, independent of the nature of the process by which the transformation is effected.

In modern terminology, that is, the terminology introduced by Clausius himself in 1865, we think of this equivalence-value as "entropy", symbolized by S. Thus, using the above description, we can calculate the entropy change ΔS for the passage of the quantity of heat Q from the temperature T1, through the "working body" of fluid, which was typically a body of steam, to the temperature T2. If we make the assignment:

$$S = \frac{Q}{T}$$

then the entropy change, or "equivalence-value", for this transformation is:

$$\Delta S = S_{\text{final}} - S_{\text{initial}}$$

which equals:

$$\Delta S = \frac{Q}{T_2} - \frac{Q}{T_1}$$

and by factoring out Q, we have the following form, as was derived by Clausius:

$$\Delta S = Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$$

In 1856, Clausius stated what he called the "second fundamental theorem in the mechanical theory of heat" in the following form:

$$\oint \frac{\delta Q}{T} = -N$$

where N is the "equivalence-value" of all uncompensated transformations involved in a cyclical process.
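The equivalence-value ΔS = Q(1/T2 - 1/T1) and the 1856 cyclical statement can be checked with a little arithmetic. The following Python sketch assumes SI units (joules, kelvin) and the sign convention that heat absorbed by the working body is positive. The function names are hypothetical, and replacing the integral with a discrete sum over Q_i/T_i is an assumption that holds for a cycle exchanging heat with only a few fixed-temperature reservoirs.

```python
import math


def equivalence_value(q: float, t1: float, t2: float) -> float:
    """Clausius's equivalence-value Q * (1/T2 - 1/T1), in J/K, for heat q
    (joules) passing from absolute temperature t1 to t2 (kelvin)."""
    if t1 <= 0 or t2 <= 0:
        raise ValueError("absolute temperatures must be positive")
    return q * (1.0 / t2 - 1.0 / t1)


def uncompensated_n(exchanges: list[tuple[float, float]]) -> float:
    """Hypothetical discrete form of the 1856 statement, N = -sum(Q_i / T_i).

    Each (q, t) pair is heat q absorbed by the working body (positive) or
    rejected by it (negative) at a reservoir temperature t in kelvin.
    """
    return sum(-q / t for q, t in exchanges)


if __name__ == "__main__":
    # 1000 J passing from a 500 K body to a 250 K body: a positive value.
    print(equivalence_value(1000.0, 500.0, 250.0))
    # Reversible Carnot cycle between the same temperatures: N is zero.
    print(uncompensated_n([(1000.0, 500.0), (-500.0, 250.0)]))
    # A less efficient engine that rejects 600 J instead: N is positive.
    print(uncompensated_n([(1000.0, 500.0), (-600.0, 250.0)]))
```

Heat passing from a hotter to a colder body gives a positive equivalence-value. A reversible cycle leaves N at zero, while an engine that rejects more heat than the reversible one at the same temperatures gives N > 0, in line with Clausius's inequality.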

In 1865, Clausius gave irreversible heat loss, or what he had previously been calling "equivalence-value", a name:[5][6][7] "I propose that S be taken from the Greek words, 'en-tropie' [intrinsic direction]."

In What Is Life? (1944), Erwin Schrödinger postulated a local decrease of entropy for living systems when (1/D) represents the number of states that are prevented from randomly distributing, as occurs in replication of the genetic code.
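The role of 1/D can be made explicit with the relations Erwin Schrödinger uses in What Is Life? (1944), where k is Boltzmann's constant and D is a quantitative measure of the atomistic disorder of the system, so that 1/D serves as a measure of order. A sketch in that notation, taking the logarithms as natural:

$$S = k \ln D, \qquad -S = k \ln \frac{1}{D}$$

A larger 1/D, that is, more order, therefore corresponds to a larger "negative entropy" −S.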

It has been proposed as an explanation of the localized decrease of entropy[13] in the focusing of radiant energy by parabolic reflectors and during dark current in diodes, which would otherwise appear to violate statistical mechanics.

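In 1948, while working at Bell Telephone Laboratories, electrical engineer Claude Shannon set out to mathematically quantify the statistical nature of "lost information" in phone-line signals. In "A Mathematical Theory of Communication" he arrived at a measure of the uncertainty of a set of outcomes with probabilities p_i:

$$H = -K \sum_{i=1}^{n} p_i \log p_i$$

where K is a positive constant that amounts to a choice of unit of measure.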

Then, as an example of how this expression applies in a number of different fields, he references Richard C. Tolman's 1938 Principles of Statistical Mechanics, stating that "the form of H will be recognized as that of entropy as defined in certain formulations of statistical mechanics where p_i is the probability of a system being in cell i of its phase space ... H is then, for example, the H in Boltzmann's famous H theorem." In the decades since this statement was made, people have often conflated the two concepts or even stated that they are exactly the same.
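The formal resemblance Shannon pointed to can be seen numerically. The Python sketch below assumes Shannon's measure H = -K Σ p_i log p_i and the statistical-mechanical (Gibbs) form S = -k_B Σ p_i ln p_i; the function names are hypothetical. It only shows that the two expressions share one functional form and differ by the constant and the base of the logarithm.

```python
import math

# Boltzmann constant in J/K (exact in the SI since 2019).
BOLTZMANN_K = 1.380649e-23


def shannon_entropy(probs: list[float], base: float = 2.0) -> float:
    """H = -sum(p_i * log(p_i)) with the logarithm taken in `base`.

    Choosing the base plays the role of Shannon's constant K (base 2 gives bits).
    Terms with p_i == 0 contribute nothing, the limit of p log p as p -> 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def gibbs_entropy(probs: list[float]) -> float:
    """S = -k_B * sum(p_i * ln(p_i)): the same form with K = k_B and natural logs."""
    return BOLTZMANN_K * shannon_entropy(probs, base=math.e)


if __name__ == "__main__":
    print(shannon_entropy([0.5, 0.5]))   # a fair coin: about 1 bit
    print(shannon_entropy([0.25] * 4))   # four equally likely cells: about 2 bits
    # For W equally likely cells the statistical form reduces to k_B ln W.
    w = 4
    print(gibbs_entropy([1.0 / w] * w), BOLTZMANN_K * math.log(w))
```

With W equally likely cells, both expressions reduce to a constant times ln W, which is Boltzmann's familiar S = k ln W in the physical case.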

The term has also been borrowed outside physics. One example is the concept of corporate entropy, put forward somewhat humorously by authors Tom DeMarco and Timothy Lister in their 1987 classic Peopleware, a book on growing and managing productive teams and successful software projects.