Intel 8080

Although earlier microprocessors were commonly used in mass-produced devices such as calculators, cash registers, computer terminals, industrial robots,[4] and other applications, the 8080 saw greater success in a wider set of applications, and is largely credited with starting the microcomputer industry.

Several factors contributed to its popularity: its 40-pin package made it easier to interface than the 18-pin 8008, and also made its data bus more efficient; its NMOS implementation gave it faster transistors than those of the P-type metal–oxide–semiconductor logic (PMOS) 8008, while also simplifying interfacing by making it TTL-compatible; a wider variety of support chips was available; its instruction set was enhanced over the 8008;[6] and its full 16-bit address bus (versus the 14-bit one of the 8008) enabled it to access 64 KB of memory, four times the 8008's range of 16 KB.
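The address-range comparison follows directly from the bus widths. A minimal Python sketch of the arithmetic (the function name `addressable_bytes` is made up for illustration):

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses reachable with the given bus width."""
    return 2 ** address_bits


i8080_range = addressable_bytes(16)  # 16-bit address bus
i8008_range = addressable_bytes(14)  # 14-bit address bus

print(i8080_range // 1024, "KB")           # 8080 address range in KB
print(i8008_range // 1024, "KB")           # 8008 address range in KB
print(i8080_range // i8008_range, "times")  # ratio between the two
```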

It was used in the Altair 8800 and subsequent S-100 bus personal computers until it was replaced by the Z80 in this role, and was the original target CPU for CP/M operating systems developed by Gary Kildall.

The 8080 is implemented in N-type metal–oxide–semiconductor logic (NMOS) using non-saturated enhancement-mode transistors as loads,[9][10] thus demanding +12 V and −5 V supplies in addition to the main transistor–transistor logic (TTL) compatible +5 V.

Microprocessor customers were reluctant to adopt the 8008 because of limitations such as the single addressing mode, low clock speed, low pin count, and small on-chip stack, which restricted the scale and complexity of software.

There were several proposed designs for the 8080, ranging from simply adding stack instructions to the 8008 to a complete departure from all previous Intel architectures.

After rumors about the "CPU on a chip" came out, Intel started to see interest in the microprocessor from all sorts of customers.

At the same time, Federico Faggin – who led the design of the 4004 and became the primary architect of the 8080 – was giving technical seminars on both of the aforementioned microprocessors and visiting customers.

Faggin later proposed the chip to Intel's management and pushed for its implementation in the spring of 1972, as development of the 8008 was wrapping up.

It was decided early in development that the 8080 would not be binary-compatible with the 8008. Instead, the designers opted for source compatibility by way of a transpiler, so that new software would not be subject to the same restrictions as the 8008.

For the same reason, as well as to expand the capabilities of stack-based routines and interrupts, the stack was moved to external memory.

It had a flaw, in that driving with standard TTL devices increased the ground voltage because high current flowed into the narrow line.

The processor maintains internal flag bits (a status register), which indicate the results of arithmetic and logical instructions.
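As a rough illustration of how such flag bits relate to a result, the Python sketch below models an 8-bit addition and derives the five 8080 condition flags (sign, zero, auxiliary carry, parity, carry). The function name and the dictionary layout are assumptions for this example, not part of any real toolchain, and inputs are assumed to be in the range 0 to 255.

```python
def add8_with_flags(a, b):
    """Add two 8-bit values and derive 8080-style condition flags."""
    full = a + b
    result = full & 0xFF
    flags = {
        "S": bool(result & 0x80),                # sign: bit 7 of the result
        "Z": result == 0,                        # zero: result is 0
        "AC": ((a & 0x0F) + (b & 0x0F)) > 0x0F,  # auxiliary carry out of bit 3
        "P": bin(result).count("1") % 2 == 0,    # parity: even number of 1 bits
        "CY": full > 0xFF,                       # carry out of bit 7
    }
    return result, flags


result, flags = add8_with_flags(0xFF, 0x01)
print(hex(result), flags)
```

A conditional jump or call then only needs to test one of these bits rather than recompute anything about the result.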

As with many other 8-bit processors, all instructions are encoded in one byte (including register numbers, but excluding immediate data), for simplicity.
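To make the one-byte encoding concrete, the following Python sketch decodes the register-to-register `MOV` group, whose opcodes have the bit pattern `01 DDD SSS` (destination and source register numbers packed into the opcode byte). The helper name `decode_mov` is hypothetical; the register numbering is the standard 8080 one.

```python
# Register numbers 0..7; "M" stands for the memory byte addressed by HL.
REGISTERS = ["B", "C", "D", "E", "H", "L", "M", "A"]


def decode_mov(opcode):
    """Decode a one-byte MOV opcode of the form 01 DDD SSS."""
    if opcode & 0xC0 != 0x40:
        raise ValueError("not in the MOV opcode group")
    if opcode == 0x76:
        return "HLT"  # the slot that would be MOV M,M is used for HLT
    dst = REGISTERS[(opcode >> 3) & 0x07]
    src = REGISTERS[opcode & 0x07]
    return f"MOV {dst},{src}"


print(decode_mov(0x78))
print(decode_mov(0x77))
```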

These are intended to be supplied by external hardware in order to invoke a corresponding interrupt service routine, but are also often employed as fast system calls.
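Assuming the instructions referred to here are the 8080's one-byte restart (`RST n`) calls, their encoding can be sketched as follows: the opcode has the form `11 NNN 111`, and the call target is the fixed address 8 × n. The function names are illustrative only.

```python
def rst_opcode(n):
    """Opcode byte for RST n (assumed form: 11 NNN 111), with n in 0..7."""
    if not 0 <= n <= 7:
        raise ValueError("RST number must be in 0..7")
    return 0xC7 | (n << 3)


def rst_target(opcode):
    """Fixed call address for an RST opcode, i.e. 8 * n."""
    if opcode & 0xC7 != 0xC7:
        raise ValueError("not an RST opcode")
    return opcode & 0x38


for n in range(8):
    op = rst_opcode(n)
    print(f"RST {n}: opcode {op:02X}h, target {rst_target(op):04X}h")
```

Because the whole call fits in one byte, interrupting hardware can place it on the data bus in a single cycle, and software can use it as a compact call to a fixed entry point.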

The instruction that executes slowest is XTHL, which is used for exchanging the register pair HL with the value stored at the address indicated by the stack pointer.
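The cost of XTHL is easier to see when its effect is written out: besides the opcode fetch, it needs two memory reads and two memory writes on the stack. The Python sketch below models only the data movement, not the timing; `xthl` and its argument layout are assumptions made for this example.

```python
def xthl(h, l, sp, memory):
    """Exchange HL with the 16-bit word at the top of the stack.

    The low byte lives at SP and the high byte at SP + 1.
    Returns the new (H, L) pair; memory is updated in place.
    """
    hi_addr = (sp + 1) & 0xFFFF
    new_l = memory[sp]        # read low byte from the stack
    new_h = memory[hi_addr]   # read high byte from the stack
    memory[sp] = l            # write old L to the stack
    memory[hi_addr] = h       # write old H to the stack
    return new_h, new_l


memory = bytearray(0x10000)
memory[0x1000] = 0x34
memory[0x1001] = 0x12
h, l = xthl(0xAB, 0xCD, 0x1000, memory)
print(hex(h), hex(l), hex(memory[0x1000]), hex(memory[0x1001]))
```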

Many CPU architectures instead use so-called memory-mapped I/O (MMIO), in which a common address space is used for both RAM and peripheral chips.

This removes the need for dedicated I/O instructions, although a drawback in such designs may be that special hardware must be used to insert wait states, as peripherals are often slower than memory.

Similar I/O-port schemes are used in the backward-compatible Zilog Z80 and Intel 8085, and the closely related x86 microprocessor families.
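The difference between the two schemes can be sketched with two toy bus models in Python. Everything here is hypothetical (class names, the `DEVICE_BASE` window, the single device), and the port space is modelled as 256 entries on the assumption of an 8-bit port number.

```python
class PortMappedBus:
    """Separate address spaces: memory and I/O ports do not overlap."""

    def __init__(self):
        self.memory = bytearray(0x10000)  # 64 KB memory space
        self.ports = bytearray(0x100)     # separate I/O port space

    def store(self, address, value):      # ordinary memory write
        self.memory[address & 0xFFFF] = value & 0xFF

    def out(self, port, value):           # models a dedicated output instruction
        self.ports[port & 0xFF] = value & 0xFF


class MemoryMappedBus:
    """One address space: a window of addresses is routed to a device."""

    DEVICE_BASE = 0xFF00                  # hypothetical device window

    def __init__(self):
        self.memory = bytearray(0x10000)
        self.device = bytearray(0x100)

    def store(self, address, value):      # the same instruction reaches RAM or device
        address &= 0xFFFF
        if address >= self.DEVICE_BASE:
            self.device[address - self.DEVICE_BASE] = value & 0xFF
        else:
            self.memory[address] = value & 0xFF
```

In the port-mapped model, address `0x10` in memory and port `0x10` are unrelated locations; in the memory-mapped model the device simply occupies part of the memory map, at the cost of the address decoding shown in `store`.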

For more advanced systems, at the beginning of each machine cycle, the processor places an eight-bit status word on the data bus.
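External logic typically latches this status word and decodes it to find out what kind of machine cycle is starting. The Python sketch below shows one way to decode it; the bit assignment (D0 to D7 as INTA, WO, STACK, HLTA, OUT, M1, INP, MEMR) follows Intel's 8080 documentation as best recalled and should be treated as an assumption to check against the data sheet. `decode_status` is a made-up helper name.

```python
# Assumed bit assignment, data bus lines D0..D7.
# WO is active low: it reads 1 when the cycle is NOT a write.
STATUS_BITS = ["INTA", "WO", "STACK", "HLTA", "OUT", "M1", "INP", "MEMR"]


def decode_status(word):
    """Return the set of status signals that are 1 in the status word."""
    return {name for bit, name in enumerate(STATUS_BITS) if word & (1 << bit)}


# Example: a word with MEMR, M1 and WO set, as for an instruction fetch.
print(sorted(decode_status(0xA2)))
```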

In simple systems that do not use interrupts, this pin is sometimes used as an additional single-bit output port (for instance, in the popular Radio-86RK computer made in the Soviet Union).

The following 8080/8085 assembler source code is for a subroutine named memcpy that copies a block of data bytes of a given size from one location to another.
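As a hedged illustration of the copy loop such a routine performs, here is a Python model rather than assembler. The register roles (BC as byte count, DE as source address, HL as destination address) and the function name `memcpy_8080` are assumptions for this sketch, not taken from the listing.

```python
def memcpy_8080(memory, bc, de, hl):
    """Model of a byte-by-byte block copy using 16-bit register pairs.

    bc: number of bytes to copy (assumed role)
    de: source address (assumed role)
    hl: destination address (assumed role)
    Returns the final (bc, de, hl) values.
    """
    while bc != 0:                 # nothing is copied when the count is zero
        memory[hl] = memory[de]    # load a byte from the source, store at target
        de = (de + 1) & 0xFFFF     # advance source pointer, wrapping at 64 KB
        hl = (hl + 1) & 0xFFFF     # advance destination pointer
        bc = (bc - 1) & 0xFFFF     # count down
    return bc, de, hl


memory = bytearray(0x10000)
memory[0x0100:0x0104] = b"8080"
print(memcpy_8080(memory, 4, 0x0100, 0x0200), bytes(memory[0x0200:0x0204]))
```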

Using the two additional pins (read and write signals), it is possible to assemble simple microprocessor devices very easily.

Only the separate I/O space, interrupts, and DMA need additional chips to decode the processor pin signals.

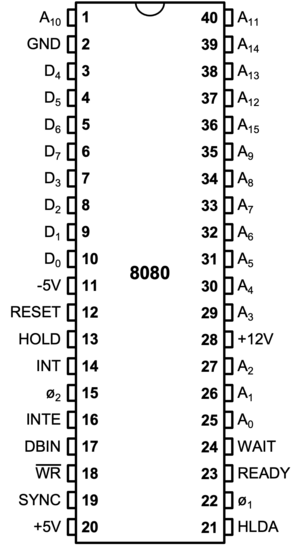

The chip's accompanying documentation describes the pins in a pin-out table.

A key factor in the success of the 8080 was the broad range of support chips available, providing serial communications, counter/timing, input/output, direct memory access, and programmable interrupt control, amongst other functions.

The 8080 integrated circuit uses non-saturated enhancement-load nMOS gates, demanding extra voltages (for the load-gate bias).

The 8080 was used in many early microcomputers, such as the MITS Altair 8800 Computer, the Processor Technology SOL-20 Terminal Computer, and the IMSAI 8080 Microcomputer, forming the basis for machines running the CP/M operating system. The later, almost fully compatible and more capable Zilog Z80 processor would capitalize on this, with the Z80 and CP/M becoming the dominant CPU and OS combination of the period c. 1976 to 1983, much as x86 and DOS did for the PC a decade later.

The Auto-COM instruments also include an entire automated film cutting, processing, washing, and drying sub-system.

This design, in turn, later spawned the x86 family of chips, which continue to be Intel's primary line of processors.