Intelligent agent

Intelligent agents are closely related to software agents, which are autonomous computer programs that carry out tasks on behalf of users.

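For instance, the influential textbook Artificial Intelligence: A Modern Approach (Russell & Norvig) describes an agent as anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators. Other researchers and definitions build upon this foundation.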

Padgham & Winikoff emphasize that intelligent agents should react to changes in their environment in a timely way, proactively pursue goals, and be flexible and robust (able to handle unexpected situations).

Some also suggest that ideal agents should be "rational" in the economic sense (making optimal choices) and capable of complex reasoning, such as holding beliefs, desires, and intentions (the belief-desire-intention, or BDI, model).
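The BDI idea can be illustrated with a deliberately simplified deliberation cycle: revise beliefs, commit to an intention, then act on a plan for it. This is a minimal sketch, not taken from any particular BDI framework; the `Desire` and `BDIAgent` classes, the robot-vacuum beliefs, and the "first unmet desire wins" policy are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Beliefs = Dict[str, bool]  # hypothetical: beliefs as named true/false facts


@dataclass
class Desire:
    name: str
    satisfied: Callable[[Beliefs], bool]  # does the agent believe this is achieved?
    plan: Callable[[Beliefs], str]        # returns the next action toward it


class BDIAgent:
    def __init__(self, desires: List[Desire]) -> None:
        self.beliefs: Beliefs = {}
        self.desires = desires            # ordered by priority
        self.intention: Optional[Desire] = None

    def step(self, percept: Beliefs) -> Optional[str]:
        # 1. Belief revision: fold the new percept into the agent's beliefs.
        self.beliefs.update(percept)
        # 2. Deliberation: stay committed to the current intention until it is
        #    believed satisfied, then adopt the highest-priority unmet desire.
        if self.intention is None or self.intention.satisfied(self.beliefs):
            self.intention = next(
                (d for d in self.desires if not d.satisfied(self.beliefs)), None
            )
        # 3. Means-ends reasoning: act on the plan of the committed intention.
        return None if self.intention is None else self.intention.plan(self.beliefs)


agent = BDIAgent([
    Desire("charged", lambda b: b.get("battery_full", False), lambda b: "dock"),
    Desire("clean", lambda b: b.get("floor_clean", False), lambda b: "vacuum"),
])
print(agent.step({"battery_full": False, "floor_clean": False}))  # dock
print(agent.step({"battery_full": True}))                          # vacuum
print(agent.step({"floor_clean": True}))                           # None
```

Real BDI systems also reconsider intentions that become impossible and select among libraries of plans; this sketch only keeps an intention until it is believed achieved.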

An agent is deemed more intelligent if it consistently selects actions that yield outcomes better aligned with its objective function.
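Under this view, "more intelligent" reduces to "scores better on the objective". The toy comparison below assumes a hypothetical one-dimensional task whose objective rewards ending near position 10; the task, `TARGET`, and both agents are invented for illustration.

```python
import random
from typing import Callable

ACTIONS = (-1, 0, +1)   # hypothetical toy task: step left, stay, or step right
TARGET = 10             # assumed target position the objective rewards


def objective(position: int) -> float:
    # Higher is better: zero at the target, more negative the farther away.
    return -abs(TARGET - position)


def greedy_agent(position: int) -> int:
    # Chooses the action whose immediate outcome scores best under the objective.
    return max(ACTIONS, key=lambda a: objective(position + a))


_rng = random.Random(0)  # seeded so runs are reproducible


def random_agent(position: int) -> int:
    # Ignores the objective entirely.
    return _rng.choice(ACTIONS)


def run(agent: Callable[[int], int], steps: int = 20) -> float:
    # Let the agent act for a fixed number of steps, then score the final state.
    position = 0
    for _ in range(steps):
        position += agent(position)
    return objective(position)


print(run(greedy_agent))  # 0, the best achievable score
print(run(random_agent))  # some score <= 0
```

By the criterion in the text, the greedy agent counts as the more intelligent of the two on this task because it reliably reaches a higher objective value.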

As a further extension, mimicry-driven systems can be framed as agents optimizing a "goal function" based on how closely the intelligent agent (IA) mimics the desired behavior.[9]

While symbolic AI systems often use an explicit goal function, the paradigm also applies to neural networks and evolutionary computing.
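A mimicry goal function can be as simple as "negative error between what the agent would do and what was demonstrated". The sketch below assumes a hypothetical expert demonstration and a one-parameter policy (action = gain × observation), and uses grid search as a crude stand-in for the optimizer; a neural network would typically adjust many parameters by gradient descent on the same kind of goal.

```python
from typing import List, Tuple

# Hypothetical expert demonstration: (observation, action) pairs.
DEMOS: List[Tuple[float, float]] = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


def mimicry_goal(gain: float) -> float:
    # Goal function: negative mean squared error between the actions a
    # one-parameter policy (action = gain * observation) would take and the
    # demonstrated actions. Perfect imitation scores 0; worse imitation is lower.
    errors = [(gain * obs - act) ** 2 for obs, act in DEMOS]
    return -sum(errors) / len(errors)


# Crude optimizer: try each candidate gain and keep the best-scoring one.
candidates = [0.5 * k for k in range(9)]  # 0.0, 0.5, ..., 4.0
best = max(candidates, key=mimicry_goal)
print(best)  # 2.0
```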

Reinforcement learning can generate intelligent agents that appear to act in ways intended to maximize a "reward function".
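As one concrete instance, the sketch below uses tabular Q-learning, a standard reinforcement-learning algorithm chosen here for illustration, on a hypothetical five-state corridor where only reaching state 4 pays a reward. The environment, hyperparameters (`alpha`, `gamma`, `epsilon`), and episode count are assumptions; the agent is never told "go right", yet the learned policy moves toward the reward.

```python
import random
from typing import Dict, Tuple

# Hypothetical environment: a corridor of states 0..4; reaching state 4 pays 1.0.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # step left or step right


def env_step(state: int, action: int) -> Tuple[int, float, bool]:
    nxt = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> Dict[Tuple[int, int], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)          # explore
            else:                                     # exploit, breaking ties randomly
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = env_step(state, action)
            # Temporal-difference target: reward plus discounted best future value.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```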

For example, a self-driving car's percepts might include camera images, lidar data, GPS coordinates, and speed readings at a specific instant.
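A percept can be represented as an immutable record of what the sensors report at one instant, and the agent's choice can then depend on its percept history (the sequence of such records). The field names, units, and the braking rule below are hypothetical, chosen only to mirror the sensors listed above.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Percept:
    """Sensor readings for a hypothetical self-driving car at one instant."""
    timestamp_s: float                                    # when the readings were taken
    camera_frame: bytes                                   # encoded camera image
    lidar_points: Tuple[Tuple[float, float, float], ...]  # (x, y, z) in metres, car frame
    gps: Tuple[float, float]                              # (latitude, longitude) in degrees
    speed_mps: float                                      # speed in metres per second


def agent_function(history: List[Percept]) -> str:
    # Hypothetical rule: brake if any lidar return is within 5 m horizontally.
    latest = history[-1]
    nearest = min((math.hypot(x, y) for x, y, _ in latest.lidar_points),
                  default=math.inf)
    return "brake" if nearest < 5.0 else "cruise"


p = Percept(0.0, b"", ((3.0, 3.0, 0.0),), (0.0, 0.0), 12.0)
print(agent_function([p]))  # brake (nearest return is about 4.24 m away)
```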

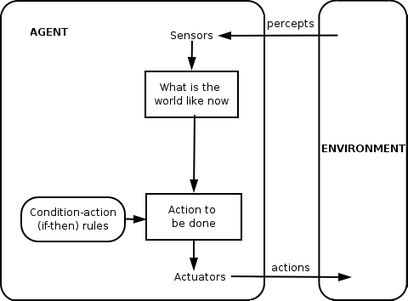

A model-based reflex agent should maintain some sort of internal model that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state.
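The classic two-square vacuum world shows why the internal model matters: when standing in one square, the agent cannot see the other, so it must remember what it last observed there. The world, percept format, and action names below are a minimal sketch in the spirit of the textbook example, not a specific implementation.

```python
from typing import Dict, Optional, Tuple


class ModelBasedVacuumAgent:
    """Model-based reflex agent for a hypothetical two-square vacuum world."""

    def __init__(self) -> None:
        # Internal model: last known status of each square (None = never observed).
        self.model: Dict[str, Optional[str]] = {"A": None, "B": None}

    def __call__(self, percept: Tuple[str, str]) -> str:
        location, status = percept
        self.model[location] = status          # update the model from the percept
        if status == "Dirty":
            self.model[location] = "Clean"     # predicted effect of sucking
            return "Suck"
        other = "B" if location == "A" else "A"
        if self.model[other] == "Clean":
            return "NoOp"                      # both squares believed clean
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Clean")))  # NoOp
```

A simple reflex agent with the same rules but no model would keep shuttling between clean squares, because nothing in its current percept tells it the other square is already clean.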

Search and planning are the subfields of artificial intelligence devoted to finding action sequences that achieve the agent's goals.
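Breadth-first search is one of the simplest search methods for finding such an action sequence: it explores states in order of distance from the start, so the first goal it reaches is reached by a shortest plan. The 3×3 grid with a wall at (1, 0) is a hypothetical example problem.

```python
from collections import deque
from typing import Callable, Dict, Hashable, Iterable, List, Optional, Tuple

State = Hashable


def breadth_first_plan(
    start: State,
    is_goal: Callable[[State], bool],
    successors: Callable[[State], Iterable[Tuple[str, State]]],
) -> Optional[List[str]]:
    """Return a shortest action sequence from start to a goal state, or None."""
    frontier = deque([start])
    # Maps each discovered state to (predecessor, action), or None for the start.
    parents: Dict[State, Optional[Tuple[State, str]]] = {start: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            plan = []
            while parents[state] is not None:   # walk back to the start
                state, action = parents[state]
                plan.append(action)
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in parents:
                parents[nxt] = (state, action)
                frontier.append(nxt)
    return None


def grid_moves(pos):
    # Hypothetical 3x3 grid with a wall at (1, 0).
    x, y = pos
    for name, dx, dy in (("right", 1, 0), ("left", -1, 0), ("up", 0, 1), ("down", 0, -1)):
        nxt = (x + dx, y + dy)
        if 0 <= nxt[0] < 3 and 0 <= nxt[1] < 3 and nxt != (1, 0):
            yield name, nxt


print(breadth_first_plan((0, 0), lambda s: s == (2, 0), grid_moves))
# ['up', 'right', 'right', 'down']
```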

A utility-based agent has to model and keep track of its environment, tasks that have motivated extensive research on perception, representation, reasoning, and learning.
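One common way a utility-based agent uses its environment model is to choose the action with the highest expected utility, weighting each predicted outcome by its probability. The route-choice model and utility numbers below are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical environment model: action -> [(probability, outcome), ...].
MODEL: Dict[str, List[Tuple[float, str]]] = {
    "highway": [(0.8, "on_time"), (0.2, "very_late")],
    "backroad": [(1.0, "slightly_late")],
}
# Hypothetical utility the agent assigns to each outcome.
UTILITY: Dict[str, float] = {"on_time": 10.0, "slightly_late": 6.0, "very_late": -5.0}


def expected_utility(action: str) -> float:
    # Probability-weighted average utility of the outcomes the model predicts.
    return sum(p * UTILITY[outcome] for p, outcome in MODEL[action])


def choose(actions: Iterable[str]) -> str:
    return max(actions, key=expected_utility)


print(choose(MODEL))  # highway (expected utility 7.0 vs 6.0)
```

Raising the penalty for `very_late` to -15 flips the choice to `backroad` (0.8 × 10 + 0.2 × (-15) = 5, which is less than 6), so the decision depends on how much the agent values each outcome, not only on whether the destination is reached.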

In hierarchical agent designs, sub-agents handle lower-level functions; together with the main agent, they form a complete system capable of executing complex tasks and achieving challenging goals.
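A minimal sketch of this division of labour: a main agent breaks a goal into steps and hands each step to the sub-agent responsible for that skill. The robot, the `navigate`/`grasp` skills, and the fixed recipe standing in for high-level reasoning are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical sub-agents, each responsible for one lower-level function.
def navigation_agent(target: str) -> str:
    return f"route planned to {target}"


def grasping_agent(target: str) -> str:
    return f"grasp executed on {target}"


class MainAgent:
    def __init__(self, sub_agents: Dict[str, Callable[[str], str]]) -> None:
        self.sub_agents = sub_agents

    def decompose(self, goal: str) -> List[Tuple[str, str]]:
        # Stand-in for high-level reasoning: a fixed recipe per known goal.
        recipes = {
            "fetch cup": [("navigate", "kitchen"), ("grasp", "cup"), ("navigate", "user")],
        }
        return recipes[goal]

    def run(self, goal: str) -> List[str]:
        # Delegate each (skill, argument) step to the sub-agent that owns the skill.
        return [self.sub_agents[skill](arg) for skill, arg in self.decompose(goal)]


robot = MainAgent({"navigate": navigation_agent, "grasp": grasping_agent})
print(robot.run("fetch cup"))
# ['route planned to kitchen', 'grasp executed on cup', 'route planned to user']
```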

The perception system gathers input from the environment via the sensors and feeds this information to a central controller, which then issues commands to the actuators.

Often, a multilayered hierarchy of controllers is necessary to balance the rapid responses required for low-level tasks with the more deliberative reasoning needed for high-level objectives.
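A two-layer version of such a hierarchy might run a cheap reactive layer on every tick and a slower deliberative layer only every few ticks. In the hypothetical sketch below, the goal names, the obstacle flag, and the trivial `deliberate` method (a stand-in for expensive planning) are assumptions.

```python
from typing import List, Optional


class LayeredController:
    """Hypothetical two-layer controller: a fast reactive layer under a slow planner."""

    def __init__(self, replan_every: int = 5) -> None:
        self.replan_every = replan_every
        self.tick = 0
        self.current_goal: Optional[str] = None

    def deliberate(self, goals: List[str]) -> Optional[str]:
        # Slow layer (stand-in for expensive reasoning): pick the first pending goal.
        return goals[0] if goals else None

    def step(self, obstacle_close: bool, goals: List[str]) -> str:
        if self.tick % self.replan_every == 0:
            self.current_goal = self.deliberate(goals)
        self.tick += 1
        # Fast layer runs every tick and can override the plan immediately.
        if obstacle_close:
            return "stop"
        return f"go to {self.current_goal}" if self.current_goal else "idle"


c = LayeredController(replan_every=3)
print(c.step(False, ["dock"]))     # go to dock
print(c.step(True, ["dock"]))      # stop
print(c.step(False, ["charger"]))  # go to dock (planner has not rerun yet)
print(c.step(False, ["charger"]))  # go to charger
```

The reactive layer responds to the obstacle on the same tick, while the change of goal only takes effect at the next planning cycle, which is the trade-off between speed and deliberation described above.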

AI agents possess several key attributes, including complex goal structures, natural language interfaces, the capacity to act independently of user supervision, and the integration of software tools or planning systems.[26]

A common application of AI agents is the automation of tasks, for example booking travel plans in response to a user's prompt.[29]

Frameworks for building AI agents include LangChain,[30] as well as tools such as CAMEL,[31][32] Microsoft AutoGen,[33] and OpenAI Swarm.[34]

Proponents argue that AI agents can increase personal and economic productivity,[35][28] foster greater innovation,[36] and liberate users from monotonous tasks.

Critics, however, point to a range of challenges,[38][27][39][40][41] including weakened human oversight,[38][35][27][28][39] algorithmic bias,[38][42] and compounding software errors,[27][29] as well as issues related to the explainability of agent decisions,[27][39] security vulnerabilities,[27][28][40][42] underemployment,[42] job displacement,[28][42] and the potential for user manipulation.[39][43]

AI agents may also complicate legal frameworks, foster hallucinations, hinder countermeasures against rogue agents, and suffer from a lack of standardized evaluation methods.