Reinforcement learning

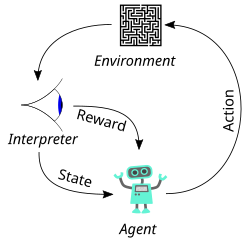

Instead, the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) with the goal of maximizing the cumulative reward (the feedback of which might be incomplete or delayed).

The environment is typically stated in the form of a Markov decision process (MDP), as many reinforcement learning algorithms use dynamic programming techniques.

[2] The main difference between classical dynamic programming methods and reinforcement learning algorithms is that the latter do not assume knowledge of an exact mathematical model of the Markov decision process, and they target large MDPs where exact methods become infeasible.

Thus, reinforcement learning is particularly well-suited to problems that include a long-term versus short-term reward trade-off.

It has been applied successfully to various problems, including energy storage,[6] robot control,[7] photovoltaic generators,[8] backgammon, checkers,[9] Go (AlphaGo), and autonomous driving systems.

[10] Two elements make reinforcement learning powerful: the use of samples to optimize performance, and the use of function approximation to deal with large environments.

The exploration vs. exploitation trade-off has been most thoroughly studied through the multi-armed bandit problem and for finite state space Markov decision processes in Burnetas and Katehakis (1997).

[12] Reinforcement learning requires clever exploration mechanisms; randomly selecting actions, without reference to an estimated probability distribution, shows poor performance.

is usually a fixed parameter but can be adjusted either according to a schedule (making the agent explore progressively less), or adaptively based on heuristics.

[13] Even if the issue of exploration is disregarded and even if the state was observable (assumed hereafter), the problem remains to use past experience to find out which actions lead to higher cumulative rewards.

From the theory of Markov decision processes it is known that, without loss of generality, the search can be restricted to the set of so-called stationary policies.

A policy is stationary if the action-distribution returned by it depends only on the last state visited (from the observation agent's history).

The brute force approach entails two steps: One problem with this is that the number of policies can be large, or even infinite.

These problems can be ameliorated if we assume some structure and allow samples generated from one policy to influence the estimates made for others.

Monte Carlo methods[15] are used to solve reinforcement learning problems by averaging sample returns.

Learning from actual experience does not require prior knowledge of the environment and can still lead to optimal behavior.

To address this non-stationarity, Monte Carlo methods use the framework of general policy iteration (GPI).

This may also help to some extent with the third problem, although a better solution when returns have high variance is Sutton's temporal difference (TD) methods that are based on the recursive Bellman equation.

[19] Including Deep Q-learning methods when a neural network is used to represent Q, with various applications in stochastic search problems.

[25] Such methods can sometimes be extended to use of non-parametric models, such as when the transitions are simply stored and "replayed" to the learning algorithm.

[26] Model-based methods can be more computationally intensive than model-free approaches, and their utility can be limited by the extent to which the Markov decision process can be learnt.

[clarification needed] Temporal-difference-based algorithms converge under a wider set of conditions than was previously possible (for example, when used with arbitrary, smooth function approximation).

[46] This approach extends reinforcement learning by using a deep neural network and without explicitly designing the state space.

In this research area some studies initially showed that reinforcement learning policies are susceptible to imperceptible adversarial manipulations.

[56] MaxEnt IRL estimates the parameters of a linear model of the reward function by maximizing the entropy of the probability distribution of observed trajectories subject to constraints related to matching expected feature counts.

Recently it has been shown that MaxEnt IRL is a particular case of a more general framework named random utility inverse reinforcement learning (RU-IRL).

[62][63] However, CVaR optimization in risk-averse RL requires special care, to prevent gradient bias[64] and blindness to success.

such that in each iteration executes the following machine learning routine: Initial conditions of the memory are received as input from the genetic environment.

[66][67] The CAA computes, in a crossbar fashion, both decisions about actions and emotions (feelings) about consequence states.

[69] After the training is finished, the agents can be run on a sample of test episodes, and their scores (returns) can be compared.