Maximum subarray problem

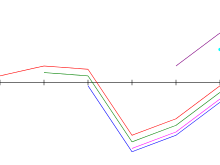

In computer science, the maximum sum subarray problem, also known as the maximum segment sum problem, is the task of finding a contiguous subarray with the largest sum, within a given one-dimensional array A[1...n] of numbers.

Each number in the input array A could be positive, negative, or zero.

Although this problem can be solved using several different algorithmic techniques, including brute force,[2] divide and conquer,[3] dynamic programming,[4] and reduction to shortest paths, a simple single-pass algorithm known as Kadane's algorithm solves it efficiently.

The maximum subarray problem was proposed by Ulf Grenander in 1977 as a simplified model for maximum likelihood estimation of patterns in digitized images.[5]

Grenander was looking to find a rectangular subarray with maximum sum, in a two-dimensional array of real numbers.

A brute-force algorithm for the two-dimensional problem runs in O(n^6) time; because this was prohibitively slow, Grenander proposed the one-dimensional problem to gain insight into its structure.

Grenander derived an algorithm that solves the one-dimensional problem in O(n^2) time,[note 1] improving the brute-force running time of O(n^3).

When Michael Shamos heard about the problem, he overnight devised an O(n log n) divide-and-conquer algorithm for it.

Soon after, Shamos described the one-dimensional problem and its history at a Carnegie Mellon University seminar attended by Jay Kadane, who designed within a minute an O(n)-time algorithm,[5][6][7] which is as fast as possible.[note 2]

In 1982, David Gries obtained the same O(n)-time algorithm by applying Dijkstra's "standard strategy";[8] in 1989, Richard Bird derived it by purely algebraic manipulation of the brute-force algorithm using the Bird–Meertens formalism.[9]

Grenander's two-dimensional generalization can be solved in O(n^3) time either by using Kadane's algorithm as a subroutine, or through a divide-and-conquer approach.

Slightly faster algorithms based on distance matrix multiplication have been proposed by Tamaki & Tokuyama (1998) and by Takaoka (2002).
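The cubic-time approach that uses Kadane's algorithm as a subroutine can be sketched in Python as follows. This is a minimal illustration, not taken from the cited sources: the function name `max_subrectangle_sum` and the representation of the input as a list of equal-length rows are assumptions. For every pair of top and bottom rows, the columns between them are collapsed into a one-dimensional array of column sums, and a single Kadane-style pass is run over that array.

```python
from math import inf


def max_subrectangle_sum(matrix):
    """Largest sum over all non-empty rectangular subarrays of `matrix`.

    `matrix` is assumed to be a list of equal-length rows of numbers.
    Returns -inf if the matrix has no elements.
    """
    if not matrix or not matrix[0]:
        return -inf
    rows, cols = len(matrix), len(matrix[0])
    best = -inf
    for top in range(rows):
        # column_sums[c] holds the sum of column c over rows top..bottom.
        column_sums = [0] * cols
        for bottom in range(top, rows):
            for c in range(cols):
                column_sums[c] += matrix[bottom][c]
            # One-dimensional single pass over the collapsed columns.
            current = 0
            for x in column_sums:
                current = max(x, current + x)
                best = max(best, current)
    return best
```

For a matrix with r rows and c columns this sketch performs on the order of r^2 · c steps, which is cubic for a square input.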

There is some evidence that no significantly faster algorithm exists; an algorithm that solves the two-dimensional maximum subarray problem in O(n^(3−ε)) time, for any ε > 0, would imply a similarly fast algorithm for the all-pairs shortest paths problem.[10]

Maximum subarray problems arise in many fields, such as genomic sequence analysis and computer vision.

Genomic sequence analysis employs maximum subarray algorithms to identify important biological segments of protein sequences that have unusual properties, by assigning scores to points within the sequence that are positive when a motif to be recognized is present, and negative when it is not, and then seeking the maximum subarray among these scores.

These problems include conserved segments, GC-rich regions, tandem repeats, low-complexity filter, DNA binding domains, and regions of high charge.[11]

In computer vision, bitmap images generally consist only of positive values, for which the maximum subarray problem is trivial: the result is always the whole array.

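Kadane's algorithm scans the given array A[1...n] from left to right. In the j-th step, it computes the subarray with the largest sum ending at j; this sum is maintained in variable current_sum.[note 3] Moreover, it computes the subarray with the largest sum anywhere in A[1...j], maintained in variable best_sum,[note 4] and easily obtained as the maximum of all values of current_sum seen so far.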

If the input contains no positive element, the returned value is that of the largest element (i.e., the value closest to 0), or negative infinity if the input was empty.

If the array is nonempty, its first element could be used in place of negative infinity, if needed to avoid mixing numeric and non-numeric values.

This algorithm calculates the maximum subarray ending at each position from the maximum subarray ending at the previous position, so it can be viewed as a case of dynamic programming.
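The single pass described above can be sketched in Python as follows. This is a minimal illustration for the variant that requires a non-empty subarray; the variable names current_sum and best_sum follow the text, while the function name `max_subarray` is an assumption made for this example.

```python
from math import inf


def max_subarray(numbers):
    """Largest sum of a non-empty contiguous subarray of `numbers`.

    Returns -inf for an empty input, as described in the text.
    """
    best_sum = -inf
    current_sum = 0
    for x in numbers:
        # Best sum ending here: extend the previous subarray or restart at x.
        current_sum = max(x, current_sum + x)
        # Best sum seen anywhere so far.
        best_sum = max(best_sum, current_sum)
    return best_sum
```

For the input [-2, 1, -3, 4, -1, 2, 1, -5, 4] this returns 6, the sum of the subarray [4, -1, 2, 1]; for [-3, -1, -2] it returns -1, the largest element.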

Kadane's original algorithm solves the problem variant in which empty subarrays are admitted.

It is obtained by two changes to the code: best_sum is initialized to 0 to account for the empty subarray, and current_sum is reset to 0 whenever extending the previous subarray would make it negative.
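A minimal Python sketch of the variant that admits empty subarrays is given below. It assumes the usual formulation of this variant, in which best_sum starts at 0 and current_sum is never allowed to drop below 0; the function name `max_subarray_allow_empty` is hypothetical.

```python
def max_subarray_allow_empty(numbers):
    """Largest sum of a contiguous subarray, where the empty subarray (sum 0) is allowed."""
    best_sum = 0      # the empty subarray is always a candidate
    current_sum = 0
    for x in numbers:
        # Clamp at 0: an empty suffix is better than a negative one.
        current_sum = max(0, current_sum + x)
        best_sum = max(best_sum, current_sum)
    return best_sum
```

Under this formulation the result is 0 whenever the input contains no positive element, including when the input is empty.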

The algorithm can be modified to keep track of the starting and ending indices of the maximum subarray as well.
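One way to track the indices, sketched in Python under the assumption of a non-empty input and 0-based indexing, is to remember where the current candidate subarray started and to record its bounds whenever a new best sum is found. The function name `max_subarray_with_indices` and the half-open (start, end) convention are choices made for this illustration.

```python
def max_subarray_with_indices(numbers):
    """Return (best_sum, start, end) such that numbers[start:end] has the largest sum.

    Requires a non-empty input; `end` is exclusive.
    """
    if not numbers:
        raise ValueError("input must be non-empty")
    best_sum = current_sum = numbers[0]
    best_start, best_end = 0, 1
    current_start = 0
    for i in range(1, len(numbers)):
        x = numbers[i]
        if current_sum < 0:
            # A negative running sum cannot help: restart the candidate at i.
            current_sum = x
            current_start = i
        else:
            current_sum += x
        if current_sum > best_sum:
            best_sum = current_sum
            best_start, best_end = current_start, i + 1
    return best_sum, best_start, best_end
```

When several subarrays share the maximum sum, this sketch reports the first one encountered during the scan.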

Because of the way this algorithm uses optimal substructures (the maximum subarray ending at each position is calculated in a simple way from a related but smaller and overlapping subproblem: the maximum subarray ending at the previous position), it can be viewed as a simple example of dynamic programming.[4][7]

Similar problems may be posed for higher-dimensional arrays, but their solutions are more complicated; see, e.g., Takaoka (2002).

Brodal & Jørgensen (2007) showed how to find the k largest subarray sums in a one-dimensional array, in the optimal time bound O(n + k).

The Maximum sum k-disjoint subarrays can also be computed in the optimal time bound