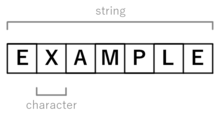

Character (computing)

[1] Examples of characters include letters, numerical digits, and common punctuation marks (such as ".").

The concept also includes control characters, which do not correspond to visible symbols but rather to instructions to format or process the text.

Examples of control characters include carriage return and tab, as well as instructions to printers and other devices that display or otherwise process text.

All modern systems represent text as variable-length sequences of fixed-size code units. For instance, UTF-8 uses a variable number of 8-bit code units to encode a "code point", and Unicode uses a variable number of code points to define a "character".

Many computer fonts consist of glyphs that are indexed by the numerical code of the corresponding character.

Unicode's definition of a character is supplemented by explanatory notes that encourage the reader to distinguish between characters, graphemes, and glyphs, among other things.

A char in the C programming language is a data type with the size of exactly one byte,[6][7] which in turn is defined to be large enough to contain any member of the "basic execution character set".

[9] Unicode code points range up to U+10FFFF, which requires 21 bits. That will not fit in a char on most systems, so some code points are stored as sequences of more than one char, as in the variable-length encoding UTF-8, where each code point takes 1 to 4 bytes.

Modern POSIX documentation attempts to resolve this confusion between "character" and char, defining "character" as a sequence of one or more bytes representing a single graphic symbol or control code, and trying to use "byte" when referring to char data.