Linked list

In computer science, a linked list is a linear collection of data elements whose order is not given by their physical placement in memory.

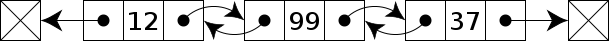

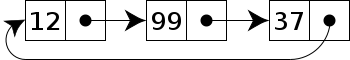

More complex variants add additional links, allowing more efficient insertion or removal of nodes at arbitrary positions.

The principal benefit of a linked list over a conventional array is that the list elements can be easily inserted or removed without reallocation or reorganization of the entire structure because the data items do not need to be stored contiguously in memory or on disk, while restructuring an array at run-time is a much more expensive operation.

Linked lists were developed in 1955–1956, by Allen Newell, Cliff Shaw and Herbert A. Simon at RAND Corporation and Carnegie Mellon University as the primary data structure for their Information Processing Language (IPL).

Newell and Simon were recognized with the ACM Turing Award in 1975 for having "made basic contributions to artificial intelligence, the psychology of human cognition, and list processing".

[1] LISP, standing for list processor, was created by John McCarthy in 1958 while he was at MIT and in 1960 he published its design in a paper in the Communications of the ACM, entitled "Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I".

By the early 1960s, the utility of both linked lists and languages which use these structures as their primary data representation was well established.

Bert Green of the MIT Lincoln Laboratory published a review article entitled "Computer languages for symbol manipulation" in IRE Transactions on Human Factors in Electronics in March 1961 which summarized the advantages of the linked list approach.

A later review article, "A Comparison of list-processing computer languages" by Bobrow and Raphael, appeared in Communications of the ACM in April 1964.

A variant developed by TSC for and marketed by Smoke Signal Broadcasting in California, used doubly linked lists in the same manner.

Many modern operating systems use doubly linked lists to maintain references to active processes, threads, and other dynamic objects.

This convention simplifies and accelerates some list-handling algorithms, by ensuring that all links can be safely dereferenced and that every list (even one that contains no data elements) always has a "first" and "last" node.

If the space reserved for the dynamic array is exceeded, it is reallocated and (possibly) copied, which is an expensive operation.

While one can "delete" an element from an array in constant time by somehow marking its slot as "vacant", this causes fragmentation that impedes the performance of iteration.

This helps with appending elements at the array's end, but inserting into (or removing from) middle positions still carries prohibitive costs due to data moving to maintain contiguity.

A good example that highlights the pros and cons of using dynamic arrays vs. linked lists is by implementing a program that resolves the Josephus problem.

Although trivial for a conventional computer, solving this problem by a parallel algorithm is complicated and has been the subject of much research.

However, insertion and deletion operations are more expensive due to the overhead of tree manipulations to maintain balance.

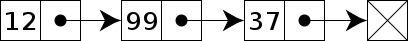

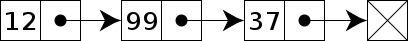

A singly linked linear list is a recursive data structure, because it contains a pointer to a smaller object of the same type.

By contrast, the use of null to denote an empty linear list is more natural and often creates fewer special cases.

For example, when scanning the list looking for a node with a given value x, setting the sentinel's data field to x makes it unnecessary to test for end-of-list inside the loop.

This section gives pseudocode for adding or removing nodes from singly, doubly, and circularly linked lists in-place.

Languages that do not support any type of reference can still create links by replacing pointers with array indices.

This leads to the following issues: For these reasons, this approach is mainly used for languages that do not support dynamic memory allocation.

In general, if a set of data structures needs to be included in linked lists, external storage is the best approach.

Another approach that can be used with some languages involves having different data structures, but all have the initial fields, including the next (and prev if double linked list) references in the same location.

As long as the number of families that a member can belong to is known at compile time, internal storage works fine.

Finding a specific element in a linked list, even if it is sorted, normally requires O(n) time (linear search).

[7] One possible implementation is a skew binary random-access list using the skew binary number system, which involves a list of trees with special properties; this allows worst-case constant time head/cons operations, and worst-case logarithmic time random access to an element by index.

[7] Both stacks and queues are often implemented using linked lists, and simply restrict the type of operations which are supported.