Meta-analysis

Meta-analysis is a method for synthesizing quantitative data from multiple independent studies that address a common research question.

Combining the effect sizes reported by these studies improves statistical power and can resolve uncertainties or discrepancies found in individual studies.
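To make the power argument concrete, here is a minimal Python sketch of fixed-effect (inverse-variance) pooling, assuming each study reports an effect size and its standard error on a common additive scale. The study values are hypothetical. Each study is weighted by the inverse of its variance, so the pooled standard error, the square root of one over the summed weights, is smaller than that of any single study.

```python
import math

def inverse_variance_pool(effects, std_errors):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    weights = [1.0 / se**2 for se in std_errors]  # weight = 1 / sampling variance
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se

# Hypothetical effect sizes (e.g. standardized mean differences) and standard errors
effects = [0.30, 0.10, 0.45, 0.20]
std_errors = [0.20, 0.15, 0.25, 0.18]

pooled, pooled_se = inverse_variance_pool(effects, std_errors)
z = pooled / pooled_se
print(f"pooled = {pooled:.3f}, SE = {pooled_se:.3f}, z = {z:.2f}")
```

With these invented numbers none of the four studies reaches |z| > 1.96 on its own, whereas the pooled estimate does; that is the gain in power described above.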

Meta-analyses are integral in supporting research grant proposals, shaping treatment guidelines, and influencing health policies.

They are also pivotal in summarizing existing research to guide future studies, thereby cementing their role as a fundamental methodology in metascience.

[4] While Glass is credited with authoring the first modern meta-analysis, a paper published in 1904 by the statistician Karl Pearson in the British Medical Journal[5] collated data from several studies of typhoid inoculation and is regarded as the first use of a meta-analytic approach to aggregate the outcomes of multiple clinical studies.

The first example of such criticism came from Hans Eysenck, who, in a 1978 article responding to the work of Mary Lee Smith and Gene Glass, called meta-analysis an "exercise in mega-silliness".

[36] There are more than 80 tools available to assess the quality and risk of bias in observational studies, reflecting the diversity of research approaches across fields.

Other quality measures that may be more relevant for correlational studies include sample size, psychometric properties, and reporting of methods.

On the other hand, indirect aggregate data estimate the relative effect of two treatments that were each compared against a similar control group, with the comparison made within a meta-analysis.
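A common way to form such an indirect estimate is the Bucher adjusted indirect comparison: on an additive scale such as the log odds ratio, the A-versus-B effect is the difference between the A-versus-C and B-versus-C effects, and because the two estimates come from independent studies their variances add. The Python sketch below assumes pooled estimates against the shared comparator C are already available; the numbers are hypothetical.

```python
import math
from statistics import NormalDist

def bucher_indirect(d_ac, se_ac, d_bc, se_bc, level=0.95):
    """Adjusted indirect comparison of A vs B through a common comparator C.

    d_ac: effect of A relative to C; d_bc: effect of B relative to C,
    both on an additive scale (e.g. log odds ratios)."""
    d_ab = d_ac - d_bc                      # (A - C) - (B - C) = A - B
    se_ab = math.sqrt(se_ac**2 + se_bc**2)  # independent evidence: variances add
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d_ab, se_ab, (d_ab - z * se_ab, d_ab + z * se_ab)

# Hypothetical pooled log odds ratios versus the shared control C
d_ab, se_ab, ci = bucher_indirect(d_ac=-0.50, se_ac=0.12, d_bc=-0.20, se_bc=0.15)
print(f"log OR (A vs B) = {d_ab:.2f}, SE = {se_ab:.3f}, CI = ({ci[0]:.2f}, {ci[1]:.2f})")
```

The indirect estimate is always less precise than either input, which is one reason direct evidence is preferred when it exists.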

Although it is conventionally believed that one-stage and two-stage methods yield similar results, recent studies have shown that they may occasionally lead to different conclusions.
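The two-stage approach can be sketched in Python as follows, assuming individual participant data with a continuous outcome in two arms per study (all values hypothetical). Stage one reduces each study to a mean difference and its variance; stage two pools those aggregates with inverse-variance weights. A one-stage analysis would instead fit a single model, typically a mixed model with study-specific terms, to all participants at once; that is not shown here.

```python
import math
from statistics import mean, variance

def mean_difference(treated, control):
    """Stage 1: per-study mean difference and its sampling variance."""
    md = mean(treated) - mean(control)
    var = variance(treated) / len(treated) + variance(control) / len(control)
    return md, var

def two_stage_fixed_effect(studies):
    """Stage 2: inverse-variance pooling of the per-study estimates."""
    estimates = [mean_difference(t, c) for t, c in studies]
    weights = [1.0 / v for _, v in estimates]
    pooled = sum(w * md for w, (md, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))

# Hypothetical participant-level outcomes: (treated arm, control arm) per study
studies = [
    ([5.1, 6.0, 5.8, 6.3, 5.5], [4.9, 5.2, 5.0, 5.6, 4.8]),
    ([6.2, 5.9, 6.8, 6.1], [5.5, 5.8, 5.1, 5.9]),
    ([5.4, 5.0, 5.9, 6.1, 5.7, 5.2], [5.3, 4.7, 5.0, 5.5, 4.9, 5.1]),
]
pooled, se = two_stage_fixed_effect(studies)
print(f"two-stage pooled mean difference = {pooled:.3f} (SE {se:.3f})")
```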

[57] Most importantly, the fixed effects model assumes that all included studies investigate the same population and use the same variable and outcome definitions, and so on; in other words, that they all estimate one common true effect.
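One common check on this assumption is Cochran's Q, the weighted sum of squared deviations of the study effects from the pooled effect. If all studies share one true effect, Q approximately follows a chi-squared distribution with k - 1 degrees of freedom, and I² = max(0, (Q - df)/Q) expresses any excess as a proportion. The Python sketch below computes both for two hypothetical data sets; the chi-squared p-value is omitted to keep to the standard library.

```python
def heterogeneity(effects, std_errors):
    """Cochran's Q, its degrees of freedom, and I^2 under inverse-variance weights."""
    weights = [1.0 / se**2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, df, i_squared

# Hypothetical data: a fairly consistent set and a clearly heterogeneous one
q1, df1, i2_1 = heterogeneity([0.30, 0.10, 0.45, 0.20], [0.20, 0.15, 0.25, 0.18])
q2, df2, i2_2 = heterogeneity([0.0, 0.8, -0.3, 0.6], [0.1, 0.1, 0.1, 0.1])
print(f"set 1: Q = {q1:.2f} on {df1} df, I^2 = {i2_1:.0%}")
print(f"set 2: Q = {q2:.2f} on {df2} df, I^2 = {i2_2:.0%}")
```

A Q well above its degrees of freedom, as in the second set, is evidence against a single common effect.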

[64] Another issue with the random effects model is that the most commonly used confidence intervals generally fail to maintain coverage at or above the specified nominal level; they therefore substantially underestimate the statistical error and can lead to overconfident conclusions.
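The most common random-effects interval is easy to see in code. The sketch below, an illustrative Python version of the DerSimonian-Laird method with a Wald-type interval, plugs the estimated between-study variance tau² into the weights and then uses a normal quantile as if tau² were known exactly; ignoring that estimation uncertainty is one reason coverage falls below nominal when studies are few. Alternatives such as the Hartung-Knapp-Sidik-Jonkman adjustment (a t-based interval with a rescaled variance) are not shown. The input data are hypothetical.

```python
import math
from statistics import NormalDist

def dersimonian_laird(effects, std_errors, level=0.95):
    """Random-effects pooling with the DerSimonian-Laird tau^2 and a Wald-type CI."""
    w = [1.0 / se**2 for se in std_errors]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # method-of-moments estimate, truncated at zero
    w_re = [1.0 / (se**2 + tau2) for se in std_errors]
    pooled = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    se_pooled = math.sqrt(1.0 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # normal quantile: treats tau^2 as known
    return pooled, tau2, (pooled - z * se_pooled, pooled + z * se_pooled)

# Hypothetical, clearly heterogeneous study results
pooled, tau2, ci = dersimonian_laird([0.0, 0.8, -0.3, 0.6], [0.1, 0.1, 0.1, 0.1])
print(f"pooled = {pooled:.3f}, tau^2 = {tau2:.4f}, CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```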

Thus it appears that small meta-analyses can yield an incorrect between-study variance estimate of zero, leading to a false assumption of homogeneity.
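A small simulation illustrates the point. Under the hypothetical settings below (true tau² = 0.04 and every study's within-study variance also 0.04), the DerSimonian-Laird estimate is truncated to zero whenever Q ≤ k - 1. For three studies this happens with probability 1 - e^(-1/2), roughly 39%, even though the true between-study variance is positive; with twenty studies it is far rarer. The function names and parameter values are assumptions for illustration.

```python
import random

def dl_tau2(effects, variances):
    """DerSimonian-Laird between-study variance, truncated at zero."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    mean_fe = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - mean_fe) ** 2 for wi, y in zip(w, effects))
    c = sw - sum(wi**2 for wi in w) / sw
    return max(0.0, (q - (len(effects) - 1)) / c)

def share_of_zero_estimates(k, tau2=0.04, within_var=0.04, n_sim=2000, seed=1):
    """Simulate meta-analyses of k studies with true tau2 > 0; return share estimated as 0."""
    rng = random.Random(seed)
    zeros = 0
    for _ in range(n_sim):
        # observed effect = true mean (0) + between-study + within-study noise
        effects = [rng.gauss(0.0, (tau2 + within_var) ** 0.5) for _ in range(k)]
        if dl_tau2(effects, [within_var] * k) == 0.0:
            zeros += 1
    return zeros / n_sim

for k in (3, 20):
    print(f"k = {k:2d}: share of zero tau^2 estimates = {share_of_zero_estimates(k):.2f}")
```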

[83] Indirect comparison meta-analysis methods (also called network meta-analyses, in particular when multiple treatments are assessed simultaneously) generally use two main methodologies.

The alternative methodology uses complex statistical modelling to include multiple-arm trials and to compare all competing treatments simultaneously.
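As an illustration of modelling all comparisons at once, the Python sketch below fits a fixed-effect network of three hypothetical treatments A, B and C by weighted least squares. It assumes consistency (the B-versus-C effect equals d_AC - d_AB) and, as a simplification, takes only independent two-arm contrasts, so it does not model the within-trial correlation that multi-arm trials introduce and that full network meta-analysis methods handle. The treatment labels, effects, and standard errors are hypothetical.

```python
def network_fixed_effect(contrasts):
    """Estimate d_AB and d_AC (effects of B and C relative to reference A)
    by weighted least squares, assuming consistency.

    contrasts: list of (first, second, effect, se), where effect is the effect
    of `second` relative to `first` on an additive scale (e.g. log odds ratio).
    """
    index = {"B": 0, "C": 1}          # reference treatment A has no parameter
    xtwx = [[0.0, 0.0], [0.0, 0.0]]   # accumulates X'WX
    xtwy = [0.0, 0.0]                 # accumulates X'Wy
    for first, second, effect, se in contrasts:
        row = [0.0, 0.0]
        if second in index:
            row[index[second]] += 1.0
        if first in index:
            row[index[first]] -= 1.0
        w = 1.0 / se**2
        for i in range(2):
            xtwy[i] += w * row[i] * effect
            for j in range(2):
                xtwx[i][j] += w * row[i] * row[j]
    # solve the 2x2 normal equations by Cramer's rule
    det = xtwx[0][0] * xtwx[1][1] - xtwx[0][1] * xtwx[1][0]
    d_ab = (xtwx[1][1] * xtwy[0] - xtwx[0][1] * xtwy[1]) / det
    d_ac = (xtwx[0][0] * xtwy[1] - xtwx[1][0] * xtwy[0]) / det
    return d_ab, d_ac

# Hypothetical direct evidence; the B-C contrast disagrees slightly with A-B and A-C
contrasts = [("A", "B", 0.2, 0.1), ("A", "C", 0.5, 0.1), ("B", "C", 0.6, 0.1)]
d_ab, d_ac = network_fixed_effect(contrasts)
print(f"d_AB = {d_ab:.3f}, d_AC = {d_ac:.3f}, implied d_BC = {d_ac - d_ab:.3f}")
```

Each network estimate blends direct and indirect evidence: here the implied B-versus-C effect lies between the direct value (0.6) and the indirect value from A-B and A-C (0.3).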

To complicate matters further, because of the nature of Markov chain Monte Carlo (MCMC) estimation, overdispersed starting values have to be chosen for a number of independent chains so that convergence can be assessed.
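The role of overdispersed starting values can be illustrated with the Gelman-Rubin potential scale reduction factor (R-hat), which compares between-chain and within-chain variance. In the Python sketch below, a toy random-walk Metropolis sampler targeting a standard normal stands in for a real network meta-analysis posterior (an assumption for illustration); four chains start far apart, the first half of each is discarded as warm-up, and an R-hat near 1 suggests the chains have mixed. This is the original, non-split form of R-hat; current software generally reports split or rank-normalized variants.

```python
import math
import random
from statistics import mean, variance

def metropolis_chain(start, n_iter, rng, step=1.0):
    """Toy random-walk Metropolis sampler whose target is a standard normal."""
    x, draws = start, []
    for _ in range(n_iter):
        proposal = x + rng.gauss(0.0, step)
        # log acceptance ratio for N(0, 1): log p(proposal) - log p(x)
        log_ratio = 0.5 * (x * x - proposal * proposal)
        if math.log(1.0 - rng.random()) < log_ratio:  # 1 - U avoids log(0)
            x = proposal
        draws.append(x)
    return draws

def gelman_rubin(chains):
    """Original (non-split) potential scale reduction factor for equal-length chains."""
    n = len(chains[0])
    b = n * variance([mean(c) for c in chains])  # between-chain variance
    w = mean([variance(c) for c in chains])      # average within-chain variance
    pooled_var = (n - 1) / n * w + b / n
    return math.sqrt(pooled_var / w)

rng = random.Random(2024)
starts = [-10.0, -3.0, 3.0, 10.0]  # deliberately overdispersed starting values
chains = [metropolis_chain(s, 4000, rng)[2000:] for s in starts]  # drop warm-up
print(f"R-hat = {gelman_rubin(chains):.3f}")
```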

Although the complexity of the Bayesian approach limits usage of this methodology, recent tutorial papers are trying to increase accessibility of the methods.

Great claims are sometimes made for the inherent ability of the Bayesian framework to handle network meta-analysis and its greater flexibility.

[106] More recently, and under the influence of a push for open practices in science, tools have been developed to create "crowd-sourced" living meta-analyses that are updated by communities of scientists,[107][108] in the hope of making all the subjective choices more explicit.

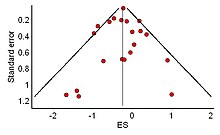

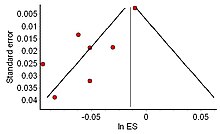

In contrast, when there is no publication bias, the effect of the smaller studies has no reason to be skewed to one side and so a symmetric funnel plot results.
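Funnel-plot asymmetry is often assessed with Egger's regression test, which regresses each study's standardized effect (effect divided by its standard error) on its precision (one over the standard error); with no small-study effect the intercept should be near zero. The minimal Python sketch below computes the ordinary least-squares intercept and slope on noise-free hypothetical data; a formal test would also need the intercept's standard error and a t-test on n - 2 degrees of freedom.

```python
def egger_regression(effects, std_errors):
    """Egger's regression: OLS of y/se on 1/se. Returns (intercept, slope)."""
    precision = [1.0 / se for se in std_errors]
    standardized = [y / se for y, se in zip(effects, std_errors)]
    n = len(precision)
    mean_x = sum(precision) / n
    mean_z = sum(standardized) / n
    sxx = sum((x - mean_x) ** 2 for x in precision)
    sxz = sum((x - mean_x) * (z - mean_z) for x, z in zip(precision, standardized))
    slope = sxz / sxx                     # estimates the underlying effect
    intercept = mean_z - slope * mean_x   # far from zero suggests asymmetry
    return intercept, slope

ses = [0.05, 0.10, 0.20, 0.30, 0.40]
symmetric = [0.3 for _ in ses]               # same effect at every study size
asymmetric = [0.3 + 1.0 * se for se in ses]  # smaller studies report larger effects
print(egger_regression(symmetric, ses))
print(egger_regression(asymmetric, ses))
```

In the symmetric set the intercept is zero and the slope recovers the common effect of 0.3; in the asymmetric set, where smaller studies report larger effects, the intercept moves to 1.0.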

However, small-study effects may be just as problematic for the interpretation of meta-analyses, and the onus is on meta-analytic authors to investigate potential sources of bias.

[129] For example, in 1998, a US federal judge found that the United States Environmental Protection Agency had abused the meta-analysis process to produce a study claiming cancer risks to non-smokers from environmental tobacco smoke (ETS) with the intent to influence policymakers to pass smoke-free workplace laws.

Conversely, results from meta-analyses may also make certain hypotheses or interventions seem nonviable and preempt further research or approvals, even though certain modifications (such as intermittent administration, personalized criteria, and combination measures) can lead to substantially different results, including in cases where such modifications have been successfully identified and applied in small-scale studies that were included in the meta-analysis.

In large clinical trials, planned, sequential analyses are sometimes used if there is considerable expense or potential harm associated with testing participants.

[135] In applied behavioural science, "megastudies" have been proposed to investigate the efficacy of many different interventions designed in an interdisciplinary manner by separate teams.

For example, studies that include small samples or researcher-made measures lead to inflated effect size estimates.

[138][139] Modern statistical meta-analysis does more than just combine the effect sizes of a set of studies using a weighted average.
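One example of going beyond a weighted average is meta-regression, which models how effect sizes vary with a study-level moderator. The Python sketch below is a fixed-effect version: weighted least squares of the effect on a single moderator with inverse-variance weights. A random-effects meta-regression would add an estimated tau² to each study's variance; that is omitted here. The moderator (a hypothetical dose) and all values are invented and noise-free, so the fitted line is recovered exactly.

```python
def meta_regression(effects, std_errors, moderator):
    """Fixed-effect meta-regression: inverse-variance WLS of effect on one moderator."""
    w = [1.0 / se**2 for se in std_errors]
    sw = sum(w)
    mean_m = sum(wi * m for wi, m in zip(w, moderator)) / sw  # weighted mean moderator
    mean_y = sum(wi * y for wi, y in zip(w, effects)) / sw    # weighted mean effect
    smm = sum(wi * (m - mean_m) ** 2 for wi, m in zip(w, moderator))
    smy = sum(wi * (m - mean_m) * (y - mean_y) for wi, m, y in zip(w, moderator, effects))
    slope = smy / smm
    return mean_y - slope * mean_m, slope

effects = [0.3, 0.5, 0.7, 0.9]
std_errors = [0.10, 0.20, 0.15, 0.25]
dose = [10, 20, 30, 40]  # hypothetical study-level moderator
intercept, slope = meta_regression(effects, std_errors, dose)
print(f"effect = {intercept:.3f} + {slope:.4f} * dose")
```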

[148] Meta-analysis of whole genome sequencing studies provides an attractive solution to the problem of collecting large sample sizes for discovering rare variants associated with complex phenotypes.

Some methods have been developed to enable functionally informed rare variant association meta-analysis in biobank-scale cohorts using efficient approaches for summary statistic storage.
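Summary-statistic meta-analysis of a gene-level rare-variant test can be sketched as follows, assuming each study shares per-variant score statistics and their covariance matrix for the same ordered set of variants, and that samples do not overlap. For a weighted burden test, the weighted scores and their variances simply add across studies, so only these summaries, not genotypes, need to be stored. The variant weights (standing in for functional annotation scores), the function name, and all statistics are hypothetical; variance-component tests such as SKAT use a quadratic form of the scores and are not shown.

```python
from statistics import NormalDist

def burden_meta(studies, weights):
    """Meta-analyze a weighted burden test from per-study summary statistics.

    studies: list of (scores, cov) with per-variant score statistics and their
    covariance matrix, in the same variant order for every study (assumed)."""
    m = len(weights)
    u_total = 0.0  # summed weighted burden score
    v_total = 0.0  # summed variance of that score
    for scores, cov in studies:
        u_total += sum(weights[j] * scores[j] for j in range(m))
        v_total += sum(weights[i] * cov[i][j] * weights[j]
                       for i in range(m) for j in range(m))
    z = u_total / v_total ** 0.5
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))  # two-sided normal p-value
    return z, p

weights = [1.0, 0.5, 0.8]  # hypothetical functional-annotation weights per variant
study_1 = ([1.8, -0.4, 2.1], [[3.0, 0.2, 0.0], [0.2, 1.5, 0.1], [0.0, 0.1, 2.5]])
study_2 = ([0.9, 0.3, 1.2], [[2.0, 0.1, 0.0], [0.1, 1.0, 0.0], [0.0, 0.0, 1.8]])
z, p = burden_meta([study_1, study_2], weights)
print(f"burden z = {z:.2f}, two-sided p = {p:.3g}")
```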

This allows researchers to examine patterns in the fuller panorama of more accurately estimated results and draw conclusions that consider the broader context (e.g., how personality-intelligence relations vary by trait family).