Metadata

In many countries, government organizations routinely store metadata about emails, telephone calls, web pages, video traffic, IP connections, and cell phone locations.

Slate reported in 2013 that the United States government's interpretation of "metadata" could be broad, and might include message content such as the subject lines of emails.

[22] Descriptive metadata is typically used for discovery and identification, as information to search and locate an object, such as title, authors, subjects, keywords, and publisher.
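As a minimal sketch of how descriptive metadata supports discovery, the record below carries the fields named above, and a small search function matches a query against them. The `DescriptiveRecord` class, the `search` helper, and the sample catalog entries are hypothetical and exist only for illustration; real catalogs use established schemas and far richer matching.

```python
from dataclasses import dataclass, field


@dataclass
class DescriptiveRecord:
    """Hypothetical descriptive metadata record for one object."""
    title: str
    authors: list[str]
    publisher: str
    subjects: list[str] = field(default_factory=list)
    keywords: list[str] = field(default_factory=list)


def search(records, term):
    """Return records whose descriptive fields contain the term (case-insensitive)."""
    needle = term.lower()
    hits = []
    for rec in records:
        # Flatten every descriptive field into one list of strings to match against.
        haystack = [rec.title, rec.publisher, *rec.authors, *rec.subjects, *rec.keywords]
        if any(needle in value.lower() for value in haystack):
            hits.append(rec)
    return hits


# Illustrative catalog entries, invented for this sketch.
catalog = [
    DescriptiveRecord("Intro to Cataloging", ["A. Example"], "Sample Press",
                      subjects=["library science"], keywords=["cataloging"]),
    DescriptiveRecord("Field Guide to Birds", ["B. Example"], "Sample Press",
                      subjects=["ornithology"], keywords=["birds", "identification"]),
]

matches = search(catalog, "birds")
print([rec.title for rec in matches])
```

The search never looks at the object itself, only at its description, which is the sense in which descriptive metadata is used to locate an object.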

Although efforts to describe and standardize the varied accessibility needs of information seekers are becoming more robust, their adoption into established metadata schemas is less developed.

There are many sources of these vocabularies, both meta and master data: UML, EDIFACT, XSD, Dewey/UDC/LoC, SKOS, ISO-25964, Pantone, Linnaean Binomial Nomenclature, etc.

The process indexes pages and then matches text strings using its complex algorithm; there is no intelligence or "inferencing" occurring, just the illusion thereof.

Metadata with a high granularity allows for deeper, more detailed, and more structured information and enables a greater level of technical manipulation.

Although this standard originally described itself as a "data element" registry, its purpose is to support describing and registering metadata content independently of any particular application, so that the descriptions can be discovered and reused by humans or computers when developing new applications or databases, or when analyzing data collected in accordance with the registered metadata content.

One advocate of microformats, Tantek Çelik, characterized a problem with alternative approaches: "Here's a new language we want you to learn, and now you need to output these additional files on your server."

Entities such as Eurostat,[51] the European System of Central Banks,[51] and the U.S. Environmental Protection Agency[52] have implemented these and other such standards and guidelines with the goal of improving "efficiency when managing statistical business processes".

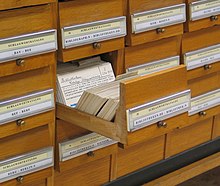

Such data helps classify, aggregate, identify, and locate a particular book, DVD, magazine, or any object a library might hold in its collection.

While often based on library principles, the focus on non-librarian use, especially in providing metadata, means they do not follow traditional or common cataloging approaches.

Standards for metadata in digital libraries include Dublin Core, METS, MODS, DDI, DOI, URN, PREMIS schema, EML, and OAI-PMH.
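To make one of these standards concrete, the following sketch serializes a few Dublin Core elements as XML using Python's standard library. The namespace URI is that of the Dublin Core element set; the `to_dublin_core` helper, the `record` wrapper element, and the sample values are assumptions made for illustration and are not prescribed by the standard.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core element set namespace
ET.register_namespace("dc", DC_NS)


def to_dublin_core(fields):
    """Serialize a dict mapping Dublin Core element names to values as XML text."""
    root = ET.Element("record")  # hypothetical wrapper element, not part of Dublin Core
    for name, value in fields.items():
        # Qualify each element with the Dublin Core namespace, e.g. dc:title.
        child = ET.SubElement(root, f"{{{DC_NS}}}{name}")
        child.text = value
    return ET.tostring(root, encoding="unicode")


# Sample values invented for this sketch.
xml_text = to_dublin_core({
    "title": "Example Report",
    "creator": "A. Example",
    "date": "2020-01-01",
})
print(xml_text)
```

In practice such elements are usually embedded in a container format defined by another standard or by the repository, so the wrapper element here stands in for whatever that container would be.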

The data contained within manuscripts or accompanying them as supplementary material is less often subject to metadata creation,[59][60] though it may be submitted to, for example, biomedical databases after publication.

[66][67] Moreover, various metadata about scientific outputs can be created or complemented – for instance, some organizations attempt to track and link citations of papers as 'Supporting', 'Mentioning' or 'Contrasting' the study.

The early stages of standardization in archiving, description and cataloging within the museum community began in the late 1990s with the development of standards such as Categories for the Description of Works of Art (CDWA), Spectrum, CIDOC Conceptual Reference Model (CRM), Cataloging Cultural Objects (CCO) and the CDWA Lite XML schema.

[74] The Anglo-American Cataloguing Rules (AACR), originally developed for characterizing books, have also been applied to cultural objects, works of art and architecture.

[73][75] Additionally, museums often employ standardized commercial collection management software that prescribes and limits the ways in which archivists can describe artworks and cultural objects.

[75] Museums are encouraged to use controlled vocabularies that are contextual and relevant to their collections and enhance the functionality of their digital information systems.

Using metadata removal tools to "clean" or redact documents can mitigate the risks of unwittingly sending sensitive data.

[81] This new law means that both security and policing agencies will be allowed to access up to two years of an individual's metadata, with the aim of making it easier to prevent terrorist attacks and serious crimes.

Legislative metadata has been the subject of some discussion in law.gov forums such as workshops held by the Legal Information Institute at the Cornell Law School on 22 and 23 March 2010.

[82] These discussions outlined a handful of key points.

Australian medical research pioneered the definition of metadata for applications in health care.

When media files have identifiers set, or when identifiers can be generated, information such as file tags and descriptions can be pulled or scraped from the Internet, for example for movies.

Most major broadcast sporting events, such as the FIFA World Cup or the Olympic Games, use this metadata to distribute their video content to TV stations through keywords.

[100][101] Metadata that describes geographic objects in electronic storage or format (such as datasets, maps, features, or documents with a geospatial component) has a history dating back to at least 1994.

As a result, almost all digital audio formats, including MP3, Broadcast WAV, and AIFF files, have similar standardized locations that can be populated with metadata.
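One example of such a standardized location is the ID3v1 tag, which occupies the final 128 bytes of an MP3 file and begins with the marker `TAG`. The sketch below parses that block with Python's `struct` module. It covers ID3v1 only (the newer ID3v2 tag sits at the start of the file and has a different layout), and the `read_id3v1` helper and the hand-built sample bytes are hypothetical.

```python
import struct

# ID3v1 layout: "TAG" + title(30) + artist(30) + album(30) + year(4) + comment(30) + genre(1)
ID3V1_FORMAT = "3s30s30s30s4s30sB"
ID3V1_SIZE = struct.calcsize(ID3V1_FORMAT)  # 128 bytes


def read_id3v1(data):
    """Parse an ID3v1 tag from the final 128 bytes of an MP3 byte string, or return None."""
    if len(data) < ID3V1_SIZE:
        return None
    marker, title, artist, album, year, comment, genre = struct.unpack(
        ID3V1_FORMAT, data[-ID3V1_SIZE:]
    )
    if marker != b"TAG":
        return None

    def text(raw):
        # Fields are fixed width and padded with NUL bytes or spaces.
        return raw.split(b"\x00", 1)[0].decode("latin-1").strip()

    return {
        "title": text(title),
        "artist": text(artist),
        "album": text(album),
        "year": text(year),
        "comment": text(comment),
        "genre": genre,  # numeric genre code
    }


# Build a fake file: placeholder bytes standing in for audio, followed by a hand-made tag.
fake_tag = struct.pack(ID3V1_FORMAT, b"TAG", b"Example Song", b"Example Artist",
                       b"Example Album", b"1999", b"", 255)
fake_file = b"\x00" * 64 + fake_tag
print(read_id3v1(fake_file))
```

Because the tag lives at a fixed offset with fixed-width fields, a player or editor can read or rewrite it without decoding any audio.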

Common editors such as TagLib support MP3, Ogg Vorbis, FLAC, MPC, Speex, WavPack, TrueAudio, WAV, AIFF, MP4, and ASF file formats.

[110] One of the first satirical examinations of the concept of metadata as we understand it today is American science fiction author Hal Draper's short story, "MS Fnd in a Lbry" (1961).

As a cautionary tale, the story prefigures the modern consequences of allowing metadata to become more important than the real data it describes, and the risks inherent in that eventuality.