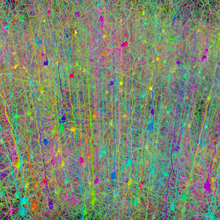

Neural network (biology)

Neural network theory has helped to clarify how the neurons in the brain function and has provided a basis for efforts to create artificial intelligence.

The preliminary theoretical base for contemporary neural networks was independently proposed by Alexander Bain[4] (1873) and William James[5] (1890).

The general scientific community at the time was skeptical of Bain's[4] theory because it required what appeared to be an inordinate number of neural connections within the brain.

James'[5] theory was similar to Bain's;[4] however, he suggested that memories and actions resulted from electrical currents flowing among the neurons in the brain.

The text by Rumelhart and McClelland[9] (1986) provided a full exposition on the use of connectionism in computers to simulate neural processes.

Other researchers showed that adding feedback connections between a resonance pair can support successful propagation of a single pulse packet throughout the entire network.

Scientists used a variety of statistical tools to infer the connectivity of a network based on the observed neuronal activities, i.e., spike trains.[13]

While research was initially concerned mostly with the electrical characteristics of neurons, a particularly important part of the investigation in recent years has been the exploration of the role of neuromodulators such as dopamine, acetylcholine, and serotonin in behaviour and learning.[citation needed]

Biophysical models, such as BCM theory, have been important in understanding mechanisms for synaptic plasticity, and have had applications in both computer science and neuroscience.
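
To give a concrete sense of the kind of plasticity rule BCM theory describes, the following is a minimal Python sketch of its commonly stated form: a synapse is strengthened when postsynaptic activity exceeds a sliding threshold and weakened when it falls below, while the threshold itself tracks the recent average of squared activity. The function name `bcm_step`, the single linear neuron, and the parameter values `eta` and `tau` are assumptions chosen for illustration and do not come from the article or its sources.

```python
import random


def bcm_step(w, x, theta, eta=0.01, tau=50.0):
    """Apply one BCM-style update to a single linear neuron.

    w     -- list of synaptic weights
    x     -- list of presynaptic input rates (same length as w)
    theta -- current sliding modification threshold
    eta   -- learning rate (illustrative value)
    tau   -- averaging time constant for the threshold (illustrative value)
    """
    # Postsynaptic activity of a linear neuron (a simplifying assumption).
    y = sum(wi * xi for wi, xi in zip(w, x))
    # Weight change is proportional to x * y * (y - theta):
    # potentiation when y > theta, depression when 0 < y < theta.
    new_w = [wi + eta * xi * y * (y - theta) for wi, xi in zip(w, x)]
    # The threshold slides toward the running average of y squared.
    new_theta = theta + (y * y - theta) / tau
    return new_w, new_theta, y


if __name__ == "__main__":
    random.seed(0)
    patterns = [[1.0, 0.0], [0.0, 1.0]]  # two hypothetical input patterns
    w, theta = [0.5, 0.5], 0.25
    for _ in range(5000):
        w, theta, _ = bcm_step(w, random.choice(patterns), theta)
    print("weights:", w, "threshold:", theta)
```

Running the script presents the two hypothetical patterns in random order and prints the resulting weights and threshold. The sliding threshold is the design choice that distinguishes this rule from a plain Hebbian update, which has no built-in mechanism for depression.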