Normal mapping

In 1978 Jim Blinn described how the normals of a surface could be perturbed to make geometrically flat faces have a detailed appearance.

In 1998, two papers presented key ideas for transferring detail with normal maps from high- to low-polygon meshes: "Appearance Preserving Simplification" by Cohen et al., SIGGRAPH 1998,[4] and "A general method for preserving attribute values on simplified meshes" by Cignoni et al., IEEE Visualization '98.[5]

The former introduced the idea of storing surface normals directly in a texture, rather than displacements, though it required the low-detail model to be generated by a particular constrained simplification algorithm.

The latter presented a simpler approach that decouples the high- and low-polygon meshes and allows the recreation of any attributes of the high-detail model (color, texture coordinates, displacements, etc.).

The combination of storing normals in a texture with the more general creation process is still used by most currently available tools.

The orientation of coordinate axes differs depending on the space in which the normal map was encoded.

A straightforward implementation encodes normals in object space so that the red, green, and blue components correspond directly with the X, Y, and Z coordinates.

However, object-space normal maps cannot be easily reused on multiple models, as the orientation of the surfaces differs.

Tangent space normal maps can be identified by their dominant purple color, corresponding to a vector facing directly out from the surface.
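The mapping between normal components and color channels can be sketched as follows. This is a minimal illustration, assuming 8-bit channels and the common convention of remapping each component from [-1, 1] to [0, 255]; the function names `encode_normal` and `decode_normal` are hypothetical, and real tools may differ in scale, rounding, and axis orientation.

```python
import math

def encode_normal(n):
    """Map a normal with components in [-1, 1] to an 8-bit RGB triple."""
    length = math.sqrt(sum(c * c for c in n))
    unit = [c / length for c in n]  # make sure the input is unit length
    # Assumed convention: -1 -> 0, +1 -> 255.
    return tuple(int(round((c * 0.5 + 0.5) * 255)) for c in unit)

def decode_normal(rgb):
    """Map an 8-bit RGB triple back to an approximately unit-length normal."""
    v = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)  # renormalize to undo quantization drift

# A tangent-space vector facing directly out of the surface.
flat = encode_normal((0.0, 0.0, 1.0))
```

Under these assumptions, the unperturbed tangent-space normal (0, 0, 1) encodes to roughly half red, half green, and full blue, which is the light purple that dominates such maps.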

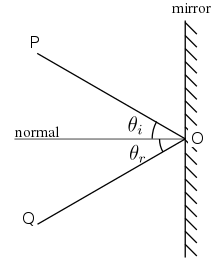

Surface normals are used in computer graphics primarily for the purposes of lighting, through mimicking a phenomenon called specular reflection.

Note that the light information is local, and so the surface does not necessarily need to be orientable as a whole.
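To illustrate why changing only the normal changes the apparent shape, the sketch below evaluates a simple local lighting model at one surface point. It assumes a Lambertian diffuse term and a Blinn-Phong specular term, which are swapped in here for illustration and are not prescribed by the text; `shade` and its `shininess` parameter are hypothetical names.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(normal, to_light, to_viewer, shininess=32.0):
    """Return (diffuse, specular) intensities for a single surface point.

    Assumes the light and viewer directions are not exactly opposite,
    otherwise the half vector below would have zero length.
    """
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)
    diffuse = max(dot(n, l), 0.0)
    # Half vector between the light and viewer directions (Blinn-Phong).
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    specular = max(dot(n, h), 0.0) ** shininess if diffuse > 0.0 else 0.0
    return diffuse, specular

# Same point, light, and viewer; only the normal differs.
flat_result = shade((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
bumpy_result = shade((0.3, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
```

Because the computation uses only the normal and the directions at that point, the perturbed normal dims both terms even though the underlying geometry is unchanged.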

In order to find the perturbation in the normal, the tangent space must be correctly calculated.[7]

Most often the normal is perturbed in a fragment shader after applying the model and view matrices.[citation needed]
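One common way to obtain a tangent space is to derive it per triangle from vertex positions and texture coordinates. The sketch below is an illustration under that assumption, written as CPU-side Python rather than shader code; `triangle_tbn` and `perturb` are hypothetical names, and production pipelines typically average and orthogonalize these vectors per vertex.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def triangle_tbn(p0, p1, p2, uv0, uv1, uv2):
    """Return (tangent, bitangent, normal) for one triangle.

    Solves e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T and B.
    Assumes the UVs are not degenerate (det != 0).
    """
    e1, e2 = sub(p1, p0), sub(p2, p0)
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    r = 1.0 / (du1 * dv2 - du2 * dv1)
    tangent = tuple((dv2 * a - dv1 * b) * r for a, b in zip(e1, e2))
    bitangent = tuple((du1 * b - du2 * a) * r for a, b in zip(e1, e2))
    normal = normalize(cross(e1, e2))
    return normalize(tangent), normalize(bitangent), normal

def perturb(tbn, sample):
    """Rotate a decoded tangent-space sample into the triangle's space."""
    t, b, n = tbn
    x, y, z = sample
    return normalize(tuple(x * t[i] + y * b[i] + z * n[i] for i in range(3)))

# A triangle lying in the XZ plane, so its geometric normal is +Y.
tbn = triangle_tbn((0, 0, 0), (1, 0, 0), (0, 0, -1), (0, 0), (1, 0), (0, 1))
shading_normal = perturb(tbn, (0.0, 0.0, 1.0))
```

An unperturbed sample (0, 0, 1) reproduces the geometric normal of the triangle, while any other sample tilts it along the tangent and bitangent directions.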

It was later possible to perform normal mapping on high-end SGI workstations using multi-pass rendering and framebuffer operations[9] or on low-end PC hardware with some tricks using paletted textures.[10][11]

Normal mapping's popularity for real-time rendering is due to its good ratio of quality to processing requirements compared with other methods of producing similar effects.

The detail of a normal map can also be scaled down with distance (cf. mipmapping), meaning that more distant surfaces require less complex lighting simulation.
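As a rough illustration of reducing normal-map detail in the way mipmapping does, the sketch below builds one coarser level by averaging each 2x2 block of decoded normals and renormalizing the result. The function name `downsample_normals` is hypothetical, the input is assumed to be a grid of unit vectors with even dimensions, and hardware or tool-generated mipmaps may filter differently.

```python
import math

def downsample_normals(normals):
    """Halve a 2D grid of unit normals by averaging 2x2 blocks.

    `normals` is a list of rows, each row a list of (x, y, z) tuples.
    """
    height, width = len(normals), len(normals[0])
    out = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            block = [normals[y][x], normals[y][x + 1],
                     normals[y + 1][x], normals[y + 1][x + 1]]
            s = [sum(n[i] for n in block) for i in range(3)]
            length = math.sqrt(sum(c * c for c in s))
            if length > 0.0:
                row.append(tuple(c / length for c in s))
            else:
                # Assumed fallback: opposing normals cancel, so use the
                # unperturbed tangent-space normal.
                row.append((0.0, 0.0, 1.0))
        out.append(row)
    return out

# Two opposite tilts average out to a flat normal at the coarser level.
fine = [[(0.6, 0.0, 0.8), (-0.6, 0.0, 0.8)],
        [(0.6, 0.0, 0.8), (-0.6, 0.0, 0.8)]]
coarse = downsample_normals(fine)
```

The coarser level carries less surface variation, which is why fine bumps fade as a surface recedes from the viewer.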

Basic normal mapping can be implemented in any hardware that supports palettized textures.

The first game console to have specialized normal mapping hardware was the Sega Dreamcast.

The Nintendo 3DS supports normal mapping, as demonstrated by Resident Evil: Revelations and Metal Gear Solid 3: Snake Eater.