Numerical stability

Numerical stability is studied mainly in two contexts. One is numerical linear algebra, and the other is algorithms for solving ordinary and partial differential equations by discrete approximation.

In numerical linear algebra, the principal concern is instabilities caused by proximity to singularities of various kinds, such as very small or nearly colliding eigenvalues.
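As a rough illustration of what proximity to a singularity does, the sketch below solves a 2×2 linear system whose matrix is almost singular, twice, with right-hand sides that differ by about 1e-8. The matrix, the value of `eps`, and the helper `solve_2x2` are hypothetical choices made for this example, not taken from any particular library.

```python
def solve_2x2(a, b, c, d, r1, r2):
    """Solve [[a, b], [c, d]] @ [x, y] = [r1, r2] by Cramer's rule."""
    det = a * d - b * c
    x = (r1 * d - b * r2) / det
    y = (a * r2 - c * r1) / det
    return x, y

eps = 1e-8  # assumed distance from singularity; the determinant is about eps

# Same matrix [[1, 1], [1, 1 + eps]], two right-hand sides about 1e-8 apart.
sol_a = solve_2x2(1.0, 1.0, 1.0, 1.0 + eps, 2.0, 2.0)
sol_b = solve_2x2(1.0, 1.0, 1.0, 1.0 + eps, 2.0, 2.0 + 1e-8)

print("solution for r = (2, 2):       ", sol_a)
print("solution for r = (2, 2 + 1e-8):", sol_b)
```

The first solution is close to (2, 0) and the second close to (1, 1): a change of about 1e-8 in the data moves the answer by an amount of order 1, because the determinant in the denominator is itself of order 1e-8.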

On the other hand, in numerical algorithms for differential equations the concern is the growth of round-off errors and/or small fluctuations in initial data, which might cause a large deviation of the final answer from the exact solution.
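The growth of small errors can be sketched with the explicit (forward) Euler method applied to the test equation y' = −λy. The method, the value λ = 50, the step sizes, and the function name `euler_decay` are all assumptions chosen for this example. For this equation each Euler step multiplies the current value by (1 − hλ), so any perturbation is amplified whenever |1 − hλ| > 1.

```python
def euler_decay(lam, h, t_end, y0=1.0):
    """Integrate y' = -lam * y from t = 0 to t_end with explicit Euler steps of size h."""
    steps = round(t_end / h)
    y = y0
    for _ in range(steps):
        y += h * (-lam * y)  # one Euler step: y <- (1 - h*lam) * y
    return y

lam = 50.0  # hypothetical decay rate; the exact solution exp(-lam * t) decays rapidly

for h in (0.01, 0.05):
    base = euler_decay(lam, h, 1.0)
    perturbed = euler_decay(lam, h, 1.0, y0=1.0 + 1e-10)
    print(f"h = {h}: y(1) ~ {base:.3e}, effect of 1e-10 change in y0: {perturbed - base:.3e}")
```

With h = 0.01 the factor is 0.5 and both the solution and the perturbation die out. With h = 0.05 the factor is −1.5, so after 20 steps the computed value has magnitude of order 10^3 although the exact solution is nearly zero, and the initial perturbation has grown by the same factor.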

Calculations that can be proven not to magnify approximation errors are called numerically stable.

One of the common tasks of numerical analysis is to try to select algorithms which are robust – that is to say, do not produce a wildly different result for a very small change in the input data.
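A common textbook illustration of choosing between algorithms is the quadratic formula. The sketch below computes the roots of x² + bx + c = 0 in two mathematically equivalent ways; the coefficients and function names are hypothetical, and real, distinct roots are assumed. The naive version subtracts two nearly equal numbers when b² is much larger than 4c, which magnifies round-off error in the smaller root.

```python
import math

def roots_naive(b, c):
    """Both roots of x^2 + b*x + c = 0 from the textbook formula."""
    s = math.sqrt(b * b - 4.0 * c)
    return (-b - s) / 2.0, (-b + s) / 2.0

def roots_stable(b, c):
    """Same roots, arranged so that no nearly equal numbers are subtracted."""
    s = math.sqrt(b * b - 4.0 * c)
    q = -(b + math.copysign(s, b)) / 2.0  # b and copysign(s, b) share a sign
    return q, c / q                       # the product of the two roots equals c

b, c = 1e8, 1.0  # assumed coefficients; the small root is very close to -1e-8
naive_small = roots_naive(b, c)[1]
stable_small = roots_stable(b, c)[1]

print("naive  small root:", naive_small)
print("stable small root:", stable_small)
```

In double precision the naive small root is wrong already in its leading digits, while the rearranged version agrees with −1e-8 to nearly full precision.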

The following definitions of forward, backward, and mixed stability are often used in numerical linear algebra.

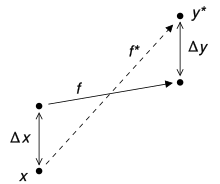

Consider the problem to be solved by the numerical algorithm as a function f mapping the data x to the solution y.

The forward error of the algorithm is the difference between the computed result y* and the exact solution y; in this case, Δy = y* − y.
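A minimal numerical sketch of this definition, assuming f is the square root, the data is x = 2, and an algorithm returned the rough value 1.4 (all hypothetical choices). The backward error computed alongside it uses the usual definition, which the text above does not spell out and is taken here as an assumption: the perturbation Δx of the data for which the computed result is exact, f(x + Δx) = y*.

```python
import math

def f(x):
    """The problem being solved: map the data x to the solution y = sqrt(x)."""
    return math.sqrt(x)

x = 2.0
y_exact = f(x)   # reference solution y
y_star = 1.4     # hypothetical result returned by some approximate algorithm

forward_error = y_star - y_exact   # delta_y = y* - y
# Assumed standard definition of backward error: f(x + delta_x) = y*,
# which for the square root gives delta_x = y*^2 - x.
backward_error = y_star ** 2 - x

print("forward error :", forward_error)
print("backward error:", backward_error)
```

Here the forward error is about −0.0142 and the backward error about −0.04: the value 1.4 is the exact square root of 1.96, a nearby input.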

In other contexts, for instance when solving differential equations, a different definition of numerical stability is used.

An algorithm for solving a linear evolutionary partial differential equation is stable if the total variation of the numerical solution at a fixed time remains bounded as the step size goes to zero.
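The total-variation criterion can be explored numerically. The sketch below uses the first-order upwind scheme for the linear advection equation u_t + u_x = 0 on a periodic unit interval with a square-pulse initial condition. The scheme, the pulse, the final time 0.6, and the two values of the ratio ν = Δt/Δx are assumptions chosen for the example. It measures the total variation at the fixed final time while the grid is refined.

```python
def total_variation(u):
    """Sum of |u[i+1] - u[i]| over a periodic grid."""
    n = len(u)
    return sum(abs(u[(i + 1) % n] - u[i]) for i in range(n))

def tv_at_time(n, nu, t_end=0.6):
    """Advect a square pulse with the upwind scheme; return the total variation at t_end.

    n is the number of grid cells and nu = dt / dx is held fixed under refinement.
    """
    dx = 1.0 / n
    dt = nu * dx
    steps = round(t_end / dt)
    u = [1.0 if n // 4 <= i < n // 2 else 0.0 for i in range(n)]
    for _ in range(steps):
        # u[i - 1] wraps around at i = 0, which gives the periodic boundary.
        u = [u[i] - nu * (u[i] - u[i - 1]) for i in range(n)]
    return total_variation(u)

for n in (40, 80, 160):
    print(n, tv_at_time(n, 0.8), tv_at_time(n, 1.5))
```

With ν = 0.8 each update is a weighted average of neighbouring values, and the total variation stays at or below its initial value of 2 on every grid. With ν = 1.5 it grows rapidly as the grid is refined, so the scheme is unstable in the sense described above.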

The Lax equivalence theorem states that an algorithm converges if it is consistent and stable (in this sense).

These results do not hold for nonlinear PDEs, where a general, consistent definition of stability is complicated by many properties absent in linear equations.

A few iterations of each scheme are calculated in table form below, with initial guesses x0 = 1.4 and x0 = 1.42.