Orthogonality principle

In statistics and signal processing, the orthogonality principle is a necessary and sufficient condition for the optimality of a Bayesian estimator.

Loosely stated, the orthogonality principle says that the error vector of the optimal estimator (in a mean square error sense) is orthogonal to any possible estimator.

The orthogonality principle is most commonly stated for linear estimators, but more general formulations are possible.

Since the principle is a necessary and sufficient condition for optimality, it can be used to find the minimum mean square error estimator.

The orthogonality principle is most commonly used in the setting of linear estimation.

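In this context, let $x$ be an unknown random vector which is to be estimated based on the observation vector $y$. One wishes to construct a linear estimator $\hat{x} = Hy + c$ for some matrix $H$ and vector $c$. Then, the orthogonality principle states that an estimator $\hat{x}$ achieves minimum mean square error if and only if

$$\operatorname{E}\{(\hat{x} - x)\, y^T\} = 0$$

and

$$\operatorname{E}\{\hat{x} - x\} = 0.$$

If $x$ and $y$ have zero mean, then it suffices to require the first condition.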
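As a sketch of how the two conditions pin down $H$ and $c$: the second condition gives $c = \operatorname{E}\{x\} - H\operatorname{E}\{y\}$, and substituting this into the first gives $H C_{yy} = C_{xy}$, where $C_{xy}$ is the cross-covariance of $x$ and $y$ and $C_{yy}$ is the covariance of $y$ (notation introduced here, assuming $C_{yy}$ is invertible). The snippet below uses NumPy and replaces the expectations with $1/N$ sample averages; the function name `lmmse_coefficients` and the synthetic data model are illustrative assumptions. It fits $H$ and $c$ and then checks that both orthogonality conditions hold on the same samples.

```python
import numpy as np

def lmmse_coefficients(x, y):
    """Fit x_hat = H y + c from samples (hypothetical helper).

    x: array of shape (dx, N), y: array of shape (dy, N); columns are
    joint realizations. 1/N sample moments stand in for expectations.
    """
    n = x.shape[1]
    mx = x.mean(axis=1, keepdims=True)
    my = y.mean(axis=1, keepdims=True)
    xc, yc = x - mx, y - my
    cxy = xc @ yc.T / n          # sample Cov(x, y), shape (dx, dy)
    cyy = yc @ yc.T / n          # sample Cov(y, y), shape (dy, dy)
    # Solve H C_yy = C_xy via C_yy H^T = C_xy^T (C_yy is symmetric).
    h = np.linalg.solve(cyy, cxy.T).T
    c = mx - h @ my              # from E{x_hat - x} = 0
    return h, c

# Assumed synthetic model: y is a noisy linear function of x.
rng = np.random.default_rng(0)
N = 10_000
x = rng.normal(size=(2, N)) + np.array([[1.0], [-2.0]])
A = np.array([[1.0, 0.5], [0.0, 2.0], [1.0, -1.0]])
y = A @ x + 0.3 * rng.normal(size=(3, N))

H, c = lmmse_coefficients(x, y)
err = H @ y + c - x              # estimation error x_hat - x
print(np.abs(err.mean(axis=1)).max())   # sample E{x_hat - x}, ~0
print(np.abs(err @ y.T / N).max())      # sample E{(x_hat - x) y^T}, ~0
```

Because the same sample moments are used to fit and to check, both printed quantities are zero up to floating-point rounding; with true expectations they would be exactly zero.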

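Suppose $x$ is a Gaussian random variable with mean $m$ and variance $\sigma_x^2$. Also suppose we observe a value $y = x + w$, where $w$ is Gaussian noise which is independent of $x$ and has mean 0 and variance $\sigma_w^2$. We wish to find a linear estimator $\hat{x} = hy + c$ minimizing the MSE. Substituting the expression $\hat{x} = hy + c$ into the two requirements of the orthogonality principle, we obtain

$$\begin{aligned}
0 &= \operatorname{E}\{(\hat{x} - x)\, y\} \\
  &= \operatorname{E}\{(hx + hw + c - x)(x + w)\} \\
  &= h(\sigma_x^2 + \sigma_w^2) + h m^2 + c m - \sigma_x^2 - m^2,
\end{aligned}$$

where the last step uses $\operatorname{E}\{x^2\} = \sigma_x^2 + m^2$, $\operatorname{E}\{w^2\} = \sigma_w^2$ and $\operatorname{E}\{xw\} = 0$, and

$$\begin{aligned}
0 &= \operatorname{E}\{\hat{x} - x\} \\
  &= \operatorname{E}\{hx + hw + c - x\} \\
  &= (h - 1) m + c.
\end{aligned}$$

Solving these two linear equations for $h$ and $c$ results in

$$h = \frac{\sigma_x^2}{\sigma_x^2 + \sigma_w^2}, \qquad c = \frac{\sigma_w^2}{\sigma_x^2 + \sigma_w^2}\, m,$$

so that the linear minimum mean square error estimator is given by

$$\hat{x} = \frac{\sigma_x^2}{\sigma_x^2 + \sigma_w^2}\, y + \frac{\sigma_w^2}{\sigma_x^2 + \sigma_w^2}\, m.$$

This estimator can be interpreted as a weighted average between the noisy measurements $y$ and the prior expected value $m$. If the noise variance $\sigma_w^2$ is low compared with the variance of the prior $\sigma_x^2$ (corresponding to a high SNR), then most of the weight is given to the measurements $y$, which are deemed more reliable than the prior information.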

Conversely, if the noise variance is relatively higher, then the estimate will be close to m, as the measurements are not reliable enough to outweigh the prior information.
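The closed-form weights above can be checked with a short simulation. This is a minimal sketch in plain Python; the helper name `scalar_lmmse` and the parameter values are illustrative assumptions. It evaluates $h$ and $c$ at a high and a low SNR, then draws samples of $x$ and $w$ and confirms that the sample versions of $\operatorname{E}\{(\hat{x} - x)\,y\}$ and $\operatorname{E}\{\hat{x} - x\}$ are close to zero.

```python
import random

def scalar_lmmse(m, var_x, var_w):
    """Return (h, c) for x_hat = h*y + c when y = x + w (hypothetical helper)."""
    h = var_x / (var_x + var_w)
    c = var_w / (var_x + var_w) * m
    return h, c

# High SNR (small var_w): nearly all weight goes to the measurement y.
print(scalar_lmmse(m=5.0, var_x=4.0, var_w=0.01))
# Low SNR (large var_w): the estimate stays close to the prior mean m.
print(scalar_lmmse(m=5.0, var_x=4.0, var_w=100.0))

# Monte Carlo check of the two orthogonality conditions.
random.seed(1)
m, var_x, var_w = 5.0, 4.0, 1.0
h, c = scalar_lmmse(m, var_x, var_w)   # h = 0.8, c = 1.0
n = 200_000
sum_err = 0.0     # accumulates x_hat - x
sum_err_y = 0.0   # accumulates (x_hat - x) * y
for _ in range(n):
    x = random.gauss(m, var_x ** 0.5)
    y = x + random.gauss(0.0, var_w ** 0.5)
    e = h * y + c - x
    sum_err += e
    sum_err_y += e * y
print(sum_err / n, sum_err_y / n)  # both close to 0 (Monte Carlo noise only)
```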

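Finally, note that because the variables $x$ and $y$ are jointly Gaussian, the minimum MSE estimator is linear. Therefore, in this case, the estimator above minimizes the MSE among all estimators, not only among linear estimators.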

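Let $V$ be a Hilbert space of random variables with an inner product defined by $\langle x, y \rangle = \operatorname{E}\{x^H y\}$. Suppose $W$ is a closed subspace of $V$, representing the space of all possible estimators. One wishes to find a vector $\hat{x} \in W$ which will approximate a vector $x \in V$.

More precisely, one would like to minimize the mean squared error (MSE) $\operatorname{E}\|x - \hat{x}\|^2$ between $\hat{x}$ and $x$.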

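In the special case of linear estimators described above, the space $V$ is the set of all functions of $x$ and $y$, while $W$ is the set of linear estimators, i.e., estimators of the form $Hy + c$, which depend on $y$ only.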

Other settings which can be formulated in this way include the subspace of causal linear filters and the subspace of all (possibly nonlinear) estimators.

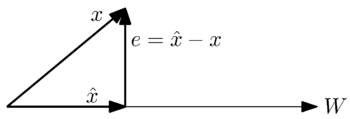

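The problem can be visualized geometrically in the simple case where $W$ is a one-dimensional subspace: we want to find the closest approximation to the vector $x$ by a vector $\hat{x}$ in the space $W$. From the geometric interpretation, it is intuitive that the best approximation, or smallest error, occurs when the error vector $e = x - \hat{x}$ is orthogonal to the vectors in $W$.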
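The one-dimensional picture can be reproduced with ordinary vectors. In this sketch the Euclidean dot product on $\mathbb{R}^2$ stands in for $\operatorname{E}\{x^H y\}$ (an assumption made for illustration), and the helper names are hypothetical. Projecting $x$ onto the line spanned by $p$ gives $\hat{x} = \frac{\langle p, x \rangle}{\langle p, p \rangle}\, p$; the error is orthogonal to $p$, and no other point on the line is closer to $x$.

```python
def dot(a, b):
    """Euclidean inner product, standing in for E{a^H b} (real case)."""
    return sum(ai * bi for ai, bi in zip(a, b))

def project_onto_line(x, p):
    """Return (x_hat, alpha): the point alpha * p on span{p} closest to x."""
    alpha = dot(p, x) / dot(p, p)
    return [alpha * pi for pi in p], alpha

def sq_error(x, t, p):
    """Squared distance ||x - t p||^2 for a candidate point t * p."""
    return sum((xi - t * pi) ** 2 for xi, pi in zip(x, p))

x = [3.0, 1.0]
p = [1.0, 1.0]
x_hat, alpha = project_onto_line(x, p)
e = [xi - hi for xi, hi in zip(x, x_hat)]
print(x_hat, e, dot(e, p))   # [2.0, 2.0] [1.0, -1.0] 0.0

# Any other point t * p on the line is at least as far from x.
best = sq_error(x, alpha, p)
print(all(sq_error(x, t / 10, p) >= best for t in range(-50, 51)))  # True
```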

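More precisely, the general orthogonality principle states the following: given a closed subspace $W$ of estimators within a Hilbert space $V$ and an element $x$ in $V$, an element $\hat{x} \in W$ achieves minimum MSE among all elements in $W$ if and only if $\operatorname{E}\{(x - \hat{x})\, y^T\} = 0$ for all $y \in W$.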

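Stated in such a manner, this principle is simply a statement of the Hilbert projection theorem. Nevertheless, the extensive use of this result in signal processing has resulted in the name "orthogonality principle."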

The following is one way to find the minimum mean square error estimator by using the orthogonality principle.

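We want to approximate a vector $x$ by $x = \hat{x} + e$, where $\hat{x} = \sum_i c_i p_i$ is the approximation of $x$ as a linear combination of vectors in the subspace $W$ spanned by $p_1, p_2, \ldots$. The goal is therefore to solve for the coefficients $c_i$, so that the approximation can be written in known terms.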

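By the orthogonality principle, the squared norm of the error vector, $\|e\|^2$, is minimized when, for all $j$,

$$\Big\langle p_j,\; x - \sum_i c_i p_i \Big\rangle = 0.$$

Developing this equation, we obtain

$$\langle p_j, x \rangle = \Big\langle p_j, \sum_i c_i p_i \Big\rangle = \sum_i c_i \langle p_j, p_i \rangle.$$

If there is a finite number $n$ of vectors $p_i$, one can write this equation in matrix form as

$$\begin{bmatrix} \langle p_1, x \rangle \\ \vdots \\ \langle p_n, x \rangle \end{bmatrix}
= \begin{bmatrix} \langle p_1, p_1 \rangle & \cdots & \langle p_1, p_n \rangle \\ \vdots & \ddots & \vdots \\ \langle p_n, p_1 \rangle & \cdots & \langle p_n, p_n \rangle \end{bmatrix}
\begin{bmatrix} c_1 \\ \vdots \\ c_n \end{bmatrix}.$$

Assuming the $p_i$ are linearly independent, the Gramian matrix $G$, with entries $G_{ji} = \langle p_j, p_i \rangle$, can be inverted to obtain

$$\begin{bmatrix} c_1 \\ \vdots \\ c_n \end{bmatrix} = G^{-1} \begin{bmatrix} \langle p_1, x \rangle \\ \vdots \\ \langle p_n, x \rangle \end{bmatrix},$$

thus providing an expression for the coefficients $c_i$ of the minimum mean square error estimator.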
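The Gram-matrix procedure can be sketched numerically. Here each random variable is represented by $N$ joint realizations, and $\langle a, b \rangle = \operatorname{E}\{a^H b\}$ is approximated by a sample mean (an assumption for illustration); `inner`, `gram_coefficients` and the data model are hypothetical. Including the constant $1$ among the $p_i$ lets the estimator have an offset term, as in the estimator $Hy + c$ of the linear setting.

```python
import numpy as np

def inner(a, b):
    """Sample approximation of <a, b> = E{a^H b} for scalar random variables.

    a, b: 1-D arrays holding N joint realizations.
    """
    return np.mean(np.conj(a) * b)

def gram_coefficients(x, basis):
    """Solve G c = b with G[j, i] = <p_j, p_i> and b[j] = <p_j, x>."""
    n = len(basis)
    G = np.array([[inner(basis[j], basis[i]) for i in range(n)]
                  for j in range(n)])
    b = np.array([inner(basis[j], x) for j in range(n)])
    return np.linalg.solve(G, b)   # avoids forming G^{-1} explicitly

# Assumed data model: x depends linearly on two observations plus noise.
rng = np.random.default_rng(42)
N = 50_000
y1 = rng.normal(size=N)
y2 = rng.normal(size=N)
x = 2.0 * y1 - 0.5 * y2 + 3.0 + 0.1 * rng.normal(size=N)

basis = [y1, y2, np.ones(N)]        # p_1, p_2, and p_3 = 1 (constant)
c = gram_coefficients(x, basis)
x_hat = sum(ci * p for ci, p in zip(c, basis))
e = x - x_hat
print(c)                             # close to [2.0, -0.5, 3.0]
print([inner(p, e) for p in basis])  # each ~0: error orthogonal to every p_j
```

Using `np.linalg.solve` rather than explicitly inverting $G$ is a common numerical choice; both give the same coefficients when $G$ is well conditioned.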