Markov decision process

[1] Originating from operations research in the 1950s,[2][3] MDPs have since gained recognition in a variety of fields, including ecology, economics, healthcare, telecommunications and reinforcement learning.

The MDP framework is designed to provide a simplified representation of key elements of artificial intelligence challenges.

These elements encompass the understanding of cause and effect, the management of uncertainty and nondeterminism, and the pursuit of explicit goals.

that will maximize some cumulative function of the random rewards, typically the expected discounted sum over a potentially infinite horizon: where

A lower discount factor motivates the decision maker to favor taking actions early, rather than postpone them indefinitely.

Because of the Markov property, it can be shown that the optimal policy is a function of the current state, as assumed above.

In such cases, a simulator can be used to model the MDP implicitly by providing samples from the transition distributions.

In this manner, trajectories of states, actions, and rewards, often called episodes may be produced.

[5] (Note that this is a different meaning from the term generative model in the context of statistical classification.)

Compared to an episodic simulator, a generative model has the advantage that it can yield data from any state, not only those encountered in a trajectory.

The type of model available for a particular MDP plays a significant role in determining which solution algorithms are appropriate.

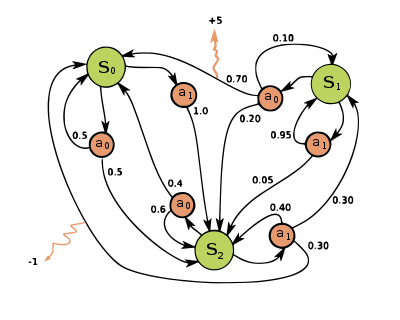

In this example, we have Solutions for MDPs with finite state and action spaces may be found through a variety of methods such as dynamic programming.

The algorithms in this section apply to MDPs with finite state and action spaces and explicitly given transition probabilities and reward functions, but the basic concepts may be extended to handle other problem classes, for example using function approximation.

will contain the discounted sum of the rewards to be earned (on average) by following that solution from state

As long as no state is permanently excluded from either of the steps, the algorithm will eventually arrive at the correct solution.

[7] In value iteration (Bellman 1957) harv error: no target: CITEREFBellman1957 (help), which is also called backward induction, the

Lloyd Shapley's 1953 paper on stochastic games included as a special case the value iteration method for MDPs,[8] but this was recognized only later on.

[10]) Instead of repeating step two to convergence, it may be formulated and solved as a set of linear equations.

[clarification needed] Thus, repeating step two to convergence can be interpreted as solving the linear equations by relaxation.

In this variant, the steps are preferentially applied to states which are in some way important – whether based on the algorithm (there were large changes in

Algorithms for finding optimal policies with time complexity polynomial in the size of the problem representation exist for finite MDPs.

In practice, online planning techniques such as Monte Carlo tree search can find useful solutions in larger problems, and, in theory, it is possible to construct online planning algorithms that can find an arbitrarily near-optimal policy with no computational complexity dependence on the size of the state space.

When this assumption is not true, the problem is called a partially observable Markov decision process or POMDP.

The only difference with the standard case stays in the fact that, due to the continuous nature of the time variable, the sum is replaced by an integral: where

Reinforcement learning is an interdisciplinary area of machine learning and optimal control that has, as main objective, finding an approximately optimal policy for MDPs where transition probabilities and rewards are unknown.

[19] Reinforcement learning can solve Markov-Decision processes without explicit specification of the transition probabilities which are instead needed to perform policy iteration.

In this setting, transition probabilities and rewards must be learned from experience, i.e. by letting an agent interact with the MDP for a given number of steps.

and then continuing optimally (or according to whatever policy one currently has): While this function is also unknown, experience during learning is based on

[22] At each time step t = 0,1,2,3,..., the automaton reads an input from its environment, updates P(t) to P(t + 1) by A, randomly chooses a successor state according to the probabilities P(t + 1) and outputs the corresponding action.

There are two main streams — one focuses on maximization problems from contexts like economics, using the terms action, reward, value, and calling the discount factor β or γ, while the other focuses on minimization problems from engineering and navigation[citation needed], using the terms control, cost, cost-to-go, and calling the discount factor α.