Probability theory

Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion).

Two major results in probability theory describing the behaviour of random events are the law of large numbers and the central limit theorem.

As a mathematical foundation for statistics, probability theory is essential to many human activities that involve quantitative analysis of data.[1]

Methods of probability theory also apply to descriptions of complex systems given only partial knowledge of their state, as in statistical mechanics or sequential estimation.[2]

The modern mathematical theory of probability has its roots in attempts to analyze games of chance by Gerolamo Cardano in the sixteenth century, and by Pierre de Fermat and Blaise Pascal in the seventeenth century (for example, the "problem of points").[4]

In the nineteenth century, Pierre-Simon Laplace completed what is now considered the classical definition of probability.[5]

Initially, probability theory mainly considered discrete events, and its methods were mainly combinatorial.

Eventually, analytical considerations compelled the incorporation of continuous variables into the theory.

This culminated in modern probability theory, on foundations laid by Andrey Nikolaevich Kolmogorov.

This axiomatic framework became the largely undisputed basis for modern probability theory, but alternatives exist, such as Bruno de Finetti's adoption of finite rather than countable additivity.
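For reference, the axioms in their usual modern form can be written as follows, where (Ω, 𝓕, P) is a probability space: a sample space, a σ-algebra of events, and a probability measure. The notation is the standard textbook one and is assumed here rather than taken from this text. The third line is the countable additivity that de Finetti replaced with finite additivity.

```latex
\begin{aligned}
&P(E) \ge 0 \quad \text{for every } E \in \mathcal{F}, \\
&P(\Omega) = 1, \\
&P\Big(\bigcup_{i=1}^{\infty} E_i\Big) = \sum_{i=1}^{\infty} P(E_i)
  \quad \text{for pairwise disjoint } E_1, E_2, \ldots \in \mathcal{F}.
\end{aligned}
```

Finite additivity asks for the last identity only for finitely many disjoint events, P(E₁ ∪ ⋯ ∪ Eₙ) = P(E₁) + ⋯ + P(Eₙ).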

The measure-theoretic treatment of probability covers discrete distributions, continuous distributions, mixtures of the two, and more.

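For example, when a single die is rolled, the sample space is {1,2,3,4,5,6}, and the event "an odd number is rolled" is the subset {1,3,5}, which is an element of the power set of the sample space.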
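On a finite sample space every subset can serve as an event, so the collection of events is the whole power set. The following is a minimal Python sketch for a six-sided die; the names `SAMPLE_SPACE` and `power_set` are illustrative, not part of any standard library.

```python
from itertools import chain, combinations

# Sample space for a single roll of a six-sided die.
SAMPLE_SPACE = frozenset(range(1, 7))

def power_set(s):
    """Return every subset of s as a frozenset (the events of a finite sample space)."""
    items = sorted(s)
    return {
        frozenset(c)
        for c in chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    }

events = power_set(SAMPLE_SPACE)
odd = frozenset({1, 3, 5})  # the event "an odd number is rolled"

print(len(events))    # 64, i.e. 2**6 events, including the empty set and the whole space
print(odd in events)  # True
```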

A random variable is a function that assigns to each elementary event in the sample space a real number.[8]

In the case of a die, the assignment of a number to each elementary event can be done using the identity function: the outcome showing k pips is mapped to the number k.
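Because a random variable is just a function on the sample space, it can be sketched directly as a Python function. The example below assumes a fair die (all six outcomes equally likely) and defines the identity random variable `X` together with a second, hypothetical random variable `Y` that indicates an odd roll; `distribution` is an illustrative helper that tabulates the probability of each value.

```python
from collections import Counter
from fractions import Fraction

SAMPLE_SPACE = range(1, 7)  # faces of a fair die, each with probability 1/6

def X(outcome):
    """Identity random variable: the face showing k pips is mapped to the number k."""
    return outcome

def Y(outcome):
    """A second (hypothetical) random variable: 1 if the roll is odd, else 0."""
    return outcome % 2

def distribution(rv):
    """Distribution of rv under equally likely outcomes: value -> probability."""
    counts = Counter(rv(w) for w in SAMPLE_SPACE)
    n = len(SAMPLE_SPACE)
    return {value: Fraction(c, n) for value, c in sorted(counts.items())}

print(distribution(X))  # each of 1..6 has probability 1/6
print(distribution(Y))  # {0: Fraction(1, 2), 1: Fraction(1, 2)}
```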

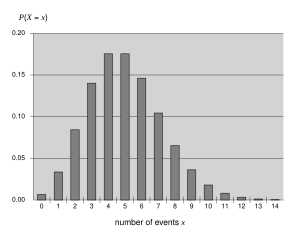

Discrete probability theory deals with events that occur in countable sample spaces.

Examples: throwing dice, experiments with decks of cards, random walks, and tossing coins.

Classical definition: Initially, the probability of an event was defined as the number of outcomes favorable to the event divided by the total number of possible outcomes in an equiprobable sample space (see Classical definition of probability).
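The classical definition amounts to counting, which a short Python sketch can make concrete. It assumes equally likely outcomes (one fair die, or two fair dice as 36 ordered pairs); the helper name `classical_probability` is hypothetical, and exact fractions are used so that no rounding occurs.

```python
from fractions import Fraction
from itertools import product

def classical_probability(event, sample_space):
    """Favorable outcomes over total outcomes; valid only if outcomes are equally likely."""
    outcomes = list(sample_space)
    favorable = sum(1 for w in outcomes if event(w))
    return Fraction(favorable, len(outcomes))

one_die = range(1, 7)
two_dice = list(product(range(1, 7), repeat=2))  # 36 ordered pairs

print(classical_probability(lambda w: w % 2 == 1, one_die))        # 1/2
print(classical_probability(lambda w: w[0] + w[1] == 7, two_dice))  # 1/6
```

The equiprobability assumption is essential: counting the 11 possible sums 2 to 12 as equally likely would give the wrong answer 1/11 for a sum of 7.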

Modern definition: The modern definition starts with a finite or countable set called the sample space, denoted by Ω, which corresponds to the set of all possible outcomes in the classical sense.
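In the usual formulation of the discrete case, each outcome x of the sample space is assigned a value f(x) in [0, 1], the values sum to 1, and an event E (any subset of the sample space) has probability P(E) equal to the sum of f(x) over x in E. The Python sketch below checks these conditions for a hypothetical loaded die; the weights and the helper names are made up for illustration, and the name `f` follows common textbook notation.

```python
from fractions import Fraction

# Hypothetical loaded die: face 6 is three times as likely as each other face.
f = {1: Fraction(1, 8), 2: Fraction(1, 8), 3: Fraction(1, 8),
     4: Fraction(1, 8), 5: Fraction(1, 8), 6: Fraction(3, 8)}

def is_probability_mass_function(f):
    """Check 0 <= f(x) <= 1 for every outcome and that the values sum to exactly 1."""
    return all(0 <= p <= 1 for p in f.values()) and sum(f.values()) == 1

def prob(event, f):
    """P(E): the sum of f(x) over the outcomes x in the event E."""
    return sum((f[x] for x in event), Fraction(0))

print(is_probability_mass_function(f))  # True
print(prob({2, 4, 6}, f))               # 5/8
```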

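Modern definition: If the sample space of a random variable X is the set of real numbers (ℝ) or a subset thereof, then a function called the cumulative distribution function (CDF) F exists, defined by F(x) = P(X ≤ x).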
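For a real-valued random variable X, the cumulative distribution function is F(x) = P(X ≤ x). As an illustrative sketch, with both distributions chosen for the example rather than taken from the text, the CDF of a fair die is a right-continuous step function, while the CDF of an exponential distribution with rate λ, F(x) = 1 − e^(−λx) for x ≥ 0, is continuous. The function names are hypothetical.

```python
import math
from fractions import Fraction

def die_cdf(x):
    """F(x) = P(X <= x) for a fair six-sided die: a right-continuous step function."""
    k = math.floor(x)
    if k < 1:
        return Fraction(0)
    return Fraction(min(k, 6), 6)

def exponential_cdf(x, rate=1.0):
    """F(x) = 1 - exp(-rate * x) for x >= 0, and 0 for x < 0."""
    return 1.0 - math.exp(-rate * x) if x >= 0 else 0.0

print(die_cdf(0.5), die_cdf(3), die_cdf(3.7), die_cdf(10))  # 0 1/2 1/2 1
print(exponential_cdf(math.log(2)))  # 0.5, up to floating-point rounding
```

Both functions are non-decreasing and run from 0 to 1; the die's CDF jumps by 1/6 at each integer from 1 to 6, which is exactly where its probability mass sits.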

The utility of the measure-theoretic treatment of probability is that it unifies the discrete and the continuous cases, and makes the difference a question of which measure is used.

If the σ-algebra of events is the Borel σ-algebra on the set of real numbers, then every CDF determines a unique probability measure on it, and vice versa.
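One standard way to state this correspondence uses half-open intervals, which generate the Borel σ-algebra. The notation below (F for the CDF, P for the probability measure on the Borel sets) is assumed for this sketch:

```latex
P\big((a, b]\big) = F(b) - F(a) \quad \text{for all } a < b,
\qquad
F(x) = P\big((-\infty, x]\big).
```

Conversely, any non-decreasing, right-continuous F with F(x) → 0 as x → −∞ and F(x) → 1 as x → +∞ determines such a measure P.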

For example, to study Brownian motion, probability is defined on a space of functions.

Discrete densities are usually defined as the Radon–Nikodym derivative of the probability distribution with respect to a counting measure over the set of all possible outcomes.

Densities for absolutely continuous distributions are usually defined as the Radon–Nikodym derivative with respect to the Lebesgue measure.
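Written out in standard notation (assumed here: P the distribution, μ the reference measure, f = dP/dμ the density), the density recovers probabilities by integration against μ. That integral reduces to a sum for counting measure and to an ordinary integral for Lebesgue measure:

```latex
P(A) = \int_A f \, d\mu
\;=\;
\begin{cases}
\displaystyle \sum_{x \in A} f(x), & \mu = \text{counting measure},\\[1ex]
\displaystyle \int_A f(x)\, dx, & \mu = \text{Lebesgue measure}.
\end{cases}
```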

Certain random variables occur very often in probability theory because they describe many natural or physical processes well.

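Common intuition suggests that if a fair coin is tossed many times, roughly half of the tosses will come up heads, and that this proportion gets closer to one half as the number of tosses grows. Modern probability theory provides a formal version of this intuitive idea, known as the law of large numbers.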

Since it links theoretically derived probabilities to their actual frequency of occurrence in the real world, the law of large numbers is considered a pillar in the history of statistical theory and has had widespread influence.[9]

The law of large numbers (LLN) states that the sample average (X₁ + ⋯ + Xₙ)/n of a sequence of independent and identically distributed random variables X₁, X₂, ... converges towards their common expectation μ, provided that the expectation of |X₁| is finite.

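The weak and the strong law of large numbers differ in the form of convergence of random variables they assert: convergence in probability for the weak law, and almost sure convergence for the strong law.[10]

It follows from the LLN that if an event of probability p is observed repeatedly during independent experiments, the ratio of the observed frequency of that event to the total number of repetitions converges towards p. For example, if Y₁, Y₂, ... are independent Bernoulli random variables taking the value 1 with probability p and 0 with probability 1 − p, then E(Yᵢ) = p for all i, so the sample average (Y₁ + ⋯ + Yₙ)/n converges to p almost surely.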
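A quick simulation illustrates, but of course does not prove, the frequency statement. It runs independent Bernoulli trials with success probability p, set to 0.3 here as an arbitrary choice, using Python's standard `random` module with a fixed seed. The function name `running_frequency` is hypothetical.

```python
import random

def running_frequency(p, n, seed=0):
    """Simulate n independent Bernoulli(p) trials; return the fraction of successes after each trial."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    successes = 0
    freqs = []
    for i in range(1, n + 1):
        successes += rng.random() < p  # a True comparison counts as 1
        freqs.append(successes / i)
    return freqs

freqs = running_frequency(p=0.3, n=100_000)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, freqs[n - 1])  # the observed frequency drifts towards p = 0.3
```

The early frequencies can be far from 0.3; the typical deviation after n trials shrinks roughly like the square root of p(1 − p)/n.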

For some random variables the convergence described by the central limit theorem is fast, for example for distributions from the exponential family with finite first, second, and third moments; on the other hand, for some heavy-tailed and fat-tailed random variables it works very slowly or may not work at all, and in such cases one may use the Generalized Central Limit Theorem (GCLT).
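A rough simulation can contrast the two cases. It assumes the exponential distribution with rate 1 as a light-tailed example and the standard Cauchy distribution as a heavy-tailed one. Both choices are made for this sketch, and the Cauchy case is extreme: it has no finite mean, so neither the classical central limit theorem nor the LLN applies. The helper names are hypothetical. The Cauchy draws use the inverse-CDF formula tan(π(U − 1/2)), because Python's `random` module has no Cauchy sampler.

```python
import math
import random
import statistics

def sample_means(draw, n, reps, rng):
    """Return reps independent sample means, each computed from n draws of draw(rng)."""
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(reps)]

def exponential(rng):
    return rng.expovariate(1.0)  # mean 1, variance 1, all moments finite

def cauchy(rng):
    return math.tan(math.pi * (rng.random() - 0.5))  # standard Cauchy: no finite mean

rng = random.Random(1)

# Light tails: standardized means of n = 100 exponentials should be close to N(0, 1),
# so about 95% of them should fall in [-1.96, 1.96].
n = 100
z = [(m - 1.0) * math.sqrt(n) for m in sample_means(exponential, n, 2000, rng)]
coverage = sum(abs(v) <= 1.96 for v in z) / len(z)

# Heavy tails: the mean of n standard Cauchy draws is again standard Cauchy,
# so its spread (here the interquartile range, about 2) does not shrink as n grows.
q = statistics.quantiles(sample_means(cauchy, 500, 1000, rng), n=4)
cauchy_iqr = q[2] - q[0]

print(round(coverage, 3), round(cauchy_iqr, 2))
```

For comparison, the interquartile range of the mean of 500 exponential draws would be only about 0.06, so averaging concentrates the light-tailed case but leaves the Cauchy case as spread out as a single draw.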