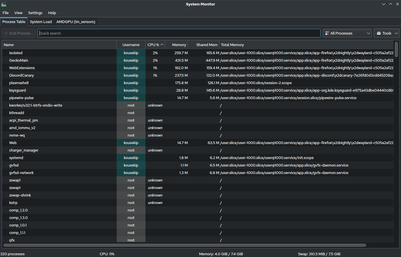

Process (computing)

Multitasking is a method to allow multiple processes to share processors (CPUs) and other system resources.

Depending on the operating system implementation, switches could be performed when tasks initiate and wait for completion of input/output operations, when a task voluntarily yields the CPU, on hardware interrupts, and when the operating system scheduler decides that a process has expired its fair share of CPU time (e.g, by the Completely Fair Scheduler of the Linux kernel).

A common form of multitasking is provided by CPU's time-sharing that is a method for interleaving the execution of users' processes and threads, and even of independent kernel tasks – although the latter feature is feasible only in preemptive kernels such as Linux.

Preemption has an important side effect for interactive processes that are given higher priority with respect to CPU bound processes, therefore users are immediately assigned computing resources at the simple pressing of a key or when moving a mouse.

In time-sharing systems, context switches are performed rapidly, which makes it seem like multiple processes are being executed simultaneously on the same processor.

The operating system may also provide mechanisms for inter-process communication to enable processes to interact in safe and predictable ways.

Not all parts of an executing program and its data have to be in physical memory for the associated process to be active.

Another example is a task that has been decomposed into cooperating but partially independent processes which can run simultaneously (i.e., using concurrency, or true parallelism – the latter model is a particular case of concurrent execution and is feasible whenever multiple CPU cores are available for the processes that are ready to run).

It is even possible for two or more processes to be running on different machines that may run different operating system (OS), therefore some mechanisms for communication and synchronization (called communications protocols for distributed computing) are needed (e.g., the Message Passing Interface {MPI}).

At first, more than one program ran on a single processor, as a result of underlying uniprocessor computer architecture, and they shared scarce and limited hardware resources; consequently, the concurrency was of a serial nature.

Scheduling , Preemption , Context Switching