Random-access memory

The use of semiconductor RAM dates back to 1965, when IBM introduced the monolithic (single-chip) 16-bit SP95 SRAM chip for its System/360 Model 95 computer, and Toshiba used bipolar DRAM cells for its 180-bit Toscal BC-1411 electronic calculator, both based on bipolar transistors.

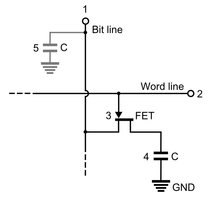

[4] In 1966, Dr. Robert Dennard invented the modern DRAM architecture, in which each capacitor is paired with a single MOS transistor.

Early computers used relays, mechanical counters[6] or delay lines for main memory functions.

Latches built out of triode vacuum tubes, and later, out of discrete transistors, were used for smaller and faster memories such as registers.

Since the electron beam of the CRT could read and write the spots on the tube in any order, memory was random access.

Since every ring had a combination of address wires to select and read or write it, access to any memory location in any sequence was possible.

[10] Integrated bipolar static random-access memory (SRAM) was invented by Robert H. Norman at Fairchild Semiconductor in 1963.

Data was stored in the tiny capacitance of each transistor and had to be refreshed every few milliseconds, before the charge could leak away.

[22] In 1966, Robert Dennard invented the modern DRAM architecture, in which there is a single MOS transistor per capacitor.

[18] In 1967, Dennard filed a patent under IBM for a single-transistor DRAM memory cell, based on MOS technology.

[23] The first commercial DRAM IC chip was the Intel 1103, which was manufactured on an 8 μm MOS process with a capacity of 1 kbit, and was released in 1970.

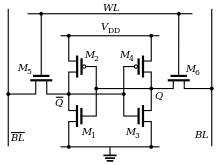

In SRAM, a bit of data is stored in the state of a memory cell, typically built from six MOSFETs.

This form of RAM is more expensive to produce, but is generally faster and requires less dynamic power than DRAM.

Both static and dynamic RAM are considered volatile, as their state is lost or reset when power is removed from the system.

In optical storage, the term DVD-RAM is somewhat of a misnomer since it is not random access; it behaves much like a hard disk drive, if somewhat slower.

Because of this refresh process, DRAM uses more power, but it can achieve greater storage densities and lower unit costs compared to SRAM.

Even within a hierarchy level such as DRAM, the specific row, column, bank, rank, channel, or interleave organization of the components makes the access time variable, although not to the extent that access time to rotating storage media or a tape is variable.
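As a rough illustration of why the location within DRAM affects access time, the following sketch splits an address into channel, rank, bank, row, and column fields and labels each access as a row-buffer hit or miss. The field widths, the bit ordering, and the names `FIELDS`, `decode`, and `classify` are hypothetical choices made for this example; real memory controllers use their own, often hashed, mappings.

```python
from typing import NamedTuple

# Hypothetical field widths in bits, lowest-order field first.
# These are assumptions for illustration, not a real controller's mapping.
FIELDS = [("column", 10), ("channel", 1), ("bank", 3), ("rank", 1), ("row", 15)]


class DramAddress(NamedTuple):
    channel: int
    rank: int
    bank: int
    row: int
    column: int


def decode(addr: int) -> DramAddress:
    """Split an address into DRAM coordinates according to FIELDS."""
    parts = {}
    for name, width in FIELDS:
        parts[name] = addr & ((1 << width) - 1)  # take the low `width` bits
        addr >>= width                           # then move to the next field
    return DramAddress(**parts)


def classify(addresses):
    """Label each access as a row-buffer 'hit' or 'miss'.

    Each (channel, rank, bank) keeps one row open. An access to a different
    row in the same bank has to close that row and open another one, which
    is the slower case.
    """
    open_rows = {}
    result = []
    for addr in addresses:
        a = decode(addr)
        key = (a.channel, a.rank, a.bank)
        result.append("hit" if open_rows.get(key) == a.row else "miss")
        open_rows[key] = a.row
    return result
```

Under this assumed mapping, two accesses that differ only in their column bits land in the same open row, while accesses that alternate between rows of one bank miss every time.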

As suggested above, smaller amounts of RAM (mostly SRAM) are also integrated in the CPU and other ICs on the motherboard, as well as in hard drives, CD-ROMs, and several other parts of the computer system.

In addition to serving as temporary storage and working space for the operating system and applications, RAM is used in numerous other ways.

Most modern operating systems employ a method of extending RAM capacity, known as "virtual memory".

Excessive use of this mechanism results in thrashing and generally hampers overall system performance, mainly because hard drives are far slower than RAM.
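The effect can be illustrated with a small simulation. The sketch below counts page faults for a program that repeatedly cycles through five pages, once with enough physical frames and once with one frame too few. The least-recently-used (LRU) replacement policy, the workload, and the function name `count_page_faults` are assumptions chosen for this example; actual operating systems use more elaborate policies.

```python
from collections import OrderedDict


def count_page_faults(reference_string, num_frames):
    """Count page faults under LRU replacement with a fixed number of frames."""
    frames = OrderedDict()  # keys are resident pages, least recently used first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)        # mark as most recently used
        else:
            faults += 1                     # page must be fetched from disk
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None
    return faults


# A hypothetical program that cycles through 5 pages, 20 times over.
workload = [page for _ in range(20) for page in range(5)]

faults_enough = count_page_faults(workload, num_frames=5)  # working set fits
faults_short = count_page_faults(workload, num_frames=4)   # one frame too few
```

With five frames only the first touch of each page faults. With four frames, this access pattern evicts each page just before it is needed again, so every access goes to the much slower backing store.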

Sometimes, the contents of a relatively slow ROM chip are copied to read/write memory to allow for shorter access times.

The ROM chip is then disabled while the initialized memory locations are switched in on the same block of addresses (often write-protected).

Originally, PCs contained less than 1 mebibyte of RAM, which often had a response time of 1 CPU clock cycle, meaning that it required 0 wait states.

Constructing a memory unit of many gibibytes with a response time of one clock cycle is difficult or impossible.

Today's CPUs often still have a mebibyte of zero-wait-state cache memory, but it resides on the same chip as the CPU cores due to the bandwidth limitations of chip-to-chip communication.

Third, for certain applications, traditional serial architectures are becoming less efficient as processors get faster (due to the so-called von Neumann bottleneck), further undercutting any gains that frequency increases might otherwise buy.

In addition, partly due to limitations in the means of producing inductance within solid state devices, resistance-capacitance (RC) delays in signal transmission are growing as feature sizes shrink, imposing an additional bottleneck that frequency increases do not address. The RC delays in signal transmission were also noted in "Clock Rate versus IPC: The End of the Road for Conventional Microarchitectures"[38], which projected a maximum of 12.5% average annual CPU performance improvement between 2000 and 2014.
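To put the projected rate in perspective, the short calculation below compounds 12.5% per year over the 2000 to 2014 period. Treating the figure as a constant rate compounded annually is an assumption made for this example, and the function name `cumulative_improvement` is hypothetical.

```python
def cumulative_improvement(annual_rate, years):
    """Total improvement factor from compounding a fixed annual rate."""
    return (1 + annual_rate) ** years


annual_rate = 0.125    # 12.5% per year, the projected maximum
years = 2014 - 2000    # length of the projection period

total = cumulative_improvement(annual_rate, years)
```

Under these assumptions the total comes to a little over a fivefold improvement across the whole period.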

A different concept is the processor-memory performance gap, which can be addressed by 3D integrated circuits that reduce the distance between the logic and memory aspects that are further apart in a 2D chip.

[42] Solid-state drives have continued to increase in speed, from ~400 MB/s via SATA3 in 2012 up to ~7 GB/s via NVMe/PCIe in 2024, closing the gap between RAM and hard disk speeds, although RAM continues to be an order of magnitude faster, with single-lane DDR5 8000 MHz capable of 128 GB/s, and modern GDDR even faster.
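The bandwidth figures can be checked with simple arithmetic. The sketch below computes peak theoretical bandwidth from a transfer rate and a data-bus width. The bus widths used here (64 bits for one channel, 128 bits for two) are assumptions, since the text does not state them, and the function name `peak_bandwidth_gb_s` is hypothetical.

```python
def peak_bandwidth_gb_s(transfers_per_second, bus_width_bits):
    """Peak theoretical bandwidth in GB/s, with 1 GB = 10**9 bytes."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# 8000 million transfers per second, under two assumed bus widths.
one_channel = peak_bandwidth_gb_s(8000e6, 64)
two_channels = peak_bandwidth_gb_s(8000e6, 128)

nvme_gb_s = 7.0  # the approximate NVMe/PCIe figure quoted above
ratio = two_channels / nvme_gb_s
```

Under these assumptions, the 128 GB/s figure corresponds to a 128-bit-wide data path, and a single 64-bit channel at the same transfer rate would deliver half of that. Either way the result is roughly an order of magnitude above the ~7 GB/s quoted for NVMe drives.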