Reed–Solomon error correction

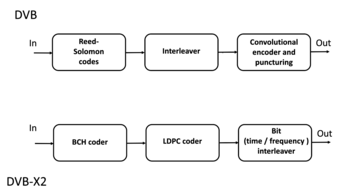

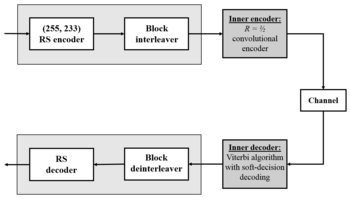

[1] They have many applications, including consumer technologies such as MiniDiscs, CDs, DVDs, Blu-ray discs, QR codes, Data Matrix, data transmission technologies such as DSL and WiMAX, broadcast systems such as satellite communications, DVB and ATSC, and storage systems such as RAID 6.

Reed–Solomon codes operate on a block of data treated as a set of finite-field elements called symbols.

Reed–Solomon codes were developed in 1960 by Irving S. Reed and Gustave Solomon, who were then staff members of MIT Lincoln Laboratory.

In 1975, another improved BCH scheme decoder was developed by Yasuo Sugiyama, based on the extended Euclidean algorithm.

The first commercial application in mass-produced consumer products appeared in 1982 with the compact disc, where two interleaved Reed–Solomon codes are used.

In 2002, another original scheme decoder was developed by Shuhong Gao, based on the extended Euclidean algorithm.

[6] Reed–Solomon coding is very widely used in mass storage systems to correct the burst errors associated with media defects.

It was the first use of strong error correction coding in a mass-produced consumer product, and DAT and DVD use similar schemes.

The result is a CIRC that can completely correct error bursts up to 4000 bits, or about 2.5 mm on the disc surface.

Specialized forms of Reed–Solomon codes, specifically Cauchy-RS and Vandermonde-RS, can be used to overcome the unreliable nature of data transmission over erasure channels.

Reed–Solomon codes are also used in xDSL systems and CCSDS's Space Communications Protocol Specifications as a form of forward error correction.

In the original view of Reed & Solomon (1960), every codeword of the Reed–Solomon code is a sequence of function values of a polynomial of degree less than

In order to obtain a codeword of the Reed–Solomon code, the message symbols (each within the q-sized alphabet) are treated as the coefficients of a polynomial

This trade-off between the relative distance and the rate is asymptotically optimal since, by the Singleton bound, every code satisfies

Sometimes error locations are known in advance (e.g., "side information" in demodulator signal-to-noise ratios)—these are called erasures.

The "missing" bits in a shortened code need to be filled by either zeros or ones, depending on whether the data is complemented or not.

In the original view of Reed and Solomon, where the codewords are the values of a polynomial, one can choose the sequence of evaluation points in such a way as to make the code cyclic.

So choosing a sequence of primitive root powers as the evaluation points makes the original view Reed–Solomon code cyclic.

Consequently, the problem is finding the Xk, because then the leftmost matrix would be known, and both sides of the equation could be multiplied by its inverse, yielding Yk In the variant of this algorithm where the locations of the errors are already known (when it is being used as an erasure code), this is the end.

The error locations (Xk) are already known by some other method (for example, in an FM transmission, the sections where the bitstream was unclear or overcome with interference are probabilistically determinable from frequency analysis).

During each iteration, it calculates a discrepancy based on a current instance of Λ(x) with an assumed number of errors e:

Using the same data as the Peterson Gorenstein Zierler example above: The final value of C is the error locator polynomial, Λ(x).

The extended Euclidean algorithm can find a series of polynomials of the form where the degree of R decreases as i increases.

The Singleton bound states that the minimum distance d of a linear block code of size (n,k) is upper-bounded by n − k + 1.

In 2023, building on three exciting works,[17][18][19] coding theorists showed that Reed-Solomon codes defined over random evaluation points can actually achieve list decoding capacity (up to n−k errors) over linear size alphabets with high probability.

The advent of LDPC and turbo codes, which employ iterated soft-decision belief propagation decoding methods to achieve error-correction performance close to the theoretical limit, has spurred interest in applying soft-decision decoding to conventional algebraic codes.

In 2003, Ralf Koetter and Alexander Vardy presented a polynomial-time soft-decision algebraic list-decoding algorithm for Reed–Solomon codes, which was based upon the work by Sudan and Guruswami.

Reed & Solomon (1960) described a theoretical decoder that corrected errors by finding the most popular message polynomial.

A decoding procedure could use a method like Lagrange interpolation on various subsets of n codeword values taken k at a time to repeatedly produce potential polynomials, until a sufficient number of matching polynomials are produced to reasonably eliminate any errors in the received codeword.

Using RS(7,3), GF(929), and the set of evaluation points ai = i − 1 If the message polynomial is The codeword is Errors in transmission might cause this to be received instead.