Renormalization

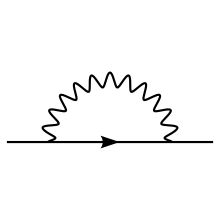

In quantum field theory, a cloud of virtual particles, such as photons, positrons, and others, surrounds and interacts with the initial electron.

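When spacetime is described as a continuum, certain statistical and quantum-mechanical constructions are not well defined. To define them, or make them unambiguous, a continuum limit must carefully remove the "construction scaffolding" of lattices at various scales.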

Renormalization procedures are based on the requirement that certain physical quantities (such as the mass and charge of an electron) equal observed (experimental) values.

That is, practical applications rely on the experimental value of a physical quantity. Because that value is empirical, however, the observed measurement marks areas of quantum field theory that still require a deeper derivation from theoretical foundations.

Renormalization was first developed in quantum electrodynamics (QED) to make sense of infinite integrals in perturbation theory.

All scales are linked in a broadly systematic way, and the physics pertinent to each scale is extracted with computational techniques suited to that scale.

Renormalization is distinct from regularization, another technique to control infinities by assuming the existence of new unknown physics at new scales.

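If the bare mass of a charged spherical shell is allowed to be negative, it might be possible to take a consistent point limit in which the total mass stays finite. This was called renormalization, and Lorentz and Abraham attempted to develop a classical theory of the electron this way.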
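The classical divergence behind this early use of the word can be sketched numerically. The sketch assumes the electron is a thin spherical shell of radius r carrying charge e. It takes the electromagnetic mass to be the field energy e²/(8πε₀r) divided by c², ignoring the 4/3 subtleties of the Abraham–Lorentz model. The bare mass is then whatever remains of the observed mass. The function names are illustrative only. Below roughly 1.4 × 10⁻¹⁵ m, which is half the classical electron radius, the required bare mass turns negative. It diverges to minus infinity as r → 0.

```python
import math

# CODATA 2018 values in SI units
E_CHARGE = 1.602176634e-19      # elementary charge, C
EPSILON_0 = 8.8541878128e-12    # vacuum permittivity, F/m
C_LIGHT = 299_792_458.0         # speed of light, m/s
M_ELECTRON = 9.1093837015e-31   # observed electron mass, kg


def electromagnetic_mass(radius_m: float, charge: float = E_CHARGE) -> float:
    """Field energy q^2 / (8 pi eps0 r) of a thin charged shell, divided by c^2."""
    energy = charge**2 / (8 * math.pi * EPSILON_0 * radius_m)
    return energy / C_LIGHT**2


def bare_mass(radius_m: float, observed_mass: float = M_ELECTRON) -> float:
    """Bare mass needed so that bare + electromagnetic mass equals the observed mass."""
    return observed_mass - electromagnetic_mass(radius_m)


# The electromagnetic part grows like 1/r, so the bare mass must grow ever more negative
for r in (1e-13, 1e-14, 1e-15, 1e-16):
    print(f"r = {r:.0e} m: m_bare / m_e = {bare_mass(r) / M_ELECTRON:+.3f}")
```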

When developing quantum electrodynamics in the 1930s, Max Born, Werner Heisenberg, Pascual Jordan, and Paul Dirac discovered that in perturbative corrections many integrals were divergent (see The problem of infinities).

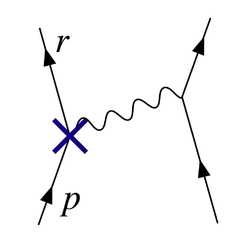

The electron on the left side of the diagram, represented by the solid line, starts out with 4-momentum p^μ and ends up with 4-momentum r^μ.

If the theory is renormalizable (see below for more on this), as it is in QED, the divergent parts of loop diagrams can all be decomposed into pieces with three or fewer legs, with an algebraic form that can be canceled out by the second term (or by the similar counterterms that come from Z0 and Z3).

[22] According to renormalization group insights, detailed in the next section, splitting the bare terms into original terms and counterterms is unnatural and actually unphysical, as all scales of the problem enter in continuous, systematic ways.

To minimize the contribution of loop diagrams to a given calculation (and therefore make it easier to extract results), one chooses a renormalization point close to the energies and momenta exchanged in the interaction.

Colloquially, particle physicists often speak of certain physical "constants" as varying with the energy of interaction, though in fact, it is the renormalization scale that is the independent quantity.
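The textbook leading-logarithm, one-loop formula for the QED coupling makes the "running" concrete: 1/α(Q) = 1/α(0) − (1/(3π)) ln(Q²/m_e²). This sketch assumes that only the electron loop contributes and that Q ≫ m_e. Because other charged particles are ignored, it gives 1/α ≈ 134 near 91 GeV. The measured value there is about 128. The same formula also diverges at a finite scale, the Landau pole, where the denominator vanishes. The function names are illustrative.

```python
import math

ALPHA_0 = 1 / 137.035999      # fine-structure constant in the low-energy limit
M_ELECTRON_GEV = 0.000510999  # electron mass in GeV


def alpha_qed_one_loop(q_gev: float) -> float:
    """Leading-log one-loop running of alpha, electron loop only, valid for q >> m_e."""
    log_term = math.log(q_gev**2 / M_ELECTRON_GEV**2)
    return ALPHA_0 / (1 - (ALPHA_0 / (3 * math.pi)) * log_term)


def landau_pole_gev() -> float:
    """Scale at which the one-loop denominator above vanishes and alpha diverges."""
    return M_ELECTRON_GEV * math.exp(3 * math.pi / (2 * ALPHA_0))


for q in (1.0, 10.0, 91.19):
    print(f"Q = {q:6.2f} GeV: 1/alpha = {1 / alpha_qed_one_loop(q):.2f}")
print(f"one-loop Landau pole ~ {landau_pole_gev():.1e} GeV")
```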

Choosing an increasing energy scale and using the renormalization group makes this clear from simple Feynman diagrams; were this not done, the prediction would be the same, but would arise from complicated high-order cancellations.

An essentially arbitrary modification to the loop integrands, or regulator, can make them drop off faster at high energies and momenta, in such a manner that the integrals converge.
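A hard momentum cutoff Λ is the crudest such regulator. This sketch applies it to the radial part of a logarithmically divergent four-dimensional Euclidean loop integral, I(Λ, m) = ∫₀^Λ k³/(k² + m²)² dk, which has a closed form. I(Λ, m) itself grows like ln Λ. Its difference from the same integral at a reference mass m_ref, however, tends to the cutoff-independent value ln(m_ref/m). That subtraction is a simplified analogue of fixing a quantity at a reference point. The function names and the choice of this integral are illustrative assumptions.

```python
import math


def cutoff_integral(cutoff: float, mass: float) -> float:
    """Closed form of integral_0^cutoff k^3 / (k^2 + m^2)^2 dk.

    Without the cutoff this integral diverges logarithmically.
    """
    lam2, m2 = cutoff**2, mass**2
    return 0.5 * (math.log((lam2 + m2) / m2) + m2 / (lam2 + m2) - 1.0)


def subtracted_integral(cutoff: float, mass: float, reference_mass: float) -> float:
    """Difference from the same integral evaluated at a reference mass."""
    return cutoff_integral(cutoff, mass) - cutoff_integral(cutoff, reference_mass)


for lam in (1e2, 1e4, 1e6):
    print(
        f"Lambda = {lam:.0e}: I = {cutoff_integral(lam, 1.0):.4f}, "
        f"subtracted = {subtracted_integral(lam, 1.0, 2.0):.6f}"
    )
```

The raw integral keeps growing with Λ, while the subtracted one settles near ln 2.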

One of the most popular in modern use is dimensional regularization, invented by Gerardus 't Hooft and Martinus J. G. Veltman,[23] which tames the integrals by carrying them into a space with a fictitious fractional number of dimensions.
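The standard textbook result for one Euclidean loop integral shows how this works: ∫ d^dk/(2π)^d (k² + Δ)⁻² = Γ(2 − d/2) (4π)^(−d/2) Δ^(d/2 − 2). The integral is finite for non-integer d below 4. The divergence reappears as a simple pole in ε = 4 − d, because Γ(ε/2) ≈ 2/ε. This sketch only evaluates that single formula numerically and is not a full dimensionally regularized calculation. The function name is hypothetical.

```python
import math


def dimreg_bubble(d: float, delta: float = 1.0) -> float:
    """Euclidean integral  int d^d k / (2 pi)^d  1 / (k^2 + delta)^2  in d dimensions.

    Uses Gamma(2 - d/2) / (4 pi)^(d/2) * delta^(d/2 - 2); finite for d < 4.
    """
    return math.gamma(2 - d / 2) / (4 * math.pi) ** (d / 2) * delta ** (d / 2 - 2)


for eps in (0.1, 0.01, 0.001):
    value = dimreg_bubble(4 - eps)
    # eps * value approaches 2 / (16 pi^2) = 1 / (8 pi^2), exposing the 2/eps pole
    print(f"eps = {eps}: I = {value:.4f}, eps * I = {eps * value:.6f}")
```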

Freeman Dyson argued that these infinities are of a basic nature and cannot be eliminated by any formal mathematical procedures, such as the renormalization method.

Despite his crucial role in the development of quantum electrodynamics, Richard Feynman wrote critically of renormalization in 1985.[28] Feynman was also concerned that all field theories known in the 1960s had the property that the interactions become infinitely strong at short enough distance scales.

Beginning in the 1970s, however, inspired by work on the renormalization group and effective field theory, and despite the fact that Dirac and various others—all of whom belonged to the older generation—never withdrew their criticisms, attitudes began to change, especially among younger theorists.

Kenneth G. Wilson and others demonstrated that the renormalization group is useful in statistical field theory applied to condensed matter physics, where it provides important insights into the behavior of phase transitions.

At the colossal energy scale of 10¹⁵ GeV (far beyond the reach of our current particle accelerators), the coupling strengths of the strong, weak, and electromagnetic interactions all become approximately the same size (Grotz and Klapdor 1990, p. 254), a major motivation for speculations about grand unified theory.
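This sketch uses one-loop renormalization group running of the three Standard Model gauge couplings: 1/α_i(μ) = 1/α_i(M_Z) − (b_i/2π) ln(μ/M_Z). It assumes the standard one-loop coefficients b = (41/10, −19/6, −7), with the U(1) coupling in the grand-unified normalization. The starting values at M_Z are rounded. At this order in the Standard Model the three inverse couplings approach each other but do not meet at a single point. Names and rounding are illustrative.

```python
import math

M_Z_GEV = 91.19
# Rounded inverse couplings at M_Z (U(1)_Y in the grand-unified normalization)
INV_ALPHA_MZ = {"U(1)_Y": 59.0, "SU(2)_L": 29.6, "SU(3)_c": 8.5}
# One-loop Standard Model coefficients b_i, with d(1/alpha_i)/d ln(mu) = -b_i / (2 pi)
B_COEFF = {"U(1)_Y": 41 / 10, "SU(2)_L": -19 / 6, "SU(3)_c": -7.0}


def inverse_alpha(group: str, mu_gev: float) -> float:
    """One-loop running of 1/alpha for the named gauge group from M_Z up to mu."""
    return INV_ALPHA_MZ[group] - B_COEFF[group] / (2 * math.pi) * math.log(mu_gev / M_Z_GEV)


for mu in (M_Z_GEV, 1e10, 1e15):
    values = ", ".join(f"{g}: {inverse_alpha(g, mu):.1f}" for g in INV_ALPHA_MZ)
    print(f"mu = {mu:.2e} GeV -> 1/alpha = {values}")
```

At M_Z the inverse couplings differ by a factor of about seven. Near 10¹⁵ GeV they all lie roughly between 39 and 45.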

Instead of being only a worrisome problem, renormalization has become an important theoretical tool for studying the behavior of field theories in different regimes.

As Lewis Ryder has put it, "In the Quantum Theory, these [classical] divergences do not disappear; on the contrary, they appear to get worse."

Not all theories lend themselves to renormalization in the manner described above, with a finite supply of counterterms and all quantities becoming cutoff-independent at the end of the calculation.

If the Lagrangian contains combinations of field operators of high enough dimension in energy units, the counterterms required to cancel all divergences proliferate to infinite number, and, at first glance, the theory would seem to gain an infinite number of free parameters and therefore lose all predictive power, becoming scientifically worthless.
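The usual power-counting criterion behind "high enough dimension" can be sketched in four spacetime dimensions with ħ = c = 1. The Lagrangian density has mass dimension 4, scalar and gauge fields have dimension 1, and Dirac fields have dimension 3/2. A coupling whose operator exceeds dimension 4 therefore has negative mass dimension and is nonrenormalizable by power counting. The encoding of an operator as a list of field names is a hypothetical convenience that ignores Lorentz and gauge structure.

```python
from fractions import Fraction

SPACETIME_DIM = 4

# Mass dimensions in four spacetime dimensions (natural units)
MASS_DIMENSION = {
    "phi": Fraction(1),     # scalar field
    "psi": Fraction(3, 2),  # Dirac field (psi or psi-bar)
    "A": Fraction(1),       # gauge field
}


def coupling_dimension(factors: list[str]) -> Fraction:
    """Mass dimension of the coupling that multiplies the product of `factors`."""
    operator_dim = sum((MASS_DIMENSION[f] for f in factors), Fraction(0))
    return SPACETIME_DIM - operator_dim


def classify(factors: list[str]) -> str:
    """Power-counting classification of a single interaction term."""
    dim = coupling_dimension(factors)
    if dim > 0:
        return "super-renormalizable"
    if dim == 0:
        return "renormalizable"
    return "nonrenormalizable"


examples = {
    "phi^3": ["phi"] * 3,
    "phi^4": ["phi"] * 4,
    "QED vertex psibar A psi": ["psi", "A", "psi"],
    "phi^6": ["phi"] * 6,
    "Fermi four-fermion": ["psi"] * 4,
}
for name, factors in examples.items():
    print(f"{name:24s} coupling dim {coupling_dimension(factors)!s:>3}: {classify(factors)}")
```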

The Standard Model of particle physics contains only renormalizable operators, but the interactions of general relativity become nonrenormalizable operators if one attempts to construct a field theory of quantum gravity in the most straightforward manner (treating the metric in the Einstein–Hilbert Lagrangian as a perturbation about the Minkowski metric), suggesting that perturbation theory is not satisfactory in application to quantum gravity.

In nonrenormalizable effective field theories, the terms in the Lagrangian do multiply without limit, but their coefficients are suppressed by ever higher inverse powers of the energy cutoff.

Nonrenormalizable interactions in effective field theories rapidly become weaker as the energy scale becomes much smaller than the cutoff.
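A dimensional-analysis estimate shows how fast this suppression sets in. In four dimensions, an operator of dimension d carries a coefficient of order Λ^(4−d). Relative to a renormalizable term, its effect at energy E is therefore roughly (E/Λ)^(d−4). This sketch assumes the dimensionless prefactor is of order one. The function name and the sample energies (100 GeV probed against a 10 TeV cutoff) are hypothetical.

```python
def eft_suppression(energy: float, cutoff: float, operator_dim: int) -> float:
    """Naive size (E / Lambda)^(operator_dim - 4) of a term relative to a dimension-4 one.

    Assumes the dimensionless coefficient in front of the operator is of order one.
    """
    if operator_dim < 4:
        raise ValueError("only operators of dimension >= 4 are suppressed by the cutoff")
    if not 0 < energy <= cutoff:
        raise ValueError("the estimate assumes 0 < energy <= cutoff")
    return (energy / cutoff) ** (operator_dim - 4)


# Hypothetical example: probing at 100 GeV with a cutoff of 10 TeV
for dim in (4, 5, 6, 8):
    print(f"dimension {dim}: relative size ~ {eft_suppression(100.0, 10_000.0, dim):.0e}")
```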

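Leo Kadanoff's block-spin approach, which defines the components of a theory at large distances as aggregates of components at shorter distances, covered the conceptual point and was given full computational substance[22] in the extensive and important contributions of Kenneth Wilson.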
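Real-space renormalization sums short-distance degrees of freedom into effective ones at longer distances. The simplest exactly solvable illustration is decimation of the zero-field one-dimensional Ising chain. This is a different scheme from block spins, chosen here only because it is exact. Summing out every other spin doubles the lattice spacing and leaves a nearest-neighbour chain with renormalized coupling K′, where tanh K′ = (tanh K)². Repeating the step makes K flow toward the K = 0 fixed point, consistent with the absence of a finite-temperature phase transition in one dimension. The function names are illustrative.

```python
import math


def decimate_1d_ising(coupling: float) -> float:
    """Sum out every other spin of a zero-field 1D Ising chain.

    The remaining spins interact with K' satisfying tanh(K') = tanh(K)**2.
    Assumes a finite coupling, for which tanh(K) < 1 in floating point.
    """
    return math.atanh(math.tanh(coupling) ** 2)


def rg_flow(coupling: float, steps: int) -> list[float]:
    """Couplings after 0, 1, ..., steps successive decimations."""
    flow = [coupling]
    for _ in range(steps):
        flow.append(decimate_1d_ising(flow[-1]))
    return flow


print([round(k, 4) for k in rg_flow(2.0, 6)])
```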