Selection algorithm

Selection includes as special cases the problems of finding the minimum, median, and maximum element in the collection.

For data that is already structured, faster algorithms may be possible; as an extreme case, selection in an already-sorted array takes time

An algorithm for the selection problem takes as input a collection of values, and a number

For this to be well-defined, it should be possible to sort the values into an order from smallest to largest; for instance, they may be integers, floating-point numbers, or some other kind of object with a numeric key.

Nevertheless, the simplicity of this approach makes it attractive, especially when a highly-optimized sorting routine is provided as part of a runtime library, but a selection algorithm is not.

For inputs of moderate size, sorting can be faster than non-random selection algorithms, because of the smaller constant factors in its running time.

[4] This method also produces a sorted version of the collection, which may be useful for other later computations, and in particular for selection with other choices of

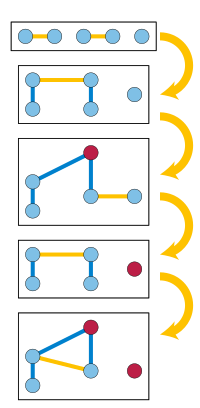

One possible design of a consolation bracket in a single-elimination tournament, in which the teams who lost to the eventual winner play another mini-tournament to determine second place, can be seen as an instance of this method.

It can be found by applying a selection algorithm recursively, seeking the value in this position in

may be done by making new collections for these sets, or by a method that partitions a given list or array data type in-place.

, are based on the concept of factories, introduced in 1976 by Arnold Schönhage, Mike Paterson, and Nick Pippenger.

[19] Parallel algorithms for selection have been studied since 1975, when Leslie Valiant introduced the parallel comparison tree model for analyzing these algorithms, and proved that in this model selection using a linear number of comparisons requires

[24] With concurrent memory access, slightly faster parallel time is possible in general,[25] and the

This method of performing selection in a heap has been applied to problems of listing multiple solutions to combinatorial optimization problems, such as finding the k shortest paths in a weighted graph, by defining a state space of solutions in the form of an implicitly defined heap-ordered tree, and then applying this selection algorithm to this tree.

[28] Beyond this simple argument, there has been a significant amount of research on the exact number of comparisons needed for selection, both in the randomized and deterministic cases.

After several incorrect attempts, the first tight lower bound on this case was published in 1964 by Soviet mathematician Sergey Kislitsyn.

It can be shown by observing that selecting the second-smallest also requires distinguishing the smallest value from the rest, and by considering the number

(subject to consistency with at least one possible ordering) rather than by the numerical values of the given items, shows that it is possible to force

The argument is made directly for deterministic algorithms, with a number of comparisons that is averaged over all possible permutations of the input values.

[1] By Yao's principle, it also applies to the expected number of comparisons for a randomized algorithm on its worst-case input.

[35] The special case of median-finding has a slightly larger lower bound on the number of comparisons, at least

th smallest requires exactly the same number of comparisons, in the worst case, as selecting the

[14][38] Very few languages have built-in support for general selection, although many provide facilities for finding the smallest or largest element of a list.

[3] Python's standard library includes heapq.nsmallest and heapq.nlargest functions for returning the smallest or largest elements from a collection, in sorted order.

In average cases, there are likely to be few heap updates and most input elements are processed with only a single comparison.

For example, extracting the 100 largest or smallest values out of 10,000,000 random inputs makes 10,009,401 comparisons on average.

[39] Since 2017, Matlab has included maxk() and mink() functions, which return the maximal (minimal)

[40] Quickselect was presented without analysis by Tony Hoare in 1965,[41] and first analyzed in a 1971 technical report by Donald Knuth.

[11] The first known linear time deterministic selection algorithm is the median of medians method, published in 1973 by Manuel Blum, Robert W. Floyd, Vaughan Pratt, Ron Rivest, and Robert Tarjan.

[5] They trace the formulation of the selection problem to work of Charles L. Dodgson (better known as Lewis Carroll) who in 1883 pointed out that the usual design of single-elimination sports tournaments does not guarantee that the second-best player wins second place,[5][42] and to work of Hugo Steinhaus circa 1930, who followed up this same line of thought by asking for a tournament design that can make this guarantee, with a minimum number of games played (that is, comparisons).