Weak supervision

Weak supervision (also known as semi-supervised learning) is a paradigm in machine learning whose relevance and notability increased with the advent of large language models, owing to the large amount of data required to train them.

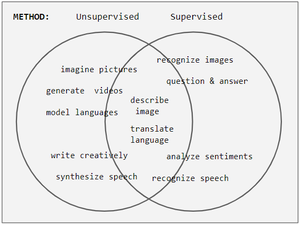

It is characterized by combining a small amount of human-labeled data (the only kind used in the more expensive and time-consuming supervised learning paradigm) with a large amount of unlabeled data (the only kind used in the unsupervised learning paradigm).

In other words, the desired output values are provided only for a subset of the training data.

In the transductive setting, the unlabeled examples act as exam questions: the learner is asked to label exactly those points.

The acquisition of labeled data for a learning problem often requires a skilled human agent (e.g. to transcribe an audio segment) or a physical experiment (e.g. determining the 3D structure of a protein or determining whether there is oil at a particular location).

[1] The goal of transductive learning is to infer the correct labels for the given unlabeled data only.

It is unnecessary (and, according to Vapnik's principle, imprudent) to perform transductive learning by way of inferring a classification rule over the entire input space; however, in practice, algorithms formally designed for transduction or induction are often used interchangeably.
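The difference between the two settings, and why the same algorithm can serve both, can be illustrated with a toy sketch. It assumes one-dimensional inputs and a plain 1-nearest-neighbor rule; the names `nearest_label`, `transductive_labels`, and `classifier` are hypothetical, and the rule itself ignores the unlabeled data, so it only illustrates the two kinds of output, not a semi-supervised method.

```python
def nearest_label(x, labeled):
    """Label of the labeled example closest to x (1-nearest-neighbor)."""
    return min(labeled, key=lambda pair: abs(x - pair[0]))[1]

labeled = [(0.0, "neg"), (10.0, "pos")]
unlabeled = [1.0, 4.0, 9.0]

# Transductive use: produce labels only for the unlabeled points we were given.
transductive_labels = {x: nearest_label(x, labeled) for x in unlabeled}

# Inductive use: package the same rule as a classifier for arbitrary future inputs.
def classifier(x):
    return nearest_label(x, labeled)
```

In the transductive case the result is a finite table of labels; in the inductive case it is a function defined over the whole input space.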

Semi-supervised learning algorithms make use of at least one of the following assumptions:[2]

Under the continuity (smoothness) assumption, points that are close to each other are more likely to share a label.

This is also generally assumed in supervised learning and yields a preference for geometrically simple decision boundaries.

In the case of semi-supervised learning, the smoothness assumption additionally yields a preference for decision boundaries in low-density regions, so few points are close to each other but in different classes.

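Under the cluster assumption, the data tend to form discrete clusters, and points in the same cluster are more likely to share a label. This is a special case of the smoothness assumption and gives rise to feature learning with clustering algorithms.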

Under the manifold assumption, the data lie approximately on a manifold of much lower dimension than the input space.

In this case, learning the manifold using both the labeled and unlabeled data can avoid the curse of dimensionality.

The manifold assumption is practical when high-dimensional data are generated by some process that may be hard to model directly, but which has only a few degrees of freedom.

[5] The transductive learning framework was formally introduced by Vladimir Vapnik in the 1970s.

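The parameter vector $\theta$ of the generative model is then chosen based on fit to both the labeled and unlabeled data, with the unlabeled term weighted by a coefficient $\lambda$:

$$\underset{\theta}{\operatorname{argmax}} \left( \log p(\{x_i, y_i\}_{i=1}^{l} \mid \theta) + \lambda \log p(\{x_i\}_{i=l+1}^{l+u} \mid \theta) \right)$$

where the first $l$ examples are labeled and the remaining $u$ are unlabeled.

Another major class of methods attempts to place boundaries in regions with few data points (labeled or unlabeled).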

One of the most commonly used algorithms is the transductive support vector machine, or TSVM (which, despite its name, may be used for inductive learning as well).

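TSVM selects a decision function $f^*(x) = h^*(x) + b$, with $h$ drawn from a reproducing kernel Hilbert space $\mathcal{H}$, by minimizing the regularized empirical risk:

$$f^* = \underset{f}{\operatorname{argmin}} \left( \sum_{i=1}^{l} (1 - y_i f(x_i))_+ + \lambda_1 \|h\|_{\mathcal{H}}^2 + \lambda_2 \sum_{i=l+1}^{l+u} (1 - |f(x_i)|)_+ \right)$$

The first sum is the standard hinge loss over the $l$ labeled examples; the second applies the same loss to the $u$ unlabeled examples with the guessed label $y = \operatorname{sign} f(x)$. An exact solution is intractable due to the non-convex term $(1 - |f(x)|)_+$.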

[9] Other approaches that implement low-density separation include Gaussian process models, information regularization, and entropy minimization (of which TSVM is a special case).
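To make the low-density idea concrete, here is a minimal sketch of a transductive-SVM-style objective for a one-dimensional linear decision function f(x) = w*x + b. It assumes a commonly used formulation: hinge loss on labeled points, a symmetric hinge on unlabeled points, and an L2 penalty on w. The function name `tsvm_objective`, the weights `lam_reg` and `lam_unl`, and the toy data are illustrative assumptions; the sketch only evaluates the objective and does not attempt the (non-convex) optimization.

```python
def hinge(z):
    """Positive part of 1 - z, i.e. the standard hinge."""
    return max(0.0, 1.0 - z)

def tsvm_objective(w, b, labeled, unlabeled, lam_reg=0.1, lam_unl=1.0):
    """Evaluate a TSVM-style objective for f(x) = w * x + b (assumed form)."""
    f = lambda x: w * x + b
    # Hinge loss on labeled points: penalizes margin violations y * f(x) < 1.
    labeled_loss = sum(hinge(y * f(x)) for x, y in labeled)
    # Symmetric hinge on unlabeled points: penalizes points inside the margin,
    # whichever side of the boundary they fall on.
    unlabeled_loss = sum(hinge(abs(f(x))) for x in unlabeled)
    return labeled_loss + lam_reg * w * w + lam_unl * unlabeled_loss

labeled = [(-2.0, -1), (2.0, 1)]
unlabeled = [-2.2, -1.8, -1.5, 1.6, 1.9, 2.3]  # two clusters with a gap around 0

through_gap = tsvm_objective(1.0, 0.0, labeled, unlabeled)       # boundary at x = 0
through_cluster = tsvm_objective(1.0, -1.8, labeled, unlabeled)  # boundary at x = 1.8
```

With this toy data, the boundary placed in the empty gap scores lower than the one cutting through the right-hand cluster, which is the behavior low-density separation is meant to reward.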

Graph-based methods for semi-supervised learning use a graph representation of the data, with a node for each labeled and unlabeled example.

The graph may be constructed using domain knowledge or similarity of examples; two common methods are to connect each data point to its $k$ nearest neighbors or to all examples within some distance $\epsilon$.

A term is added to the standard Tikhonov regularization problem to enforce smoothness of the solution relative to the manifold (in the intrinsic space of the problem) as well as relative to the ambient input space.

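Defining the graph Laplacian $L = D - W$, where $W$ is the matrix of edge weights and $D$ is the diagonal degree matrix with $D_{ii} = \sum_j W_{ij}$, and letting $\mathbf{f}$ be the vector of function values at the $l + u$ data points, we have

$$\mathbf{f}^T L \mathbf{f} = \frac{1}{2} \sum_{i,j} W_{ij} (f_i - f_j)^2,$$

which serves as a discrete approximation of the intrinsic smoothness penalty. The graph-based approach to Laplacian regularization is closely related to the finite difference method.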
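The graph smoothness penalty can be sketched in a few lines. The example below assumes one-dimensional points, a symmetrized k-nearest-neighbor graph, and Gaussian edge weights (one common choice, assumed here rather than taken from the text). It checks numerically that the Laplacian quadratic form equals half the weighted sum of squared differences across edges; all function names are hypothetical.

```python
import math

def gaussian_knn_graph(points, k=2, eps=1.0):
    """Symmetric k-nearest-neighbor graph with Gaussian edge weights."""
    n = len(points)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Indices of the k nearest neighbors of point i (excluding i itself).
        order = sorted((j for j in range(n) if j != i),
                       key=lambda j: abs(points[i] - points[j]))
        for j in order[:k]:
            w = math.exp(-((points[i] - points[j]) ** 2) / eps ** 2)
            W[i][j] = W[j][i] = w  # symmetrize the graph
    return W

def laplacian(W):
    """Graph Laplacian L = D - W, with D the diagonal matrix of row sums."""
    n = len(W)
    return [[(sum(W[i]) if i == j else 0.0) - W[i][j] for j in range(n)]
            for i in range(n)]

def quadratic_form(L, f):
    """Compute f^T L f."""
    n = len(f)
    return sum(f[i] * L[i][j] * f[j] for i in range(n) for j in range(n))

def pairwise_penalty(W, f):
    """Compute 0.5 * sum_ij W_ij * (f_i - f_j)^2."""
    n = len(f)
    return 0.5 * sum(W[i][j] * (f[i] - f[j]) ** 2
                     for i in range(n) for j in range(n))

points = [0.0, 0.2, 0.4, 3.0, 3.2, 3.4]   # two well-separated clusters
W = gaussian_knn_graph(points)
L = laplacian(W)

smooth = [-1, -1, -1, 1, 1, 1]   # constant within each cluster
rough = [-1, 1, -1, 1, -1, 1]    # changes sign between neighbors
```

A labeling that is constant within each cluster incurs essentially no penalty, while one that flips between neighboring points is penalized, which is why adding this term favors solutions that respect the graph structure.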

The labeled and unlabeled examples together may inform a choice of representation, distance metric, or kernel for the data in an unsupervised first step.

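In self-training, a supervised learning algorithm is first trained on the labeled data only. The resulting classifier is then applied to the unlabeled data to generate more labeled examples as input for the supervised learning algorithm.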

[16] Co-training is an extension of self-training in which multiple classifiers are trained on different (ideally disjoint) sets of features and generate labeled examples for one another.
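The self-training loop described above can be sketched as follows. This is a minimal illustration under stated assumptions: inputs are one-dimensional, the base learner is a nearest-centroid classifier, and only predictions whose confidence exceeds a threshold are added in each round (a common practice, assumed here). The names `fit_centroids`, `predict_with_confidence`, `self_train`, and the `threshold` parameter are hypothetical.

```python
def fit_centroids(labeled):
    """Supervised base learner: one centroid (mean) per class."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_with_confidence(centroids, x):
    """Return (label, confidence); confidence is how much closer the
    nearest centroid is than the second nearest (needs >= 2 classes)."""
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    best, runner_up = ranked[0], ranked[1]
    return best, abs(x - centroids[runner_up]) - abs(x - centroids[best])

def self_train(labeled, unlabeled, threshold=2.0, max_rounds=10):
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)          # train on current labels
        confident, remaining = [], []
        for x in pool:
            label, conf = predict_with_confidence(centroids, x)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:                           # nothing new to add: stop
            break
        labeled.extend(confident)                   # adopt pseudo-labels
        pool = [x for x, _ in remaining]
    return fit_centroids(labeled), labeled

seed = [(0.0, "a"), (10.0, "b")]
unlabeled = [1.0, 2.0, 3.0, 4.5, 7.0, 8.0, 9.0]
centroids, pseudo_labeled = self_train(seed, unlabeled)
```

In this toy run the ambiguous point 4.5 never clears the threshold and stays unlabeled, while the other points are absorbed and shift the class centroids. Co-training would follow the same pattern with two such learners, each trained on its own feature set and supplying pseudo-labels to the other.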

[17] Human responses to formal semi-supervised learning problems have yielded varying conclusions about the degree of influence of the unlabeled data.

Much of human concept learning involves a small amount of direct instruction (e.g. parental labeling of objects during childhood) combined with large amounts of unlabeled experience (e.g. observation of objects without naming or counting them, or at least without feedback).

Human infants are sensitive to the structure of unlabeled natural categories such as images of dogs and cats or male and female faces.