Visual perception

The major problem in visual perception is that what people see is not simply a translation of retinal stimuli (i.e., the image on the retina); the brain alters the basic information taken in.

Thus people interested in perception have long struggled to explain what visual processing does to create what is actually seen.

The second school advocated the so-called 'intromission' approach, which sees vision as arising from something representative of the object entering the eyes.

Plato makes this assertion in his dialogue Timaeus (45b and 46b), as does Empedocles (as reported by Aristotle in his De Sensu, DK frag.

He proposed that the brain was making assumptions and drawing conclusions from incomplete data, based on previous experience.

Another, probability-based form of the unconscious inference hypothesis has recently been revived in so-called Bayesian studies of visual perception.[13]

Proponents of this approach hold that the visual system performs some form of Bayesian inference to derive a perception from sensory data.
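To make the idea concrete, here is a minimal Python sketch of Bayes' rule applied to a classic ambiguity: a shaded disc that could be a bump lit from above or a dent lit from below. The hypothesis labels, the likelihood values, and the light-from-above prior are all illustrative assumptions chosen for this example, not measured quantities or the model of any particular study. The "percept" is taken, by assumption, to be the maximum a posteriori (MAP) hypothesis.

```python
from typing import Dict


def posterior(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Bayes' rule: P(h | d) = P(d | h) * P(h) / sum over h' of P(d | h') * P(h')."""
    unnormalized = {h: likelihood[h] * prior[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(d), the normalizing constant
    return {h: p / evidence for h, p in unnormalized.items()}


def map_percept(prior: Dict[str, float], likelihood: Dict[str, float]) -> str:
    """Return the maximum a posteriori hypothesis, treated here as the 'percept'."""
    post = posterior(prior, likelihood)
    return max(post, key=post.get)


# Illustrative (assumed) numbers, not empirical values.
# P(image | hypothesis): the shading slightly favours the concave reading...
likelihood = {"convex, lit from above": 0.4, "concave, lit from below": 0.6}
# ...but an assumed prior strongly favours light coming from above.
prior = {"convex, lit from above": 0.8, "concave, lit from below": 0.2}

print(posterior(prior, likelihood))
print(map_percept(prior, likelihood))  # → convex, lit from above
```

Here the image data alone slightly favour the concave reading, yet the assumed prior tips the posterior (8/11 versus 3/11) toward the convex one. The prior is simply stipulated in this sketch, which is precisely the step whose principled justification is open to question.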

However, it is not clear how proponents of this view derive, in principle, the relevant probabilities required by the Bayesian equation.[citation needed]

Gestalt psychologists, working primarily in the 1930s and 1940s, raised many of the research questions that vision scientists still study today.

"Gestalt" is a German word that translates roughly as "configuration" or "pattern", while also conveying the sense of a "whole" or "emergent structure".

According to this theory, eight main factors determine how the visual system automatically groups elements into patterns: Proximity, Similarity, Closure, Symmetry, Common Fate (i.e., common motion), Continuity, Good Gestalt (a pattern that is regular, simple, and orderly), and Past Experience.
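Proximity lends itself to a simple computational illustration. The Python sketch below groups 2D dots transitively whenever two of them lie closer than a threshold (single-linkage clustering implemented with union-find). It is a toy stand-in that assumes Euclidean distance and a hypothetical `threshold` parameter; it is not a model of how the visual system actually implements grouping.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def group_by_proximity(points: List[Point], threshold: float) -> List[List[int]]:
    """Group point indices so that points closer than `threshold` share a group.

    Grouping is transitive (single linkage): if A is near B and B is near C,
    all three end up together. Implemented with a small union-find.
    """
    parent = list(range(len(points)))

    def find(i: int) -> int:
        # Follow parent links to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(i: int, j: int) -> None:
        parent[find(i)] = find(j)

    # Link every pair of points within the (hypothetical) threshold distance.
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) < threshold:
                union(i, j)

    # Collect indices by root; each group is in index order, groups sorted by first member.
    groups: Dict[int, List[int]] = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values(), key=lambda g: g[0])


# Six dots in a row: three close together, a gap, then three more.
dots = [(0, 0), (1, 0), (2, 0), (6, 0), (7, 0), (8, 0)]
print(group_by_proximity(dots, threshold=1.5))  # → [[0, 1, 2], [3, 4, 5]]
```

Raising the threshold until it bridges the gap merges everything into one group, and lowering it below the dot spacing leaves every dot on its own, so the single free parameter stands in for "how near is near".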

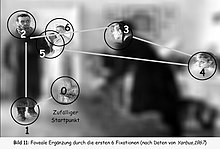

[citation needed] During the 1960s, technical developments permitted the continuous recording of eye movements during reading,[17] picture viewing,[18] and, later, visual problem solving;[19] once headset cameras became available, eye movements could also be recorded during driving.

The background is out of focus, representing peripheral vision; the first eye movement goes to the man's boots simply because they are very near the starting fixation and have reasonably high contrast.

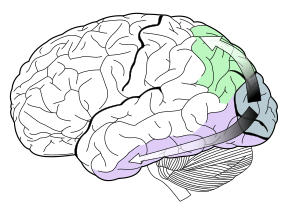

Using fMRI and electrophysiology, Doris Tsao and colleagues described brain regions and a mechanism for face recognition in macaque monkeys.[27]

Selectively shutting off neural activity in many small areas of the cortex made the animal unable, depending on the area silenced, to distinguish between certain particular pairs of objects.[30]

Studies of people whose sight has been restored after long blindness reveal that they cannot necessarily recognize objects and faces (as opposed to color, motion, and simple geometric shapes).

Some hypothesize that being blind during childhood prevents some part of the visual system necessary for these higher-level tasks from developing properly.[31]

The general belief that a critical period lasts until age 5 or 6 was challenged by a 2007 study that found that older patients could improve these abilities with years of exposure.

Marr described vision as proceeding from a two-dimensional visual array (on the retina) to a three-dimensional description of the world as output.

It is not clear how a preliminary depth map could, in principle, be constructed, nor how this would address the question of figure-ground organization, or grouping.[37]

A more recent, alternative framework proposes that vision is instead composed of three stages: encoding, selection, and decoding.

The cones are responsible for color perception and are of three distinct types, traditionally labeled red, green, and blue (more precisely, L, M, and S cones, after their peak sensitivity to long, medium, and short wavelengths).[40]

Photoreceptors contain a special chemical called a photopigment, which is embedded in the membrane of the lamellae; a single human rod contains approximately 10 million of them.

When light of an appropriate wavelength (one to which the specific photopigment is sensitive) strikes the photoreceptor, the photopigment splits in two. This sends a signal to the bipolar cell layer, which in turn signals the ganglion cells, whose axons form the optic nerve and transmit the information to the brain.

Changes in the firing rate of these neurons are what the brain interprets as different colors and, given enough information, as an image.

Special hardware structures and software algorithms provide machines with the capability to interpret the images coming from a camera or a sensor.