Social bot

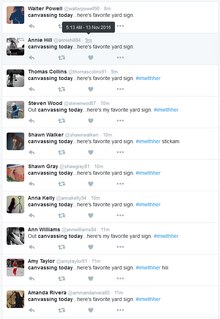

The messages (e.g. tweets) that a social bot distributes can be simple. Social bots can operate in groups and in various configurations, including under partial human control (hybrid) via algorithm.

Social bots can also use artificial intelligence and machine learning to express messages in more natural human dialogue.

Besides being able to (re-)produce or reuse messages autonomously, social bots share many traits with spambots, notably their tendency to infiltrate large user groups.

Using social bots is against the terms of service of many platforms, such as Twitter and Instagram, although it is allowed to some degree by others, such as Reddit and Discord.

Social bots are expected to play a role in the future shaping of public opinion by autonomously acting as incessant influencers.

[17] One detection method applies Benford's Law, which predicts the frequency distribution of leading significant digits, to detect malicious bots online.
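As a rough illustration of the idea, Benford's Law gives the expected share of each leading digit d as log10(1 + 1/d), and a set of account-related counts can be compared against that distribution. The sketch below is only one possible reading: the input (a hypothetical list of positive counts, such as follower counts), the function names, and the use of a Pearson chi-square statistic are assumptions made for illustration, not the procedure of any cited study.

```python
import math
from collections import Counter


def benford_expected():
    """Benford probabilities P(d) = log10(1 + 1/d) for digits 1..9."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(n):
    """Return the first significant digit of a positive integer."""
    return int(str(n)[0])


def benford_chi_square(values):
    """Pearson chi-square statistic of observed leading digits vs. Benford.

    `values` is a hypothetical list of positive integer counts.
    Larger results mean a larger deviation from Benford's Law.
    """
    digits = [leading_digit(v) for v in values if v > 0]
    if not digits:
        raise ValueError("need at least one positive value")
    counts = Counter(digits)
    total = len(digits)
    stat = 0.0
    for d, p in benford_expected().items():
        expected = p * total
        stat += (counts.get(d, 0) - expected) ** 2 / expected
    return stat
```

With nine digit categories the statistic has 8 degrees of freedom, so a value above roughly 15.5 would reject conformity to Benford's Law at the 5% level. A deviation on its own does not show that an account is a bot; it only flags data for closer inspection.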

[24] Due to increased security on the platform and the detection methods Instagram uses, some botting companies report problems with their services. Instagram imposes interaction limit thresholds based on past and current app usage, and many payment and email platforms deny these companies access to their services, which prevents potential clients from purchasing them.

[27] Some bots are used to automate scheduled tweets, download videos, set reminders and send warnings of natural disasters.

[29] In 2025, Meta announced that it would create an AI product to help users create AI characters on Instagram and Facebook. These characters would have bios and profile pictures and would be able to generate and share "AI-powered content" on the platforms.

[36] Its creator was Michael Sayman, a former product lead at Google who also worked at Facebook, Roblox, and Twitter.