Statistical learning theory

Statistical learning theory is a framework for machine learning drawing from the fields of statistics and functional analysis.[1][2][3]

Statistical learning theory deals with the statistical inference problem of finding a predictive function based on data.

Statistical learning theory has led to successful applications in fields such as computer vision, speech recognition, and bioinformatics.

Every point in the training set is an input–output pair, where the input maps to an output.

The learning problem consists of inferring the function that maps between the input and the output, such that the learned function can be used to predict the output from future input.

If the output takes a continuous range of values, it is a regression problem.

Using Ohm's law as an example, a regression could be performed with voltage as input and current as an output.

The regression would find the functional relationship between voltage and current to be $I = \frac{1}{R} V$, where $R$ is the resistance.
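As a minimal sketch of this regression, assume a model of the form $I = wV$ (a line through the origin) and a handful of hypothetical measurements taken on a resistor of roughly 5 ohms. The slope $w$ that minimizes the summed squared error has the closed form $\sum_i V_i I_i / \sum_i V_i^2$, and its reciprocal estimates $R$. The data and the helper name `fit_through_origin` are illustrative only.

```python
# Minimal sketch: learn the voltage -> current relationship by least squares,
# assuming the model I = w * V and hypothetical measurements on a ~5 ohm resistor.

def fit_through_origin(voltages, currents):
    """Return the slope w minimizing sum((I - w * V)**2) over the data."""
    numerator = sum(v * i for v, i in zip(voltages, currents))
    denominator = sum(v * v for v in voltages)
    return numerator / denominator

# Hypothetical measurements: I = V / 5 plus small, made-up measurement errors.
voltages = [1.0, 2.0, 3.0, 4.0, 5.0]
currents = [0.21, 0.39, 0.61, 0.80, 0.99]

w = fit_through_origin(voltages, currents)   # learned slope, an estimate of 1/R
estimated_resistance = 1.0 / w
print(f"slope 1/R = {w:.4f}, estimated R = {estimated_resistance:.2f} ohms")
```

Because the measurements are noisy, the learned slope only approximates $1/R$; more data would tighten the estimate.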

Classification problems are those for which the output will be an element from a discrete set of labels.

Classification is very common in machine learning applications.

In facial recognition, for instance, a picture of a person's face would be the input, and the output label would be that person's name.

After a function has been learned from the training set, it is validated on a test set: data that did not appear in the training set.

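Take $X$ to be the vector space of all possible inputs and $Y$ the vector space of all possible outputs. Statistical learning theory takes the perspective that there is some unknown probability distribution over the product space $Z = X \times Y$, i.e. there exists some unknown $p(z) = p(\mathbf{x}, y)$.

In this formalism, the inference problem consists of finding a function $f: X \to Y$ such that $f(\mathbf{x}) \sim y$. Let $\mathcal{H}$ be a space of functions $f: X \to Y$, called the hypothesis space; it is the space of functions the algorithm will search through.

Let $V(f(\mathbf{x}), y)$ be the loss function, a metric for the difference between the predicted value $f(\mathbf{x})$ and the actual value $y$. The expected risk is defined to be
$$I[f] = \int_{X \times Y} V(f(\mathbf{x}), y)\, p(\mathbf{x}, y)\, d\mathbf{x}\, dy.$$

Because the probability distribution $p(\mathbf{x}, y)$ is unknown, a proxy measure for the expected risk must be used.

This measure is based on the training set $S = \{(\mathbf{x}_1, y_1), \dots, (\mathbf{x}_n, y_n)\}$, a sample of $n$ pairs drawn from this unknown probability distribution. It is called the empirical risk:
$$I_S[f] = \frac{1}{n} \sum_{i=1}^{n} V(f(\mathbf{x}_i), y_i).$$
A learning algorithm that chooses the function $f_S$ minimizing the empirical risk is said to perform empirical risk minimization.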
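Empirical risk minimization can be sketched directly from its definition: average the loss over the training set, then keep the candidate function with the smallest average. In this minimal example the hypothesis space is assumed to be a small, finite set of lines $f(x) = wx$, the loss is the square loss, and the training data are made up; `empirical_risk` and `erm` are hypothetical helper names.

```python
# Minimal sketch of empirical risk minimization (ERM) over a small, finite
# hypothesis space. The candidate functions and training data are hypothetical.

def square_loss(prediction, y):
    return (y - prediction) ** 2

def empirical_risk(f, training_set, loss=square_loss):
    """Average loss of f over the training set: (1/n) * sum_i V(f(x_i), y_i)."""
    return sum(loss(f(x), y) for x, y in training_set) / len(training_set)

def erm(hypothesis_space, training_set, loss=square_loss):
    """Return the candidate function with the smallest empirical risk."""
    return min(hypothesis_space, key=lambda f: empirical_risk(f, training_set, loss))

# Hypothesis space: lines y = w * x for a handful of slopes w.
slopes = [0.0, 0.5, 1.0, 1.5, 2.0]
hypothesis_space = [lambda x, w=w: w * x for w in slopes]  # bind each w now

training_set = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2)]
f_S = erm(hypothesis_space, training_set)
print(f_S(10.0), empirical_risk(f_S, training_set))
```

Here the slope $w = 1$ wins. A real hypothesis space is usually infinite, so the minimum is found by optimization rather than enumeration.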

The choice of loss function is a determining factor in which function the learning algorithm selects, and it also affects the convergence rate of the algorithm.[5]

Different loss functions are used depending on whether the problem is one of regression or one of classification.

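For regression, the most common choice is the square loss, $V(f(\mathbf{x}), y) = (y - f(\mathbf{x}))^2$. This familiar loss function is used in ordinary least squares regression.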
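A minimal sketch of the square loss next to the 0-1 loss, a common classification loss added here for contrast. The final lines use a grid search over hypothetical outputs to illustrate a standard property of the square loss: the constant prediction that minimizes the average square loss is the mean of the outputs.

```python
# Minimal sketch of two loss functions V(f(x), y).

def square_loss(prediction, y):
    """Square (L2) loss used in ordinary least squares: (y - f(x))**2."""
    return (y - prediction) ** 2

def zero_one_loss(prediction, y):
    """0-1 loss for classification: 1 if the predicted label is wrong, else 0."""
    return 0 if prediction == y else 1

# Regression: a prediction of 2.5 when the true value is 3.0.
print(square_loss(2.5, 3.0))
# Classification: predicting "cat" when the true label is "dog".
print(zero_one_loss("cat", "dog"))

# The best constant prediction under the square loss is the mean of the
# outputs (here 3.0); a grid search over candidate constants recovers it.
ys = [1.0, 2.0, 6.0]
grid = [c / 100 for c in range(0, 701)]  # candidate constants 0.00 ... 7.00
best_c = min(grid, key=lambda c: sum(square_loss(c, y) for y in ys))
print(best_c)
```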

Because learning is a prediction problem, the goal is not to find a function that most closely fits the (previously observed) data, but to find one that will most accurately predict output from future input.

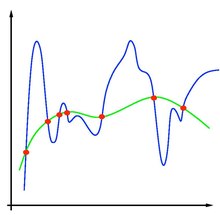

Empirical risk minimization runs the risk of overfitting: finding a function that matches the training data exactly but does not predict future output well.

Overfitting is symptomatic of unstable solutions; a small perturbation in the training set data would cause a large variation in the learned function.
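Both effects can be reproduced with a few hypothetical data points scattered around the line $y = x$. The sketch below compares a polynomial that passes through every training point (built in Lagrange form, an illustrative choice) with an ordinary least-squares line. The polynomial reaches zero empirical risk, yet changing a single training output by 0.3 moves its prediction at $x = 6$ by 1.8, compared with 0.2 for the line.

```python
# Minimal sketch of overfitting and instability on hypothetical data.

def lagrange_interpolant(points):
    """Return the polynomial passing exactly through all (x, y) points."""
    def f(x):
        total = 0.0
        for i, (xi, yi) in enumerate(points):
            term = yi
            for j, (xj, _) in enumerate(points):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return f

def least_squares_line(points):
    """Return the line a + b*x minimizing the mean square loss."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return lambda x: a + b * x

def mean_square_loss(f, points):
    return sum((y - f(x)) ** 2 for x, y in points) / len(points)

# Hypothetical training data: y = x plus small made-up noise.
train = [(0.0, 0.1), (1.0, 0.8), (2.0, 2.2), (3.0, 2.9), (4.0, 4.1), (5.0, 4.8)]
# The same data with the last output perturbed by 0.3.
perturbed = train[:5] + [(5.0, 5.1)]

poly, line = lagrange_interpolant(train), least_squares_line(train)
print("training risk, polynomial:", mean_square_loss(poly, train))
print("training risk, line:      ", mean_square_loss(line, train))

# Stability: how far does each learned function move at x = 6 when one
# training output changes slightly?
x_new = 6.0
poly_shift = abs(lagrange_interpolant(perturbed)(x_new) - poly(x_new))
line_shift = abs(least_squares_line(perturbed)(x_new) - line(x_new))
print("shift at x = 6, polynomial:", poly_shift)
print("shift at x = 6, line:      ", line_shift)
```

Restricting the hypothesis space to lines gives up the perfect training fit, but it makes the learned function far less sensitive to the noise in any one training point.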

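Overfitting can be countered by regularization. Regularization can be accomplished by restricting the hypothesis space $\mathcal{H}$, for example to linear functions or to polynomials of degree at most $p$.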

Restricting the hypothesis space avoids overfitting because it limits the form of the candidate functions, which rules out choosing a function whose empirical risk is arbitrarily close to zero.

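Another example of regularization is Tikhonov regularization, which minimizes
$$\frac{1}{n} \sum_{i=1}^{n} V(f(\mathbf{x}_i), y_i) + \gamma \|f\|_{\mathcal{H}}^2,$$
where $V$ is the loss function, $(\mathbf{x}_i, y_i)$ are the $n$ training pairs, and $\gamma > 0$ is a fixed regularization parameter. Tikhonov regularization ensures existence, uniqueness, and stability of the solution.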
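For the simplest case, assume the hypothesis space is the set of linear functions $f(x) = wx$ on the real line with $\|f\|_{\mathcal{H}}^2 = w^2$, and the loss is the square loss. Setting the derivative of the Tikhonov objective to zero gives the closed form $w = \sum_i x_i y_i / (\sum_i x_i^2 + n\gamma)$. The sketch shows the slope shrinking toward zero as $\gamma$ grows, and a degenerate training set (all inputs zero) for which unregularized empirical risk minimization has no unique solution, while any $\gamma > 0$ selects $w = 0$. The function names are hypothetical.

```python
# Minimal sketch of Tikhonov regularization for f(x) = w * x, ||f||^2 = w**2,
# and the square loss. Minimizing
#     (1/n) * sum_i (y_i - w * x_i)**2 + gamma * w**2
# gives w = sum(x*y) / (sum(x*x) + n*gamma).

def tikhonov_slope(points, gamma):
    n = len(points)
    sxy = sum(x * y for x, y in points)
    sxx = sum(x * x for x, _ in points)
    denominator = sxx + n * gamma
    if denominator == 0:
        # gamma = 0 and every x_i = 0: every w has the same empirical risk,
        # so there is no unique minimizer.
        raise ValueError("no unique minimizer without regularization")
    return sxy / denominator

def objective(w, points, gamma):
    """The regularized objective being minimized, for checking the solution."""
    n = len(points)
    return sum((y - w * x) ** 2 for x, y in points) / n + gamma * w * w

points = [(1.0, 1.2), (2.0, 1.8), (3.0, 3.3)]
for gamma in (0.0, 0.1, 1.0):
    print(gamma, tikhonov_slope(points, gamma))

# Degenerate inputs: any gamma > 0 picks out the unique solution w = 0.
degenerate = [(0.0, 1.0), (0.0, -2.0)]
print(tikhonov_slope(degenerate, 0.5))
```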

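For a fixed binary classifier $f: X \to \{0, 1\}$ under the 0-1 loss, we can apply Hoeffding's inequality to show that the deviation of the empirical risk $I_S[f]$ from the expected risk $I[f]$ is sub-Gaussian:
$$\mathbb{P}\left(|I_S[f] - I[f]| \ge \epsilon\right) \le 2e^{-2n\epsilon^2},$$
where $n$ is the number of training samples.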
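The bound can be checked numerically. Under the 0-1 loss, a fixed classifier's loss on each independent training example is a Bernoulli random variable whose mean is the true risk, so the empirical risk is a sample mean. The minimal simulation below assumes a hypothetical true risk of 0.3. It compares how often the empirical risk on $n = 100$ samples deviates by at least $\epsilon = 0.1$ with the Hoeffding bound $2e^{-2n\epsilon^2}$.

```python
import math
import random

# Monte Carlo check of Hoeffding's inequality for one fixed binary classifier.
# Each training example contributes an independent Bernoulli(p) loss, where
# p is the classifier's true risk (0.3 here, a hypothetical value).

def deviation_frequency(p, n, epsilon, trials, rng):
    """Fraction of simulated training sets whose empirical risk deviates from p by >= epsilon."""
    hits = 0
    for _ in range(trials):
        empirical_risk = sum(rng.random() < p for _ in range(n)) / n
        if abs(empirical_risk - p) >= epsilon:
            hits += 1
    return hits / trials

def hoeffding_bound(n, epsilon):
    """Right-hand side of Hoeffding's inequality: 2 * exp(-2 * n * epsilon**2)."""
    return 2 * math.exp(-2 * n * epsilon ** 2)

rng = random.Random(0)  # fixed seed for reproducibility
n, epsilon = 100, 0.1
observed = deviation_frequency(0.3, n, epsilon, trials=2000, rng=rng)
print(f"observed {observed:.3f} <= bound {hoeffding_bound(n, epsilon):.3f}")
```

The observed frequency typically lands well below the bound, which holds for every distribution of losses in $[0, 1]$ and is therefore loose for any particular one.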

In empirical risk minimization, however, the classifier is not given in advance; we must choose it using the training data.

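Therefore, a more useful result bounds the probability that the largest deviation over the whole class $\mathcal{F}$ of candidate classifiers exceeds $\epsilon$. One such result, the Vapnik–Chervonenkis inequality, states (with $I_S[f]$ the empirical risk and $I[f]$ the expected risk)
$$\mathbb{P}\left(\sup_{f \in \mathcal{F}} |I_S[f] - I[f]| > \epsilon\right) \le 8\, S(\mathcal{F}, n)\, e^{-n\epsilon^2/32},$$
where $S(\mathcal{F}, n)$ is the shattering number: the largest number of distinct labelings that classifiers in $\mathcal{F}$ can assign to $n$ points. For a class of VC dimension $d$, $S(\mathcal{F}, n) \le (n+1)^d$.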

The exponential term comes from Hoeffding's inequality, but taking the supremum over the whole class incurs an extra cost, which is the shattering number.
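To make the shattering number concrete, consider the hypothetical class of one-dimensional threshold classifiers, $f_t(x) = 1$ if $x \ge t$ and $0$ otherwise. On $n$ distinct points they produce only $n + 1$ distinct labelings, fewer than the $2^n$ possible labelings once $n \ge 2$, so the shattering number grows polynomially. The sketch counts the labelings by brute force and evaluates the bound $8\, S(\mathcal{F}, n)\, e^{-n\epsilon^2/32}$ for this class. The constants follow one standard statement of the Vapnik–Chervonenkis inequality; other statements use different constants.

```python
import math

# Shattering number of 1-D threshold classifiers f_t(x) = 1 if x >= t else 0,
# counted by brute force, plus the resulting VC-style uniform deviation bound.

def threshold_labelings(points):
    """Set of distinct labelings of `points` produced by threshold classifiers."""
    # A threshold at each point, plus one above the maximum (all zeros),
    # realizes every labeling this class can produce.
    candidate_thresholds = list(points) + [max(points) + 1.0]
    return {tuple(int(x >= t) for x in points) for t in candidate_thresholds}

def vc_bound(shattering_number, n, epsilon):
    """Right-hand side 8 * S(F, n) * exp(-n * epsilon**2 / 32)."""
    return 8 * shattering_number * math.exp(-n * epsilon ** 2 / 32)

for n in range(1, 6):
    points = [float(i) for i in range(n)]
    print(n, len(threshold_labelings(points)))  # prints n + 1

# With S(F, n) = n + 1 the bound exceeds 1 for moderate n, but it decays once
# the exponential term outweighs the polynomial growth of the shattering number.
for n in (1_000, 100_000):
    print(n, vc_bound(n + 1, n, epsilon=0.1))
```

For this class the bound is vacuous (greater than 1) at $n = 1000$ but falls below $10^{-6}$ at $n = 100{,}000$, showing how the sample size needed for a uniform guarantee depends on the richness of the class.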