String (computer science)

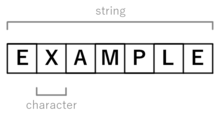

In computer programming, a string is traditionally a sequence of characters, either as a literal constant or as some kind of variable.

The term string may also denote more general arrays or other sequence (or list) data types and structures.

Depending on the programming language and precise data type used, a variable declared to be a string may either cause storage in memory to be statically allocated for a predetermined maximum length or employ dynamic allocation to allow it to hold a variable number of elements.[1]

In formal languages, which are used in mathematical logic and theoretical computer science, a string is a finite sequence of symbols that are chosen from a set called an alphabet.[4]

Use of the word "string" to mean any items arranged in a line, series or succession dates back centuries.

Logographic languages such as Chinese, Japanese, and Korean (known collectively as CJK) need far more than 256 characters (the limit of an encoding that uses one 8-bit byte per character) for reasonable representation.

Using multibyte encodings for these languages with existing code that assumed one byte per character led to problems with matching and cutting strings, the severity of which depended on how the character encoding was designed.

Unicode's preferred byte stream format UTF-8 is designed not to have the problems described above for older multibyte encodings.
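One of the "cutting" problems is truncating a byte buffer in the middle of a multibyte character. In UTF-8, continuation bytes always have the bit pattern 10xxxxxx, so a cut point can be moved back to a character boundary by inspecting bytes alone, with no need to decode from the start. The Python sketch below assumes the input is valid UTF-8; the function name `utf8_truncate` is hypothetical.

```python
def utf8_truncate(data: bytes, max_bytes: int) -> bytes:
    """Return a prefix of `data` of at most `max_bytes` bytes that does not
    split a UTF-8 sequence. Assumes `data` is valid UTF-8."""
    if max_bytes < 0:
        raise ValueError("max_bytes must be non-negative")
    if len(data) <= max_bytes:
        return data
    cut = max_bytes
    # Continuation bytes match 10xxxxxx (0x80-0xBF). Back up until the
    # byte at the cut position is the first byte of a character.
    while cut > 0 and (data[cut] & 0xC0) == 0x80:
        cut -= 1
    return data[:cut]


encoded = "a\u6f22\u5b57".encode("utf-8")  # "a漢字": 1 + 3 + 3 = 7 bytes
print(utf8_truncate(encoded, 5).decode("utf-8"))  # a漢
```

In older double-byte encodings, where trail-byte values can also occur as lead bytes or ASCII characters, the same local check is not possible in general.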

Many high-level languages, such as JavaScript and PHP, provide strings as a primitive data type, while others provide them as a composite data type, some with special language support for writing literals (for example, Java and C#).

Older string implementations were designed to work with the repertoire and encoding defined by ASCII, or with more recent extensions such as the ISO 8859 series.

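Most string implementations are very similar to variable-length arrays whose entries store the character codes of the corresponding characters. The principal difference is that, with certain encodings, a single logical character may take up more than one entry in the array.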
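To make the "more than one entry per character" point concrete, the Python sketch below counts the same text three ways: as Unicode code points, as UTF-8 bytes, and as UTF-16 code units. It uses only the standard `str.encode` method; the helper name `unit_counts` is hypothetical, and Python's own internal `str` layout is not what is being measured.

```python
def unit_counts(s: str) -> tuple:
    """Return (code points, UTF-8 bytes, UTF-16 code units) for s."""
    utf8_len = len(s.encode("utf-8"))
    # "utf-16-le" emits no byte-order mark; each code unit is 2 bytes.
    utf16_units = len(s.encode("utf-16-le")) // 2
    return len(s), utf8_len, utf16_units


sample = "na\u00efve \U0001F600"  # "naïve 😀"
print(unit_counts(sample))  # (7, 11, 8)
```

Here "ï" takes two UTF-8 bytes, and the emoji takes four UTF-8 bytes or two UTF-16 code units (a surrogate pair), so array indexes and character positions diverge.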

A null-terminated representation of an n-character string takes n + 1 units of space (one for the terminator) and is thus an implicit data structure.
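The null-terminated layout can be sketched in Python with `bytes`, as a simulation of C memory rather than real C code. Because the length is not stored, it must be recovered by scanning for the terminator, as C's `strlen` does. The helper names are hypothetical, and the terminator value is a parameter so that other sentinel bytes can be tried.

```python
def c_strlen(buf: bytes, start: int = 0, terminator: int = 0) -> int:
    """Count bytes from `start` up to (not including) the first terminator.
    Raises IndexError if no terminator is found."""
    n = 0
    while buf[start + n] != terminator:
        n += 1
    return n


def make_c_string(text: str) -> bytes:
    """Encode `text` as ASCII plus one NUL: n characters take n + 1 bytes."""
    data = text.encode("ascii")
    if 0 in data:
        raise ValueError("a NUL inside the text would end the string early")
    return data + b"\x00"


s = make_c_string("hello")
print(len(s), c_strlen(s))  # 6 5
```

The scan makes finding the length O(n), and the terminator value can never appear inside the string itself.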

Special terminating bytes other than null have historically appeared in both hardware and software, sometimes with a value that was also a printing character.

Somewhat similarly, "data processing" machines such as the IBM 1401 used a special word mark bit to delimit strings at the left, where the operation would start at the right.

This meant that, although the IBM 1401 had a seven-bit word, almost no one ever thought to use this as a feature and override the assignment of the seventh bit to (for example) handle ASCII codes.

Early microcomputer software relied upon the fact that ASCII codes do not use the high-order bit, and set it to indicate the end of a string.
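A minimal Python sketch of the high-bit convention follows. Each string is stored as 7-bit ASCII, and bit 7 is set on its last byte, so no separate terminator or length byte is needed. The function names and the packed keyword table (`"LIST"`, `"RUN"`) are hypothetical illustrations, not taken from any particular system. Note that an empty string cannot be represented this way.

```python
from typing import Tuple


def encode_hibit(text: str) -> bytes:
    """Encode a non-empty ASCII string, marking its last byte with bit 7."""
    data = bytearray(text.encode("ascii"))  # raises for non-ASCII text
    if not data:
        raise ValueError("an empty string has no byte to mark")
    data[-1] |= 0x80
    return bytes(data)


def decode_hibit(buf: bytes, start: int = 0) -> Tuple[str, int]:
    """Decode one high-bit-terminated string beginning at `start`.
    Returns the text and the offset just past its last byte."""
    chars = []
    i = start
    while True:
        b = buf[i]
        chars.append(chr(b & 0x7F))  # strip the end-of-string marker bit
        i += 1
        if b & 0x80:
            return "".join(chars), i


packed = encode_hibit("LIST") + encode_hibit("RUN")
word, nxt = decode_hibit(packed)
print(word, decode_hibit(packed, nxt)[0])  # LIST RUN
```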

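A classic P-string (Pascal-style string) stores its length in a single leading byte, which limits it to 255 characters. To avoid this limitation, improved implementations of P-strings use 16-, 32-, or 64-bit words to store the string length.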
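A length-prefixed string can be sketched with Python's standard `struct` module. The prefix width (1, 2, 4, or 8 bytes) selects the maximum length, and because the length is explicit, the payload may contain NUL bytes. The little-endian byte order and the function names are assumptions made for this illustration.

```python
import struct

# struct formats for an unsigned length prefix of 1, 2, 4, or 8 bytes.
# Little-endian ("<") is an arbitrary choice for this sketch.
_PREFIX = {1: "<B", 2: "<H", 4: "<I", 8: "<Q"}


def pack_pstring(data: bytes, width: int = 4) -> bytes:
    """Prefix `data` with its length stored in `width` bytes."""
    if len(data) >= 1 << (8 * width):
        raise ValueError(f"{len(data)} bytes do not fit a {width}-byte length")
    return struct.pack(_PREFIX[width], len(data)) + data


def unpack_pstring(buf: bytes, width: int = 4) -> bytes:
    """Read the length prefix, then return exactly that many bytes."""
    (length,) = struct.unpack_from(_PREFIX[width], buf, 0)
    return buf[width:width + length]


print(pack_pstring(b"hi", width=1))             # b'\x02hi'
print(unpack_pstring(pack_pstring(b"a\x00b")))  # b'a\x00b'
```

With a one-byte prefix, a 256-byte payload is rejected, while a two-byte prefix accepts it.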

It is possible to create data structures, and functions that manipulate them, that avoid the problems associated with character termination and can in principle overcome length-code bounds.

The core data structure in a text editor is the one that manages the string (sequence of characters) that represents the current state of the file being edited.

While that state could be stored in a single long consecutive array of characters, a typical text editor instead uses an alternative representation as its sequence data structure—a gap buffer, a linked list of lines, a piece table, or a rope—which makes certain string operations, such as insertions, deletions, and undoing previous edits, more efficient.
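Of the structures listed, the gap buffer is the simplest to sketch. The text lives in one array with an unused "gap" at the editing position. Inserting at the gap just fills it, deleting just widens it, and only moving the cursor copies characters across. The Python class below is a minimal illustration with hypothetical names; it omits undo and uses a Python list rather than a raw character array.

```python
class GapBuffer:
    """Text stored in one list with an unused gap at the edit position.
    Characters live in buf[:gap_start] and buf[gap_end:]."""

    def __init__(self, text: str = "", gap: int = 8) -> None:
        self.buf = list(text) + [""] * gap
        self.gap_start = len(text)
        self.gap_end = len(self.buf)

    def _move_gap(self, pos: int) -> None:
        # Shift characters across the gap one at a time until it starts at pos.
        while pos < self.gap_start:
            self.gap_start -= 1
            self.gap_end -= 1
            self.buf[self.gap_end] = self.buf[self.gap_start]
        while pos > self.gap_start:
            self.buf[self.gap_start] = self.buf[self.gap_end]
            self.gap_start += 1
            self.gap_end += 1

    def _grow(self) -> None:
        # Open max(8, current size) empty slots so later inserts stay cheap.
        extra = max(8, len(self.buf))
        self.buf[self.gap_end:self.gap_end] = [""] * extra
        self.gap_end += extra

    def insert(self, pos: int, text: str) -> None:
        self._move_gap(pos)
        for ch in text:
            if self.gap_start == self.gap_end:
                self._grow()
            self.buf[self.gap_start] = ch
            self.gap_start += 1

    def delete(self, pos: int, count: int) -> None:
        # Deleting after the gap only widens it; no characters are copied.
        self._move_gap(pos)
        self.gap_end = min(self.gap_end + count, len(self.buf))

    def text(self) -> str:
        return "".join(self.buf[:self.gap_start] + self.buf[self.gap_end:])


buf = GapBuffer("hello world")
buf.insert(5, ",")
buf.delete(0, 1)
buf.insert(0, "H")
print(buf.text())  # Hello, world
```

Repeated edits near the same position are cheap because the gap is already there; the cost is paid only when the cursor jumps far away.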

Performing limited or no validation of user input can cause a program to be vulnerable to code injection attacks.
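SQL injection is a common instance. The Python sketch below uses the standard `sqlite3` module with an in-memory database; the table, its rows, and the function names are made up for illustration. Pasting user input into the SQL text lets a crafted string change the query, whereas a `?` placeholder passes the input purely as data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("bob", 1)])


def find_user_unsafe(name: str) -> list:
    # Vulnerable: the input is pasted into the SQL text, so quote
    # characters in `name` can change the structure of the query.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list:
    # The ? placeholder binds `name` as a value; it is never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2
print(find_user_safe(payload))         # []
```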

Sometimes, strings need to be embedded inside a text file that is both human-readable and intended for consumption by a machine.

In this case, the NUL character does not work well as a terminator since it is normally invisible (non-printable) and is difficult to input via a keyboard.
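A common alternative is to surround the string with visible delimiters, such as double quotes, and escape any delimiter or newline that occurs inside it. The Python sketch below implements a minimal scheme of this kind. The function names and the choice of escapes (`\\`, `\"`, `\n`) are illustrative assumptions, not a specific file format's rules.

```python
def quote(s: str) -> str:
    """Wrap s in double quotes, escaping characters that would break the format."""
    # Escape the backslash first so the escapes added afterwards are not doubled.
    body = s.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return '"' + body + '"'


def unquote(q: str) -> str:
    """Invert quote(): strip the delimiters and undo the escapes."""
    if len(q) < 2 or q[0] != '"' or q[-1] != '"':
        raise ValueError("not a quoted string")
    body, out, i = q[1:-1], [], 0
    while i < len(body):
        ch = body[i]
        if ch == "\\":
            nxt = body[i + 1]
            out.append("\n" if nxt == "n" else nxt)
            i += 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


original = 'say "hi"\nC:\\temp'
line = quote(original)
print(line)                       # "say \"hi\"\nC:\\temp"
print(unquote(line) == original)  # True
```

The quoted form stays on one line and contains only printable characters, so it can be typed and read by a person.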

Non-textual binary data, such as a byte string read from a communications medium, may or may not be represented by a string-specific datatype, depending on the needs of the application, the desire of the programmer, and the capabilities of the programming language being used.

In C, a byte string stored in a character array is often not null-terminated. Using C string handling functions on such an array often seems to work, but later leads to security problems.
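The failure can be simulated in Python, which is itself memory-safe, by modeling memory as a `bytearray`. A `strlen`-style scan on a buffer without a terminator runs into whatever bytes follow it. A bounded scan, similar in spirit to POSIX `strnlen`, stops at the buffer's size. The memory layout and function names are hypothetical.

```python
def strlen_at(memory: bytes, start: int) -> int:
    """Scan for a NUL from `start`, as C's strlen does, ignoring buffer bounds."""
    n = 0
    while memory[start + n] != 0:
        n += 1
    return n


def strnlen_at(memory: bytes, start: int, max_len: int) -> int:
    """Like strlen_at, but never look past start + max_len."""
    end = memory.find(0, start, start + max_len)
    return max_len if end == -1 else end - start


# Simulated memory: a 3-byte buffer holding b"abc" with no terminator,
# immediately followed by unrelated data that happens to end in a NUL.
memory = bytearray(b"abc" + b"SECRET\x00")
buffer_start, buffer_size = 0, 3

n = strlen_at(memory, buffer_start)
print(n, bytes(memory[buffer_start:buffer_start + n]))  # 9 b'abcSECRET'
print(strnlen_at(memory, buffer_start, buffer_size))    # 3
```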

The name stringology was coined in 1984 by computer scientist Zvi Galil for the theory of algorithms and data structures used for string processing.

Some APIs, such as the Multimedia Control Interface, embedded SQL, and printf, use strings to hold commands that will be interpreted.
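Python's `%` operator implements printf-style formatting, so it can illustrate how a format string acts as a small program. Each conversion specification is an instruction that is interpreted at run time. In C, passing untrusted data as the format argument of printf is a well-known vulnerability. The Python analogue shown at the end merely raises an error.

```python
# Each %-specification in the template is an instruction to the formatter:
#   %-6s    left-justify a string in a 6-character field
#   %05.1f  zero-pad a float to width 5 with one decimal place
#   %x      print an integer in lowercase hexadecimal
template = "%-6s|%05.1f|%x"
result = template % ("ab", 3.14159, 255)
print(result)  # ab    |003.1|ff

# Because the template is interpreted, its contents determine what is read:
# more specifications than arguments is detected only at run time.
try:
    "%s %s" % ("only one",)
except TypeError as exc:
    print("format error:", exc)
```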

Many scripting languages, including Perl, Python, Ruby, and Tcl, employ regular expressions to facilitate text operations.
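As a small example in one of the languages named, Python's standard `re` module can both find and rewrite text that matches a pattern. The sample sentence and the date pattern are illustrative only.

```python
import re

text = "Released 2023-04-01, patched 2023-05-17."

# findall returns every non-overlapping match of the pattern.
dates = re.findall(r"\d{4}-\d{2}-\d{2}", text)
print(dates)  # ['2023-04-01', '2023-05-17']

# sub rewrites each match; \1, \2, \3 refer to the capture groups.
reordered = re.sub(r"(\d{4})-(\d{2})-(\d{2})", r"\3/\2/\1", text)
print(reordered)  # Released 01/04/2023, patched 17/05/2023.
```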

Some microprocessors' instruction set architectures contain direct support for string operations, such as block copy (e.g.

For the example alphabet {0, 1}, the shortlex order is ε < 0 < 1 < 00 < 01 < 10 < 11 < 000 < 001 < 010 < 011 < 100 < 101 < 110 < 111 < 0000 < 0001 < 0010 < 0011 < ... < 1111 < 00000 < 00001 < ...

A number of additional operations on strings commonly occur in the formal theory.
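Shortlex order (by length first, then lexicographically within each length) can be generated directly with the standard `itertools` module. The Python sketch below enumerates strings over a given alphabet in that order. It also shows the equivalent sort key, which is valid only when the alphabet's intended order matches character code order, as it does for "0" < "1". The function names are hypothetical.

```python
from itertools import count, islice, product


def shortlex(alphabet: str):
    """Yield every string over `alphabet` in shortlex order: by length,
    then lexicographically within each length (alphabet order as given)."""
    for n in count(0):
        # product(..., repeat=n) yields length-n tuples in lexicographic order;
        # for n == 0 it yields one empty tuple, i.e. the empty string.
        for letters in product(alphabet, repeat=n):
            yield "".join(letters)


print(list(islice(shortlex("01"), 15)))
# ['', '0', '1', '00', '01', '10', '11', '000', '001', '010', '011',
#  '100', '101', '110', '111']


def shortlex_key(s: str):
    # Compare lengths first, then the strings themselves.
    return (len(s), s)


print(sorted(["10", "0", "", "111", "01"], key=shortlex_key))  # ['', '0', '01', '10', '111']
```

Unlike plain lexicographic order, shortlex puts every string at a finite position, which is why the enumeration above never gets stuck on an infinite run such as 0, 00, 000, ...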