Graphics card

Applications of general-purpose computing on graphics cards include AI training, cryptocurrency mining, and molecular simulation.

In the late 1980s, advancements in personal computing led companies like Radius to develop specialized graphics cards for the Apple Macintosh II.

The evolution of graphics processing took a major leap forward in the mid-1990s with 3dfx Interactive's introduction of the Voodoo series, one of the earliest consumer-facing GPUs that supported 3D acceleration.

This innovation simplified the hardware requirements for end-users, as they no longer needed separate cards for 2D and 3D rendering, thus paving the way for the widespread adoption of more powerful and versatile GPUs in personal computers.

Today, most graphics cards are built using chips from two dominant manufacturers: AMD and Nvidia.

Additionally, many graphics cards now have integrated sound capabilities, allowing them to transmit audio alongside video output to connected TVs or monitors with built-in speakers, further enhancing the multimedia experience.

[7] The term "AIB" emphasizes the modular nature of these components, as they are typically added to a computer's motherboard to enhance its graphical capabilities.

The evolution from the early days of separate 2D and 3D cards to today's integrated and multifunctional GPUs reflects the ongoing technological advancements and the increasing demand for high-quality visual and multimedia experiences in computing.

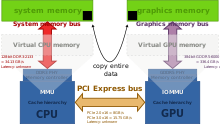

On the other hand, a graphics card has its own random-access memory (RAM), cooling system, and dedicated power regulators.

[8][9] Both AMD and Intel have introduced CPUs and motherboard chipsets which support the integration of a GPU into the same die as the CPU.

[11] When tested with video games, the GeForce RTX 2080 Ti Founders Edition averaged 300 watts of power consumption.

Larger configurations use water cooling or immersion cooling to achieve proper performance without thermal throttling.

[28] SLI and Crossfire have become increasingly uncommon: most games do not fully utilize multiple GPUs, because most users cannot afford them.

[29][30][31] Multiple GPUs are still used in supercomputers (such as Summit), in workstations to accelerate video[32][33][34] and 3D rendering,[35][36][37][38][39] for visual effects,[40][41] for simulations,[42] and for training artificial intelligence.

A graphics driver usually supports one or multiple cards by the same vendor and has to be written for a specific operating system.

Additionally, the operating system or an extra software package may provide certain programming APIs for applications to perform 3D rendering.

At the same time, graphics card sales have grown within the high-end segment, as manufacturers have shifted their focus to prioritize the gaming and enthusiast market.

[45][46] Beyond the gaming and multimedia segments, graphics cards have been increasingly used for general-purpose computing, such as big data processing.

In January 2018, mid- to high-end graphics cards experienced a major surge in price, with many retailers facing stock shortages due to significant demand in this market.

These include:

A graphics processing unit (GPU), also occasionally called a visual processing unit (VPU), is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the building of images in a frame buffer intended for output to a display.

The video BIOS or firmware contains a minimal program for the initial setup and control of the graphics card.

It does not support YUV to RGB translation, video scaling, pixel copying, compositing or any of the multitude of other 2D and 3D features of the graphics card, which must be accessed by software drivers.
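As an illustration of what one of these driver-level features involves, the following is a minimal sketch of YUV to RGB translation for a single pixel. It assumes full-range 8-bit samples and the BT.601 coefficients used by JFIF; the function names `clamp8` and `yuv_to_rgb` are hypothetical, and real hardware and drivers may use other matrices, value ranges, and chroma subsampling.

```python
def clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y, u, v):
    """Convert one full-range 8-bit YUV (YCbCr, BT.601/JFIF) sample to RGB.

    Assumption: u and v are stored with an offset of 128, so 128 means
    "no color difference".
    """
    cb = u - 128
    cr = v - 128
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return clamp8(r), clamp8(g), clamp8(b)


# With neutral chroma (u = v = 128) the result is a shade of gray.
print(yuv_to_rgb(128, 128, 128))
print(yuv_to_rgb(255, 128, 128))
```

A graphics card performs this kind of per-pixel arithmetic in hardware for every pixel of every video frame, which is why it is exposed through drivers rather than through the minimal video BIOS.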

In addition to the screen image, video memory may store other data, such as the Z-buffer, which manages the depth coordinates in 3D graphics, as well as textures, vertex buffers, and compiled shader programs.
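The role of the Z-buffer can be sketched in a few lines. The example below is a simplified software model, not how any particular GPU implements it: the buffer sizes, the helper names `make_buffers` and `write_fragment`, and the convention that a smaller depth value means "closer to the viewer" are all assumptions made for illustration.

```python
WIDTH, HEIGHT = 4, 3
FAR = float("inf")  # assumed "nothing drawn yet" depth value


def make_buffers(width, height, clear_color=(0, 0, 0)):
    """Create a color buffer (the screen image) and a matching depth buffer."""
    color = [[clear_color for _ in range(width)] for _ in range(height)]
    depth = [[FAR for _ in range(width)] for _ in range(height)]
    return color, depth


def write_fragment(color, depth, x, y, z, rgb):
    """Store rgb at (x, y) only if z is nearer than the depth already stored."""
    if z < depth[y][x]:
        depth[y][x] = z
        color[y][x] = rgb
        return True
    return False


color, depth = make_buffers(WIDTH, HEIGHT)
write_fragment(color, depth, 1, 1, 5.0, (255, 0, 0))  # red surface, far away
write_fragment(color, depth, 1, 1, 2.0, (0, 255, 0))  # green surface, nearer: replaces red
write_fragment(color, depth, 1, 1, 9.0, (0, 0, 255))  # blue surface, farther: rejected
print(color[1][1], depth[1][1])  # (0, 255, 0) 2.0
```

Because the depth buffer holds one value per pixel, it occupies video memory on the same order as the screen image itself, which is one reason video memory is used for more than the displayed frame.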

Due to the growing popularity of digital computer displays and its integration onto the GPU die, the RAMDAC has mostly disappeared as a discrete component.

These require a RAMDAC, but they convert the analog signal back to digital before they can display it, with the unavoidable loss of quality stemming from this digital-to-analog-to-digital conversion.
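The loss from a digital-to-analog-to-digital round trip can be illustrated with a toy model. The sketch below assumes 8-bit pixel values and a nominal 0.7 V full-scale analog level; the gain and offset errors standing in for cable and circuit imperfections are made-up values, and the function names are hypothetical.

```python
FULL_SCALE_V = 0.7  # assumed nominal full-scale analog video level
LEVELS = 256        # 8-bit pixel values


def dac(code):
    """Convert a digital pixel value (0..255) to an analog voltage."""
    return code / (LEVELS - 1) * FULL_SCALE_V


def adc(voltage):
    """Convert an analog voltage back to the nearest digital pixel value."""
    code = round(voltage / FULL_SCALE_V * (LEVELS - 1))
    return max(0, min(LEVELS - 1, code))


def round_trip(code, gain=1.0, offset_v=0.0):
    """Send a pixel value through the DAC, an imperfect analog path, and the ADC."""
    return adc(dac(code) * gain + offset_v)


codes = range(LEVELS)
ideal_errors = sum(1 for c in codes if round_trip(c) != c)
# Hypothetical imperfections: 2% signal attenuation and a 5 mV offset.
lossy_errors = sum(1 for c in codes if round_trip(c, gain=0.98, offset_v=0.005) != c)
print(ideal_errors, lossy_errors)
```

In this model a perfect analog path returns every value unchanged, while even small gain and offset errors shift many pixel values by one or more steps; a purely digital link avoids this step entirely.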

With the VGA standard being phased out in favor of digital formats, RAMDACs have started to disappear from graphics cards.

Most manufacturers include a DVI-I connector, which allows (via a simple adapter) standard RGB signal output to an older CRT or LCD monitor with a VGA input.

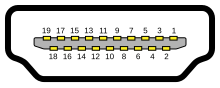

The interface is primarily used to connect a video source to a display device such as a computer monitor, though it can also be used to transmit audio, USB, and other forms of data.

Backward compatibility with VGA and DVI through adapter dongles enables consumers to use DisplayPort-fitted video sources without replacing existing display devices.

[55][56] Chronologically, connection systems between graphics card and motherboard were, mainly:

The following table is a comparison between features of some interfaces listed above.