Data compression

[8] LZ methods use a table-based compression model where table entries are substituted for repeated strings of data.
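As an illustration of the table-based idea, the following is a minimal sketch of LZW, one member of the LZ family. It is not taken from the sources cited here; the function names `lzw_compress` and `lzw_decompress` are hypothetical, and the code table is left unbounded for simplicity, whereas practical implementations cap its size.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Replace repeated byte strings with integer codes from a growing table."""
    # The table starts with one entry per possible byte value.
    table = {bytes([i]): i for i in range(256)}
    next_code = 256
    current = b""
    out = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            # Keep extending the match while it is still in the table.
            current = candidate
        else:
            # Emit the longest known string, then learn the new one.
            out.append(table[current])
            table[candidate] = next_code
            next_code += 1
            current = bytes([byte])
    if current:
        out.append(table[current])
    return out


def lzw_decompress(codes: list[int]) -> bytes:
    """Rebuild the same table on the fly and expand codes back to bytes."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == next_code:
            # The code refers to the entry that is about to be created.
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        out.append(entry)
        table[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(out)
```

The decompressor never receives the table; it reconstructs it from the code stream, which is why only the codes need to be stored or transmitted.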

[9] In a further refinement of the direct use of probabilistic modelling, statistical estimates can be coupled to an algorithm called arithmetic coding.
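The coupling of probability estimates to arithmetic coding can be sketched as follows. This is a toy version under stated assumptions: it uses exact `Fraction` arithmetic instead of the fixed-precision integer arithmetic of practical coders, it assumes a fixed probability table, and the decoder is told the message length. The names `build_intervals`, `encode` and `decode` are illustrative only.

```python
from fractions import Fraction


def build_intervals(probs):
    """Assign each symbol a sub-interval of [0, 1) sized by its probability."""
    intervals = {}
    low = Fraction(0)
    for symbol, p in probs.items():
        intervals[symbol] = (low, low + p)
        low += p
    return intervals


def encode(message, probs):
    """Narrow [0, 1) once per symbol and return one number inside the result."""
    intervals = build_intervals(probs)
    low, width = Fraction(0), Fraction(1)
    for symbol in message:
        s_low, s_high = intervals[symbol]
        low = low + width * s_low
        width = width * (s_high - s_low)
    # Any number in [low, low + width) identifies the message; use the midpoint.
    return low + width / 2


def decode(code, length, probs):
    """Recover `length` symbols by repeatedly locating `code` in the intervals."""
    intervals = build_intervals(probs)
    out = []
    for _ in range(length):
        for symbol, (s_low, s_high) in intervals.items():
            if s_low <= code < s_high:
                out.append(symbol)
                # Rescale so the chosen sub-interval becomes [0, 1) again.
                code = (code - s_low) / (s_high - s_low)
                break
    return "".join(out)
```

Likely symbols shrink the interval only slightly, so a message made of likely symbols ends in a wide interval that can be identified with few bits.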

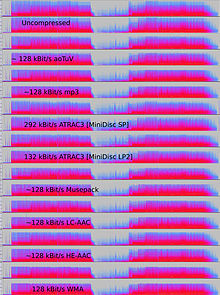

[15] A number of popular compression formats exploit these perceptual differences, including psychoacoustics for sound, and psychovisuals for images and video.

[16][17] DCT is the most widely used lossy compression method, and is used in multimedia formats for images (such as JPEG and HEIF),[18] video (such as MPEG, AVC and HEVC) and audio (such as MP3, AAC and Vorbis).

These areas of study were essentially created by Claude Shannon, who published fundamental papers on the topic in the late 1940s and early 1950s.

A system that predicts the posterior probabilities of a sequence given its entire history can be used for optimal data compression (by using arithmetic coding on the output distribution).
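The link between prediction and compression can be made concrete by summing the ideal code length, -log2(p), of each symbol under a predictive model; an arithmetic coder driven by the same predictions would approach this total. The sketch below assumes a very simple adaptive model (symbol counts with add-one smoothing), chosen only for illustration; `ideal_code_length_bits` is a hypothetical helper name.

```python
import math
from collections import Counter


def ideal_code_length_bits(text, alphabet):
    """Total -log2(p) over the text, where p comes from an adaptive count model."""
    # Start every symbol at count 1 (add-one smoothing) so no probability is zero.
    counts = Counter({symbol: 1 for symbol in alphabet})
    total = len(alphabet)
    bits = 0.0
    for ch in text:
        p = counts[ch] / total       # prediction made before seeing the symbol
        bits += -math.log2(p)        # ideal cost of coding the symbol
        counts[ch] += 1              # update the model with the observed symbol
        total += 1
    return bits
```

For a skewed input such as fifty `a` characters and two `b` characters over the alphabet `"ab"`, the total is well under the 52 bits a fixed one-bit-per-symbol code would need, because the model quickly learns that `a` is likely.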

This technique simplifies handling extensive datasets that lack predefined labels and finds widespread use in fields such as image compression.

Some formats are associated with a distinct system, such as Direct Stream Transfer, used in Super Audio CD, and Meridian Lossless Packing, used in DVD-Audio, Dolby TrueHD, Blu-ray and HD DVD.

Due to the nature of lossy algorithms, audio quality suffers a digital generation loss when a file is decompressed and recompressed.

This makes lossy compression unsuitable for storing the intermediate results in professional audio engineering applications, such as sound editing and multitrack recording.

The inherent latency of the coding algorithm can be critical; for example, when there is a two-way transmission of data, such as with a telephone conversation, significant delays may seriously degrade the perceived quality.

In algorithms such as MP3, however, a large number of samples have to be analyzed to implement a psychoacoustic model in the frequency domain, and latency is on the order of 23 ms.

[56] Initial concepts for LPC date back to the work of Fumitada Itakura (Nagoya University) and Shuzo Saito (Nippon Telegraph and Telephone) in 1966.

[57] During the 1970s, Bishnu S. Atal and Manfred R. Schroeder at Bell Labs developed a form of LPC called adaptive predictive coding (APC), a perceptual coding algorithm that exploited the masking properties of the human ear. It was followed in the early 1980s by the code-excited linear prediction (CELP) algorithm, which achieved a significant compression ratio for its time.

[63] The world's first commercial broadcast automation audio compression system was developed by Oscar Bonello, an engineering professor at the University of Buenos Aires.

[66] 35 years later, almost all the radio stations in the world were using this technology, manufactured by a number of companies, because the inventor refused to patent his work, preferring to publish it and leave it in the public domain.

[67] A literature compendium for a large variety of audio coding systems was published in the IEEE's Journal on Selected Areas in Communications (JSAC), in February 1988.

While there were some papers from before that time, this collection documented a wide variety of finished, working audio coders, nearly all of them using perceptual techniques, some kind of frequency analysis, and back-end noiseless coding.

[70][71] Most video codecs are used alongside audio compression techniques to store the separate but complementary data streams as one combined package using so-called container formats.

Most video compression formats and codecs exploit both spatial and temporal redundancy (e.g. through difference coding with motion compensation).
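Difference coding with motion compensation can be sketched as a block-matching search: for a block in the current frame, find the best-matching block in the previous frame, then store only the motion vector and the residual. The code below is a simplified illustration, not the method of any particular codec. It assumes frames are lists of rows of integer pixel values, uses an exhaustive search with the sum of absolute differences (SAD) as the cost, and all function names are hypothetical.

```python
def get_block(frame, top, left, size):
    """Cut a size x size block out of a frame stored as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(block_a, block_b)
        for a, b in zip(row_a, row_b)
    )


def best_motion_vector(prev, cur, top, left, size, search):
    """Search prev around (top, left) for the block that best predicts cur's block."""
    target = get_block(cur, top, left, size)
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidate positions that fall outside the previous frame.
            if 0 <= y <= height - size and 0 <= x <= width - size:
                cost = sad(target, get_block(prev, y, x, size))
                if best is None or cost < best[0]:
                    best = (cost, dy, dx)
    return best  # (cost, dy, dx)


def residual(prev, cur, top, left, size, dy, dx):
    """Difference between the current block and its motion-compensated prediction."""
    target = get_block(cur, top, left, size)
    predicted = get_block(prev, top + dy, left + dx, size)
    return [[t - p for t, p in zip(rt, rp)] for rt, rp in zip(target, predicted)]
```

When the content of a block has only shifted between frames, the residual is all zeros and the encoder needs to send little more than the motion vector.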

This simplifies video editing software, as it prevents a situation in which a compressed frame refers to data that the editor has deleted.

Interest in fractal compression seems to be waning, due to recent theoretical analysis showing a comparative lack of effectiveness of such methods.

If sections of the frame move in a simple manner, the compressor can emit a (slightly longer) command that tells the decompressor to shift, rotate, lighten, or darken the copy.

During explosions, flames, flocks of animals, and some panning shots, the high-frequency detail commonly leads to a decrease in quality or to an increase in the variable bitrate.

They mostly rely on the DCT, applied to rectangular blocks of neighboring pixels, and on temporal prediction using motion vectors; nowadays they also include an in-loop filtering step.

In the main lossy processing stage, frequency domain data gets quantized in order to reduce information that is irrelevant to human visual perception.
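The quantization of frequency-domain data can be illustrated with a one-dimensional orthonormal DCT-II followed by uniform quantization. This is a deliberately simplified sketch: real image and video codecs use two-dimensional block transforms and perceptually tuned quantization tables, whereas the single step size here is an assumption made for brevity, and the function names are illustrative.

```python
import math


def _scale(k, n):
    """Normalization factor that makes the DCT-II orthonormal."""
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)


def dct2(samples):
    """Orthonormal DCT-II of a list of samples."""
    n = len(samples)
    return [
        _scale(k, n)
        * sum(samples[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


def idct2(coeffs):
    """Inverse of dct2 (the transpose of the orthonormal transform)."""
    n = len(coeffs)
    return [
        sum(
            _scale(k, n) * coeffs[k] * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(n)
        )
        for i in range(n)
    ]


def quantize(coeffs, step):
    """Map each coefficient to an integer level; this is the lossy step."""
    return [round(c / step) for c in coeffs]


def dequantize(levels, step):
    """Approximate the original coefficients from the integer levels."""
    return [level * step for level in levels]
```

A larger `step` discards more detail: small coefficients round to zero and become cheap to encode, at the cost of a larger reconstruction error after `idct2(dequantize(...))`.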

Because these filters are also computed inside the encoding loop, they can help compression: they can be applied to reference material before it is used in the prediction process, and they can be guided using the original signal.

In 1967, A.H. Robinson and C. Cherry proposed a run-length encoding bandwidth compression scheme for the transmission of analog television signals.

[79] H.264/MPEG-4 AVC was developed in 2003 by a number of organizations, primarily Panasonic, Godo Kaisha IP Bridge and LG Electronics.

AVC is the main video encoding standard for Blu-ray Discs. It is also widely used by video sharing websites and streaming internet services such as YouTube, Netflix, Vimeo, and iTunes Store, by web software such as Adobe Flash Player and Microsoft Silverlight, and by various HDTV broadcasts over terrestrial and satellite television.