Apparent magnitude

The apparent magnitude of an object depends on its intrinsic luminosity, its distance, and any extinction of its light by interstellar dust along the line of sight to the observer.

[1] The modern scale was mathematically defined to closely match this historical system by Norman Pogson in 1856.

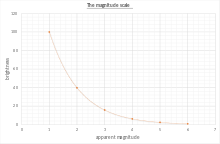

The scale is reverse logarithmic: the brighter an object is, the lower its magnitude number.

The brightest astronomical objects have negative apparent magnitudes: for example, Venus at −4.2 or Sirius at −1.46.

The faintest stars visible with the naked eye on the darkest night have apparent magnitudes of about +6.5, though this varies depending on a person's eyesight and with altitude and atmospheric conditions.

Absolute magnitude is a related quantity which measures the luminosity that a celestial object emits, rather than its apparent brightness when observed, and is expressed on the same reverse logarithmic scale.

Therefore, it is of greater use in stellar astrophysics since it refers to a property of a star regardless of how close it is to Earth.
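The link between apparent and absolute magnitude can be illustrated with the standard distance modulus, m − M = 5 log10(d / 10 pc) + A. The sketch below assumes the distance is given in parsecs and that extinction A is supplied directly in magnitudes; the function and parameter names are illustrative and do not come from the text.

```python
import math


def apparent_from_absolute(abs_mag, distance_pc, extinction_mag=0.0):
    """Apparent magnitude of a source with absolute magnitude abs_mag.

    distance_pc is the distance in parsecs; extinction_mag is the dimming
    along the line of sight in magnitudes (assumed known, default none).
    """
    if distance_pc <= 0:
        raise ValueError("distance must be positive")
    return abs_mag + 5.0 * math.log10(distance_pc / 10.0) + extinction_mag


def absolute_from_apparent(app_mag, distance_pc, extinction_mag=0.0):
    """Invert the distance modulus to recover the absolute magnitude."""
    if distance_pc <= 0:
        raise ValueError("distance must be positive")
    return app_mag - 5.0 * math.log10(distance_pc / 10.0) - extinction_mag
```

For instance, taking Sirius at m = −1.46 and a distance of roughly 2.64 pc, `absolute_from_apparent(-1.46, 2.64)` gives an absolute magnitude of about +1.4.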

This rather crude scale for the brightness of stars was popularized by Ptolemy in his Almagest and is generally believed to have originated with Hipparchus.

[9] The 1884 Harvard Photometry and 1886 Potsdamer Durchmusterung star catalogs popularized Pogson's ratio, and eventually it became a de facto standard in modern astronomy to describe differences in brightness.

The Harvard Photometry used an average of 100 stars close to Polaris to define magnitude 5.0.

[12] Later, the Johnson UBV photometric system defined multiple types of photometric measurements with different filters, where magnitude 0.0 for each filter is defined to be the average of six stars with the same spectral type as Vega.

The most widely used is the AB magnitude system,[15] in which photometric zero points are based on a hypothetical reference spectrum having constant flux per unit frequency interval, rather than using a stellar spectrum or blackbody curve as the reference.
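As a rough illustration of a zero point defined by constant flux per unit frequency, the sketch below converts between a spectral flux density and an AB magnitude. It assumes the commonly quoted approximate zero point of 3631 Jy and a flux density already averaged over the band; the function names are hypothetical.

```python
import math

# Approximate AB zero point: a source with this flux density has m_AB = 0.
AB_ZERO_POINT_JY = 3631.0


def ab_magnitude(flux_density_jy):
    """AB magnitude for a spectral flux density given in janskys."""
    if flux_density_jy <= 0:
        raise ValueError("flux density must be positive")
    return -2.5 * math.log10(flux_density_jy / AB_ZERO_POINT_JY)


def flux_density_from_ab(ab_mag):
    """Spectral flux density in janskys for a given AB magnitude."""
    return AB_ZERO_POINT_JY * 10.0 ** (-0.4 * ab_mag)
```

Because the reference spectrum is flat in frequency, the same zero point applies in every band, which is the design choice that distinguishes this system from star-based zero points.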

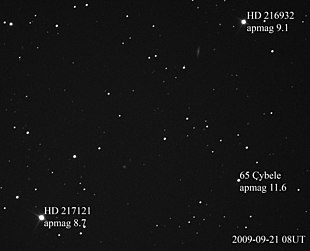

Precision measurement of magnitude (photometry) requires calibration of the photographic or (usually) electronic detection apparatus.

This generally involves contemporaneous observation, under identical conditions, of standard stars whose magnitude using that spectral filter is accurately known.

Moreover, as the amount of light actually received by a telescope is reduced due to transmission through the Earth's atmosphere, the airmasses of the target and calibration stars must be taken into account.

Calibrator stars close in the sky to the target are favoured (to avoid large differences in the atmospheric paths).

Such calibration obtains the brightness as would be observed from above the atmosphere, where apparent magnitude is defined.
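The calibration described above can be sketched with a first-order extinction model, in which the dimming grows linearly with airmass. This is a minimal sketch under stated assumptions: a plane-parallel atmosphere (airmass ≈ sec z), a single extinction coefficient `k` in magnitudes per airmass that is assumed known for the site and filter, and no colour terms. All names are illustrative.

```python
import math


def airmass_plane_parallel(zenith_angle_deg):
    """Approximate airmass as sec(z); reasonable away from the horizon."""
    if not 0.0 <= zenith_angle_deg < 90.0:
        raise ValueError("zenith angle must be in [0, 90) degrees")
    return 1.0 / math.cos(math.radians(zenith_angle_deg))


def above_atmosphere_magnitude(observed_mag, airmass, k):
    """Remove first-order atmospheric extinction from a measured magnitude."""
    return observed_mag - k * airmass


def calibrate_against_standard(target_inst, target_airmass,
                               std_inst, std_airmass, std_catalog, k):
    """Place a target on the standard system using one standard star.

    target_inst and std_inst are instrumental magnitudes; std_catalog is
    the known magnitude of the standard star in the same filter.
    """
    # Zero point: offset between catalog and extinction-corrected instrumental.
    zero_point = std_catalog - above_atmosphere_magnitude(std_inst, std_airmass, k)
    return above_atmosphere_magnitude(target_inst, target_airmass, k) + zero_point
```

When the target and the standard star are observed at the same airmass, the `k` terms cancel, which is why nearby calibrators reduce the sensitivity of the result to the assumed extinction coefficient.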

[citation needed] The apparent magnitude scale in astronomy reflects the received power of stars and not their amplitude.

Newcomers should consider using the relative brightness measure in astrophotography to adjust exposure times between stars.

For example, directly scaling the exposure time from the Moon to the Sun works because they are approximately the same size in the sky.

Inverting the above formula, a magnitude difference m1 − m2 = Δm implies a brightness factor of

$$\frac{F_2}{F_1} = 100^{\Delta m / 5} = 10^{0.4\,\Delta m} \approx 2.512^{\Delta m}.$$
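The conversion between a magnitude difference and a brightness factor can be sketched in a few lines, using the definition that five magnitudes correspond to a factor of exactly 100. The exposure helper is an added illustration that assumes a linear detector and point-like sources; the function names are hypothetical.

```python
import math


def brightness_ratio(delta_mag):
    """Brightness factor F2/F1 for a magnitude difference m1 - m2 = delta_mag."""
    return 100.0 ** (delta_mag / 5.0)


def magnitude_difference(ratio):
    """Magnitude difference m1 - m2 for a brightness factor F2/F1 = ratio."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 2.5 * math.log10(ratio)


def scaled_exposure(ref_exposure_s, ref_mag, target_mag):
    """Exposure time collecting as much light from the target as from the
    reference (assumes a linear detector and unresolved sources)."""
    return ref_exposure_s * brightness_ratio(target_mag - ref_mag)
```

With Sirius at −1.46 and Venus at −4.2, `brightness_ratio(-1.46 - (-4.2))` indicates that Venus appears roughly 12 times brighter than Sirius.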

For example, photometry on closely separated double stars may only be able to produce a measurement of their combined light output.
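Because magnitudes are logarithmic, the combined magnitude of an unresolved pair is found by adding the brightnesses in linear units and converting the sum back. The sketch below assumes all inputs are magnitudes in the same band; `combined_magnitude` is an illustrative name.

```python
import math


def combined_magnitude(*magnitudes):
    """Magnitude of the summed light of several sources in the same band."""
    if not magnitudes:
        raise ValueError("at least one magnitude is required")
    # Convert each magnitude to a relative linear brightness and add them.
    total_brightness = sum(10.0 ** (-0.4 * m) for m in magnitudes)
    # Convert the summed brightness back to the magnitude scale.
    return -2.5 * math.log10(total_brightness)
```

Two equal components come out about 0.75 magnitudes brighter than either one alone, since doubling the light corresponds to 2.5 log10(2) magnitudes.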

While magnitude generally refers to a measurement in a particular filter band corresponding to some range of wavelengths, the apparent or absolute bolometric magnitude ($m_\mathrm{bol}$) is a measure of an object's apparent or absolute brightness integrated over all wavelengths of the electromagnetic spectrum (also known as the object's irradiance or power, respectively).

In contrast, the intrinsic brightness of an astronomical object does not depend on the distance of the observer or on any extinction.

[20][21][22] In the case of a planet or asteroid, the absolute magnitude H instead denotes the apparent magnitude it would have if it were 1 astronomical unit (150,000,000 km) from both the observer and the Sun, and fully illuminated at maximum opposition (a configuration that is only theoretically achievable, with the observer situated on the surface of the Sun).

The V band was chosen for spectral purposes and gives magnitudes closely corresponding to those seen by the human eye.

Indeed, some L and T class stars have an estimated magnitude of well over 100, because they emit extremely little visible light and are strongest in the infrared.

On early 20th century and older orthochromatic (blue-sensitive) photographic film, the relative brightnesses of the blue supergiant Rigel and the red supergiant Betelgeuse, an irregular variable star (taken at maximum), are reversed compared to what human eyes perceive, because this archaic film is more sensitive to blue light than it is to red light.

[29][30] For planets and other Solar System bodies, the apparent magnitude is derived from the body's phase curve and its distances from the Sun and the observer.
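One common way to write this dependence is m = H + 5 log10(r Δ) − 2.5 log10 Φ(α), with r and Δ the distances to the Sun and to the observer in astronomical units and Φ a phase function normalized so that Φ(0) = 1. The sketch below is only an illustration under those assumptions; the default phase function is an idealized Lambert (diffuse) sphere, which real bodies follow only approximately, and all names are hypothetical.

```python
import math


def lambert_phase_function(phase_angle_rad):
    """Phase function of an ideal diffuse sphere, normalized to 1 at zero phase."""
    a = phase_angle_rad
    return ((math.pi - a) * math.cos(a) + math.sin(a)) / math.pi


def apparent_magnitude_body(abs_mag_h, dist_sun_au, dist_obs_au,
                            phase_angle_rad=0.0,
                            phase_function=lambert_phase_function):
    """Apparent magnitude of a Solar System body from H, distances and phase.

    dist_sun_au and dist_obs_au are in astronomical units; phase_function
    must return a value in (0, 1] with phase_function(0) == 1.
    """
    if dist_sun_au <= 0 or dist_obs_au <= 0:
        raise ValueError("distances must be positive")
    phi = phase_function(phase_angle_rad)
    if phi <= 0:
        raise ValueError("phase function must be positive")
    return (abs_mag_h
            + 5.0 * math.log10(dist_sun_au * dist_obs_au)
            - 2.5 * math.log10(phi))
```

A measured phase curve for a specific body can be passed in through the `phase_function` argument in place of the idealized default.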

Telescope sensitivity depends on observing time, optical bandpass, and interfering light from scattering and airglow.