Word list

Frequency lists are also made for lexicographical purposes, serving as a sort of checklist to ensure that common words are not left out.

While word counting is a thousand years old, with still gigantic analysis done by hand in the mid-20th century, natural language electronic processing of large corpora such as movie subtitles (SUBTLEX megastudy) has accelerated the research field.

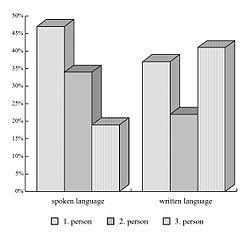

He cited several key issues which influence the construction of frequency lists: Most of currently available studies are based on written text corpus, more easily available and easy to process.

The initial research saw a handful of follow-up studies,[1] providing valuable frequency count analysis for various languages.

It seems that Zipf's law holds for frequency lists drawn from longer texts of any natural language.

Frequency lists are a useful tool when building an electronic dictionary, which is a prerequisite for a wide range of applications in computational linguistics.

Those lists are not intended to be given directly to students, but rather to serve as a guideline for teachers and textbook authors (Nation 1997).

Memorization is positively affected by higher word frequency, likely because the learner is subject to more exposures (Laufer 1997).

Word counting is an ancient field,[5] with known discussion back to Hellenistic time.

In 1944, Edward Thorndike, Irvin Lorge and colleagues[6] hand-counted 18,000,000 running words to provide the first large-scale English language frequency list, before modern computers made such projects far easier (Nation 1997).

In particular, words relating to technology, such as "blog," which, in 2014, was #7665 in frequency[7] in the Corpus of Contemporary American English,[8] was first attested to in 1999,[9][10][11] and does not appear in any of these three lists.

The size of its source corpus increased its usefulness, but its age, and language changes, have reduced its applicability (Nation 1997).

A corpus of 5 million running words, from written texts used in United States schools (various grades, various subject areas).

[18] More recently, the project Lexique3 provides 142,000 French words, with orthography, phonetic, syllabation, part of speech, gender, number of occurrence in the source corpus, frequency rank, associated lexemes, etc., available under an open license CC-by-sa-4.0.

Following the SUBTLEX movement, Cai & Brysbaert 2010 recently made a rich study of Chinese word and character frequencies.