Calibration

[1] This definition states that the calibration process is purely a comparison, but introduces the concept of measurement uncertainty in relating the accuracies of the device under test and the standard.

The National Metrology Institute (NMI) supports the metrological infrastructure in its country (and often others) by establishing an unbroken chain from the top level of standards to an instrument used for measurement.

Examples of National Metrology Institutes are NPL in the UK, NIST in the United States, PTB in Germany and many others.

This may be done by national standards laboratories operated by the government or by private firms offering metrology services.

[5] Together, these standards cover instruments that measure various physical quantities such as electromagnetic radiation (RF probes), sound (sound level meter or noise dosimeter), time and frequency (intervalometer), ionizing radiation (Geiger counter), light (light meter), mechanical quantities (limit switch, pressure gauge, pressure switch), and thermodynamic or thermal properties (thermometer, temperature controller).

In other words, the design has to be capable of measurements that are "within engineering tolerance" when used within the stated environmental conditions over some reasonable period of time.

The purpose of calibration is to maintain the quality of measurement and to ensure that a particular instrument works properly.

The using organization generally assigns the actual calibration interval, which depends on the likely usage level of the specific measuring equipment.

The standards themselves are not clear on recommended calibration interval (CI) values.[7]

The next step is defining the calibration process.

[10] This ratio was probably first formalized in Handbook 52 that accompanied MIL-STD-45662A, an early US Department of Defense metrology program specification.

Another common method for dealing with this capability mismatch is to reduce the accuracy of the device being calibrated.

Changing the acceptable range to 97 to 103 units would remove the potential contribution of all of the standards and preserve a 3.3:1 ratio.
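The arithmetic behind a tightened acceptance range can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the nominal value of 100 units, the device tolerance of ±4 units, and the standard accuracy of ±1 unit are chosen here because they reproduce the 97 to 103 range, and the function names `accuracy_ratio` and `guard_banded_limits` are hypothetical.

```python
def accuracy_ratio(device_tolerance: float, standard_uncertainty: float) -> float:
    """Ratio of the device tolerance to the standard's uncertainty (same units)."""
    if standard_uncertainty <= 0:
        raise ValueError("standard_uncertainty must be positive")
    return device_tolerance / standard_uncertainty


def guard_banded_limits(nominal: float, device_tolerance: float,
                        standard_uncertainty: float) -> tuple[float, float]:
    """Acceptance limits narrowed on each side by the standard's uncertainty."""
    margin = device_tolerance - standard_uncertainty
    if margin <= 0:
        raise ValueError("standard is not accurate enough for this tolerance")
    return nominal - margin, nominal + margin


# Assumed values: nominal 100 units, device tolerance 4 units, standard 1 unit.
print(accuracy_ratio(4.0, 1.0))              # 4.0
print(guard_banded_limits(100.0, 4.0, 1.0))  # (97.0, 103.0)
```

Narrowing the limits by the full uncertainty of the standard means a reading accepted at the edge of the range is still within the device tolerance even if the standard is off by its worst-case amount.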

Depending on the device, a zero unit state, the absence of the phenomenon being measured, may also be a calibration point.

For example, in electronic calibrations involving analog phenomena, the impedance of the cable connections can directly influence the result.

The gauge under test may be adjusted to ensure its zero point and response to pressure conform as closely as possible to the intended accuracy.
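A zero and response adjustment of this kind can be modeled, as a rough sketch, by a two-point linear correction. The code below assumes the gauge responds linearly between a zero point and one upscale point. The function name `two_point_correction` and all readings are hypothetical, and a real adjustment is often made mechanically or electronically on the gauge itself rather than in software.

```python
from typing import Callable


def two_point_correction(raw_zero: float, raw_span: float,
                         ref_zero: float, ref_span: float) -> Callable[[float], float]:
    """Build a linear map from raw gauge readings to reference values.

    raw_zero, raw_span: gauge readings at the two calibration points.
    ref_zero, ref_span: values of the standard at those same points.
    """
    if raw_span == raw_zero:
        raise ValueError("calibration points must give distinct raw readings")
    gain = (ref_span - ref_zero) / (raw_span - raw_zero)

    def correct(raw: float) -> float:
        # Shift by the zero error, then scale by the response (span) error.
        return gain * (raw - raw_zero) + ref_zero

    return correct


# Hypothetical gauge: reads 0.5 at true zero and 98.5 at a true 100 units.
correct = two_point_correction(0.5, 98.5, 0.0, 100.0)
print(round(correct(49.5), 3))  # approximately 50.0
```

With more than two calibration points, the same idea extends to a least-squares fit or a piecewise correction, at the cost of more measurements.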

There are clearinghouses for calibration procedures such as the Government-Industry Data Exchange Program (GIDEP) in the United States.

Some organizations such as nuclear power plants collect "as-found" calibration data before any routine maintenance is performed.

As a commonly accepted rule of thumb, ordinary equipment support costs about 10% of the original purchase price per year.

However, depending on the organization, most of the devices that need calibration can have several ranges and many functions in a single instrument.

Some of the earliest known systems of measurement and calibration seem to have been created between the ancient civilizations of Egypt, Mesopotamia and the Indus Valley, with excavations revealing the use of angular gradations for construction.

[15] The term "calibration" was likely first associated with the precise division of linear distance and angles using a dividing engine and the measurement of gravitational mass using a weighing scale.

These two forms of measurement alone and their direct derivatives supported nearly all commerce and technology development from the earliest civilizations until about AD 1800.

At the beginning of the twelfth century, during the reign of Henry I (1100–1135), it was decreed that a yard be "the distance from the tip of the King's nose to the end of his outstretched thumb."

[18] Other standardization attempts followed, such as the Magna Carta (1225) for liquid measures, until the Mètre des Archives from France and the establishment of the metric system.

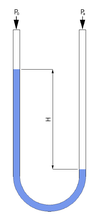

[20] An example is in high-pressure (up to 50 psi) steam engines, where mercury was used to reduce the scale length to about 60 inches, but such a manometer was expensive and prone to damage.