Image stabilization

In astronomy, lens shake is compounded by variation in the atmosphere, which changes the apparent positions of objects over time.

Image stabilization is only designed for and capable of reducing blur that results from normal, minute shaking of a lens due to hand-held shooting.

The Pentax K-5 and K-r, when equipped with the O-GPS1 GPS accessory for position data, can use their sensor-shift capability to reduce the resulting star trails.

This technology is implemented in the lens itself, as distinct from in-body image stabilization (IBIS), which operates by moving the sensor as the final element in the optical path.

For handheld video recording, regardless of lighting conditions, optical image stabilization compensates for minor shakes, which become more noticeable when the footage is watched on a large display such as a television set or computer monitor.

[6][7][8] Different companies have different names for the OIS technology. Most high-end smartphones as of late 2014 use optical image stabilization for photos and videos.

[18] This is because active mode is optimized for reducing higher angular velocity movements (typically when shooting from a heavily moving platform using faster shutter speeds), whereas normal mode tries to reduce lower angular velocity movements over a larger amplitude and timeframe (typically body and hand movement when standing on a stationary or slowly moving platform while using slower shutter speeds).

Many modern image stabilization lenses (notably Canon's more recent IS lenses) are able to auto-detect that they are tripod-mounted (as a result of extremely low vibration readings) and disable IS automatically to prevent this and any consequent image quality reduction.

While the most obvious advantage for image stabilization lies with longer focal lengths, even normal and wide-angle lenses benefit from it in low-light applications.

This is not an issue for mirrorless interchangeable-lens camera systems, because the sensor output to the screen or electronic viewfinder is stabilized.

When the camera rotates, causing angular error, gyroscopes measure the rotation and pass that information to the actuator that moves the sensor.

Minolta and Konica Minolta used a technique called Anti-Shake (AS), now marketed as SteadyShot (SS) in the Sony α line and as Shake Reduction (SR) in the Pentax K-series and Q-series cameras, which relies on a very precise angular rate sensor to detect camera motion.

[21] Olympus introduced image stabilization with their E-510 D-SLR body, employing a system built around their Supersonic Wave Drive.

[22] Other manufacturers use digital signal processors (DSP) to analyze the image on the fly and then move the sensor appropriately.

Some sensor-based image stabilization implementations are capable of correcting camera roll rotation, a motion that is easily excited by pressing the shutter button.

Both the speed and range of the required sensor movement increase with the focal length of the lens being used, making sensor-shift technology less suited for very long telephoto lenses, especially when using slower shutter speeds, because the available motion range of the sensor quickly becomes insufficient to cope with the increasing image displacement.
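The dependence on focal length can be illustrated with a short calculation. This is a minimal sketch, assuming a distant subject, so that a camera tilt of angle θ displaces the image on the sensor by roughly f · tan(θ); the function name `sensor_shift_mm` and the example tilt are illustrative choices, not figures from any manufacturer.

```python
import math


def sensor_shift_mm(focal_length_mm, tilt_deg):
    """Image displacement on the sensor for a given camera tilt.

    Assumes a distant subject, so displacement is about f * tan(theta).
    """
    return focal_length_mm * math.tan(math.radians(tilt_deg))


if __name__ == "__main__":
    # Same hypothetical tilt of 0.1 degrees at several focal lengths.
    for f in (24, 50, 200, 400, 800):
        print(f"{f:4d} mm lens: sensor must move {sensor_shift_mm(f, 0.1):.3f} mm")
```

For a fixed tilt the required travel scales linearly with focal length, so a lens eight times longer needs eight times the sensor movement in the same time.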

Therefore, depending on the angle of view, the maximum exposure time should not exceed 1⁄3 second for long telephoto shots (with a 35 mm equivalent focal length of 800 millimeters) and a little more than ten seconds for wide angle shots (with a 35 mm equivalent focal length of 24 millimeters), if the movement of the Earth is not taken into consideration by the image stabilization process.
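The order of magnitude of these limits can be checked with a rough calculation. The sketch below assumes the worst case, in which the Earth's full rotation rate (one turn per sidereal day) appears as apparent image drift, and it assumes a tolerable blur of 0.02 mm on a 35 mm format sensor. That tolerance is an assumption chosen for illustration, not a value given in the text, and `max_exposure_s` is a hypothetical helper name.

```python
import math

SIDEREAL_DAY_S = 86164.0905  # duration of one rotation of the Earth, in seconds
EARTH_RATE_RAD_S = 2 * math.pi / SIDEREAL_DAY_S


def max_exposure_s(focal_length_mm, blur_limit_mm=0.02):
    """Longest exposure before uncompensated Earth rotation exceeds the blur limit.

    Small-angle approximation: image drift = f * omega * t.
    blur_limit_mm is an assumed tolerance, not a value from the source.
    """
    return blur_limit_mm / (focal_length_mm * EARTH_RATE_RAD_S)


if __name__ == "__main__":
    for f in (24, 800):
        print(f"{f} mm equivalent: about {max_exposure_s(f):.2f} s")
```

With the assumed tolerance, the result is roughly a third of a second at 800 mm and a little over eleven seconds at 24 mm, in line with the figures quoted above.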

In this case, only the independent degrees of compensation of the built-in image sensor stabilization are activated to support lens stabilisation.

[27] Canon and Nikon now have full-frame mirrorless bodies that have IBIS and also support each company's lens-based stabilization.

Canon's first two such bodies, the EOS R and RP, do not have IBIS, but the feature was added for the more recent higher-end R3, R5, R6 (and its MkII version) and the APS-C R7.

This technique shifts the cropped area read out from the image sensor for each frame to counteract the motion.
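A minimal sketch of this crop-shifting idea follows. It assumes the image displacement of each frame, in whole pixels, is already known (for example from a gyroscope or from frame-to-frame matching) and that a fixed margin is reserved around the output window. The names `stabilized_crop` and `clamp` are hypothetical and do not come from any camera firmware.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def stabilized_crop(frame, shift_x, shift_y, margin):
    """Return a cropped window that follows the image content.

    frame   : list of rows (each a list of pixel values)
    shift_x : how far the content moved right, in pixels (negative = left)
    shift_y : how far the content moved down, in pixels (negative = up)
    margin  : pixels reserved on every side; also the largest correctable shift
    """
    height, width = len(frame), len(frame[0])
    out_h, out_w = height - 2 * margin, width - 2 * margin
    # Move the crop window with the content, but never outside the sensor area.
    x0 = margin + clamp(shift_x, -margin, margin)
    y0 = margin + clamp(shift_y, -margin, margin)
    return [row[x0:x0 + out_w] for row in frame[y0:y0 + out_h]]
```

The output is always smaller than the full sensor read-out, which is why this approach trades some field of view for stability; shifts larger than the margin can only be partly corrected.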

Others now also use digital signal processing (DSP) to reduce blur in stills, for example by sub-dividing the exposure into several shorter exposures in rapid succession, discarding blurred ones, re-aligning the sharpest sub-exposures and adding them together, and using the gyroscope to detect the best time to take each frame.
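The select, align and add steps can be sketched as follows. This is an illustration under stated assumptions, not any vendor's algorithm: sharpness is estimated with a simple gradient-energy measure, the per-frame offsets are assumed to be known in whole pixels (for instance from gyroscope data), and all function names are hypothetical.

```python
def sharpness(img):
    """Gradient energy: sum of squared differences between neighbouring pixels."""
    h, w = len(img), len(img[0])
    horiz = sum((img[y][x + 1] - img[y][x]) ** 2 for y in range(h) for x in range(w - 1))
    vert = sum((img[y + 1][x] - img[y][x]) ** 2 for y in range(h - 1) for x in range(w))
    return horiz + vert


def translate(img, dx, dy, fill=0):
    """Return a copy of img with its content moved by (dx, dy) pixels."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def stack_sharpest(frames, offsets, keep):
    """Keep the `keep` sharpest sub-exposures, undo their offsets, and add them."""
    ranked = sorted(range(len(frames)), key=lambda i: sharpness(frames[i]), reverse=True)
    h, w = len(frames[0]), len(frames[0][0])
    total = [[0] * w for _ in range(h)]
    for i in ranked[:keep]:
        dx, dy = offsets[i]
        aligned = translate(frames[i], -dx, -dy)  # move content back to the reference position
        for y in range(h):
            for x in range(w):
                total[y][x] += aligned[y][x]
    return total
```

Adding the aligned sub-exposures recovers the light of a longer exposure, while the blurred frames that would have smeared the result are left out.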

[35] Online services, including YouTube, are also beginning to provide video stabilization as a post-processing step after content is uploaded.

This has the disadvantage of not having access to the real-time gyroscopic data, but the advantage of more computing power and the ability to analyze images both before and after a particular frame.

Moving, rather than tilting, the camera up/down or left/right by a fraction of a millimeter becomes noticeable if you are trying to resolve millimeter-size details on the object.
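A small calculation shows why translation matters mainly at close range. The sketch assumes that a sideways camera movement shifts the image on the sensor by the movement multiplied by the optical magnification; the pixel pitch of 4 µm and the two magnifications are illustrative assumptions, and `image_shift_px` is a hypothetical helper.

```python
def image_shift_px(translation_mm, magnification, pixel_pitch_um):
    """Image shift in pixels caused by moving the camera sideways.

    Assumes image shift = camera translation * magnification.
    """
    shift_um = translation_mm * magnification * 1000.0
    return shift_um / pixel_pitch_um


if __name__ == "__main__":
    # A 0.1 mm camera movement, with an assumed 4 micrometre pixel pitch.
    print("1:1 macro:      ", image_shift_px(0.1, 1.0, 4.0), "pixels")
    print("distant subject:", image_shift_px(0.1, 0.01, 4.0), "pixels")
```

At life-size magnification the same tiny movement smears detail across tens of pixels, whereas at low magnification it stays well below one pixel.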

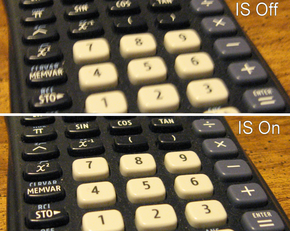

- unstabilised

- lens-based optical stabilisation

- sensor-shift optical stabilisation

- digital or electronic stabilisation