Software quality

Other aspects, such as reliability, might involve not only the software but also the underlying hardware, therefore, it can be assessed both statically and dynamically (stress test).

[citation needed] Historically, the structure, classification, and terminology of attributes and metrics applicable to software quality management have been derived or extracted from the ISO 9126 and the subsequent ISO/IEC 25000 standard.

An aggregated measure of software quality can be computed through a qualitative or a quantitative scoring scheme or a mix of both and then a weighting system reflecting the priorities.

This view of software quality being positioned on a linear continuum is supplemented by the analysis of "critical programming errors" that under specific circumstances can lead to catastrophic outages or performance degradations that make a given system unsuitable for use regardless of rating based on aggregated measurements.

This is not easy, and as soon as one feels fairly successful in the endeavor, he finds that the needs of the consumer have changed, competitors have moved in, etc.

It is based on the customer's actual experience with the product or service, measured against his or her requirements -- stated or unstated, conscious or merely sensed, technically operational or entirely subjective -- and always representing a moving target in a competitive market.

It does over-lap with before mentioned areas (see also PMI definitions), but is distinctive as it does not solely focus on testing but also on processes, management, improvements, assessments, etc.

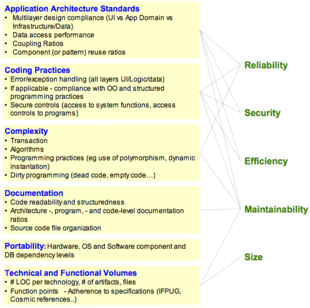

Subcategories have been created to handle specific areas like business application architecture and technical characteristics such as data access and manipulation or the notion of transactions.

The analysis can be performed using a qualitative or quantitative approach or a mix of both to provide an aggregate view [using for example weighted average(s) that reflect relative importance between the factors being measured].

This view of software quality on a linear continuum has to be supplemented by the identification of discrete Critical Programming Errors.

These vulnerabilities may not fail a test case, but they are the result of bad practices that under specific circumstances can lead to catastrophic outages, performance degradations, security breaches, corrupted data, and myriad other problems[66] that make a given system de facto unsuitable for use regardless of its rating based on aggregated measurements.

This is distinct from the basic, local, component-level code analysis typically performed by development tools which are mostly concerned with implementation considerations and are crucial during debugging and testing activities.

Assessing reliability requires checks of at least the following software engineering best practices and technical attributes: Depending on the application architecture and the third-party components used (such as external libraries or frameworks), custom checks should be defined along the lines drawn by the above list of best practices to ensure a better assessment of the reliability of the delivered software.

As with Reliability, the causes of performance inefficiency are often found in violations of good architectural and coding practice which can be detected by measuring the static quality attributes of an application.

These static attributes predict potential operational performance bottlenecks and future scalability problems, especially for applications requiring high execution speed for handling complex algorithms or huge volumes of data.

[70] Many security vulnerabilities result from poor coding and architectural practices such as SQL injection or cross-site scripting.

[69] Assessing security requires at least checking the following software engineering best practices and technical attributes: Maintainability includes concepts of modularity, understandability, changeability, testability, reusability, and transferability from one development team to another.

It can be applied early in the software development life-cycle and it is not dependent on lines of code like the somewhat inaccurate Backfiring method.

One common limitation to the Function Point methodology is that it is a manual process and therefore it can be labor-intensive and costly in large scale initiatives such as application development or outsourcing engagements.

This negative aspect of applying the methodology may be what motivated industry IT leaders to form the Consortium for IT Software Quality focused on introducing a computable metrics standard for automating the measuring of software size while the IFPUG keep promoting a manual approach as most of its activity rely on FP counters certifications.

Critical Programming Errors are specific architectural and/or coding bad practices that result in the highest, immediate or long term, business disruption risk.