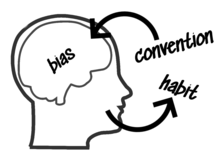

Cognitive bias

[5] Furthermore, allowing cognitive biases enables faster decisions, which can be desirable when timeliness is more valuable than accuracy, as heuristics illustrate.

[7][8] Research suggests that cognitive biases can make individuals more inclined to endorse pseudoscientific beliefs by requiring less evidence for claims that confirm their preconceptions.

The study of cognitive biases has practical implications for areas including clinical judgment, entrepreneurship, finance, and management.

[10][11] The notion of cognitive biases was introduced by Amos Tversky and Daniel Kahneman in 1972[12] and grew out of their experience of people's innumeracy, or inability to reason intuitively with the greater orders of magnitude.

Tversky, Kahneman, and colleagues demonstrated several replicable ways in which human judgments and decisions differ from rational choice theory.

[6] For example, the representativeness heuristic is defined as "the tendency to judge the frequency or likelihood" of an occurrence by the extent to which the event "resembles the typical case."

Critics of Kahneman and Tversky, such as Gerd Gigerenzer, alternatively argued that heuristics should not lead us to conceive of human thinking as riddled with irrational cognitive biases.

[15] Nevertheless, experiments such as the "Linda problem" grew into heuristics and biases research programs, which spread beyond academic psychology into other disciplines including medicine and political science.

[23][24] The following is a list of the more commonly studied cognitive biases:

Many social institutions rely on individuals to make rational judgments.

For instance, bias is a widespread and well-studied phenomenon because most decisions that concern the minds and hearts of entrepreneurs are computationally intractable.

[36] They found that the participants who ate more of the unhealthy snack food tended to have less inhibitory control and to rely more on approach bias.

[39] Some believe that people in authority use cognitive biases and heuristics to manipulate others in order to reach their own end goals.

A systematic review showed evidence that cognitive biases, such as source confusion, gist memory, and repetition effects, can contribute to miscitations in academic literature, leading to high rates of citation inaccuracies.

This example demonstrates how a cognitive bias, typically seen as a hindrance, can enhance collective decision-making by encouraging a wider exploration of possibilities.

[42] Cognitive biases are interlinked with collective illusions, a phenomenon where a group of people mistakenly believe that their views and preferences are shared by the majority, when in reality, they are not.

These illusions often arise from cognitive biases that distort our perception of social norms and influence how we assess the beliefs of others.

[43] Because they cause systematic errors, cognitive biases cannot be compensated for by the wisdom-of-the-crowd technique of averaging answers from several people.
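The difference between random and systematic error can be made concrete with a small simulation. The sketch below is illustrative only: the function name `crowd_average`, the true value, the size of the shared bias, and the noise level are all assumptions chosen for the example, not figures from the literature. Each simulated person reports the true value plus a bias shared by everyone plus independent personal noise.

```python
import random
import statistics


def crowd_average(true_value, shared_bias, noise_sd, n_people, seed=0):
    """Average the estimates of n_people simulated judges.

    Each estimate = true value + a bias common to all judges
    + independent Gaussian noise unique to that judge.
    All parameters are hypothetical illustration values.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    estimates = [
        true_value + shared_bias + rng.gauss(0, noise_sd)
        for _ in range(n_people)
    ]
    return statistics.fmean(estimates)


TRUE_VALUE = 100.0

# Crowd with random error only: individual errors largely cancel out.
noise_only = crowd_average(TRUE_VALUE, shared_bias=0.0, noise_sd=20.0, n_people=10_000)

# Crowd sharing a systematic bias: the average keeps the full bias.
biased = crowd_average(TRUE_VALUE, shared_bias=15.0, noise_sd=20.0, n_people=10_000)

print(f"error with noise only:  {noise_only - TRUE_VALUE:+.2f}")
print(f"error with shared bias: {biased - TRUE_VALUE:+.2f}")
```

Under these assumptions, the average of the noise-only crowd lands close to the true value, while the average of the biased crowd stays offset by roughly the shared bias no matter how many people are polled.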

Reference class forecasting is a method for systematically debiasing estimates and decisions, based on what Daniel Kahneman has dubbed the outside view.
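One simplified way to picture the outside view is to scale a project's own ("inside view") estimate by how similar past projects actually turned out. The sketch below is a minimal illustration under stated assumptions: the function name `reference_class_forecast` is hypothetical, the ratios of actual to estimated cost are invented, and the percentile-uplift approach is one simple formulation rather than a definitive statement of the method.

```python
import statistics


def reference_class_forecast(inside_estimate, past_ratios, percentile=50):
    """Adjust an inside-view estimate using a reference class.

    past_ratios holds actual/estimated outcomes for comparable past
    projects (hypothetical data here). The estimate is multiplied by
    the chosen percentile of that distribution.
    """
    if not 1 <= percentile <= 99:
        raise ValueError("percentile must be between 1 and 99")
    # quantiles(n=100) returns 99 cut points; cut point k-1 is the k-th percentile.
    cut_points = statistics.quantiles(past_ratios, n=100, method="inclusive")
    uplift = cut_points[percentile - 1]
    return inside_estimate * uplift


# Invented ratios of actual cost to estimated cost for nine past projects.
past_ratios = [0.95, 1.00, 1.05, 1.10, 1.20, 1.30, 1.40, 1.60, 2.00]

inside_view = 100.0  # the planner's own estimate, in arbitrary units

median_forecast = reference_class_forecast(inside_view, past_ratios, percentile=50)
cautious_forecast = reference_class_forecast(inside_view, past_ratios, percentile=80)

print(f"median outside-view forecast: {median_forecast:.1f}")
print(f"80th-percentile forecast:     {cautious_forecast:.1f}")
```

Choosing a higher percentile gives a more conservative forecast. Which percentile is appropriate depends on how much risk of overrun the decision-maker is willing to accept.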

Carey K. Morewedge and colleagues (2015) found that research participants exposed to one-shot training interventions, such as educational videos and debiasing games that taught mitigating strategies, exhibited significant reductions in their commission of six cognitive biases immediately and up to 3 months later.

Those with higher Cognitive Reflection Test (CRT) scores tend to answer more accurately on a range of heuristic and cognitive bias tests and tasks.

Advances in economics and cognitive neuroscience now suggest that many behaviors previously labeled as biases might instead represent optimal decision-making strategies.