Color quantization

Almost any three-dimensional clustering algorithm can be applied to color quantization, and vice versa.

A more recent and popular method is clustering with octrees, first conceived by Gervautz and Purgathofer and later improved by Xerox PARC researcher Dan Bloomberg.
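As a rough illustration of how an octree organizes colors, the sketch below computes the branch taken at each tree level for an 8-bit RGB color. It assumes the common convention of combining one bit from each channel per level, most significant bit first. The function name `octree_path` is hypothetical, and the sketch covers only the indexing step, not the node merging that an actual octree quantizer performs to reduce the palette.

```python
def octree_path(r, g, b, depth=8):
    """Return the child index (0-7) chosen at each octree level
    for an 8-bit-per-channel RGB color.

    Assumption: the red bit is the high bit of the index, then green,
    then blue. Other orderings work equally well if used consistently.
    """
    path = []
    for level in range(depth):
        shift = 7 - level  # start from the most significant bit
        index = (
            (((r >> shift) & 1) << 2)
            | (((g >> shift) & 1) << 1)
            | ((b >> shift) & 1)
        )
        path.append(index)
    return path


# Colors that share their leading bits share the first steps of the path,
# so similar colors end up in the same subtree.
print(octree_path(128, 64, 32, depth=3))
```

Truncating the path at a shallower depth groups nearby colors under one node, which is what makes the structure usable for clustering.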

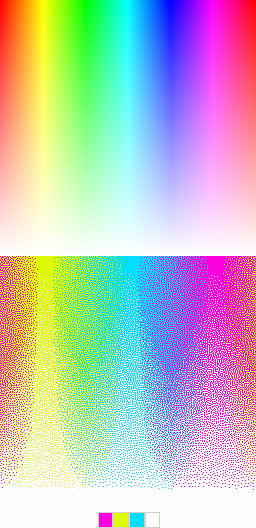

Some modern schemes for color quantization attempt to combine palette selection with dithering in one stage, rather than perform them independently.

The Local K-means algorithm, conceived by Oleg Verevka in 1995, is designed for use in windowing systems where a core set of "reserved colors" is fixed for use by the system and many images with different color schemes might be displayed simultaneously.

It is a post-clustering scheme that makes an initial guess at the palette and then iteratively refines it.

In the early days of color quantization, the k-means clustering algorithm was deemed unsuitable because of its high computational requirements and sensitivity to initialization.

Later work [2] demonstrated that an efficient implementation of k-means outperforms a large number of color quantization methods.
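For orientation, a minimal sketch of plain k-means applied to RGB pixels follows. It is the textbook form of the algorithm, not the efficient implementation the text refers to, and the names `nearest` and `kmeans_palette`, the seeded random initialization, and the fixed iteration count are all assumptions made for illustration.

```python
import random


def nearest(color, centers):
    """Index of the center closest to color (squared Euclidean distance in RGB)."""
    return min(
        range(len(centers)),
        key=lambda j: sum((a - b) ** 2 for a, b in zip(color, centers[j])),
    )


def kmeans_palette(pixels, k, iterations=10, seed=0):
    """Return k palette colors for a list of (r, g, b) tuples.

    Assumes k <= len(pixels). The initial centers are k pixels sampled
    at random, which is where the sensitivity to initialization comes from.
    """
    rng = random.Random(seed)
    centers = [tuple(float(v) for v in c) for c in rng.sample(pixels, k)]
    for _ in range(iterations):
        sums = [[0.0, 0.0, 0.0] for _ in range(k)]
        counts = [0] * k
        # Assignment step: attach every pixel to its nearest center.
        for p in pixels:
            j = nearest(p, centers)
            counts[j] += 1
            for c in range(3):
                sums[j][c] += p[c]
        # Update step: move each non-empty center to the mean of its pixels.
        for j in range(k):
            if counts[j]:
                centers[j] = tuple(s / counts[j] for s in sums[j])
    return [tuple(round(v) for v in c) for c in centers]


pixels = [(250, 0, 0), (254, 0, 0), (0, 0, 250), (0, 0, 254)]
print(sorted(kmeans_palette(pixels, 2)))
```

Every iteration compares each pixel with each center, which illustrates the computational cost mentioned above. A practical implementation would reduce that work, for example by operating on a color histogram rather than on individual pixels.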

Finally, one of the newer methods is spatial color quantization, conceived by Puzicha, Held, Ketterer, Buhmann, and Fellner of the University of Bonn, which combines dithering with palette generation and a simplified model of human perception to produce visually impressive results even for very small numbers of colors.

Modern computers can display millions of colors at once, far more than the human eye can distinguish, so quantization for display purposes is now limited primarily to mobile devices and legacy hardware.

GIF, for a long time the most popular lossless and animated bitmap format on the World Wide Web, supports at most 256 colors, necessitating quantization for many images.

PNG images support 24-bit color, but can often be made much smaller in file size without much visual degradation by applying color quantization, since PNG files use fewer bits per pixel for palettized images.
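The effect of the lower bit depth can be estimated with simple arithmetic. The sketch below compares the raw pixel data of a 24-bit image with that of palettized versions, counting 3 bytes per palette entry. The helper `uncompressed_bytes` is hypothetical, and it deliberately ignores compression, per-row filter bytes, and chunk overhead, so the numbers indicate only the size of the data before compression, not the final file size.

```python
def uncompressed_bytes(width, height, bits_per_pixel, palette_entries=0):
    """Raw pixel data size in bytes, plus 3 bytes (R, G, B) per palette entry.

    Assumption: each row is padded to a whole number of bytes.
    """
    row_bytes = (width * bits_per_pixel + 7) // 8
    return row_bytes * height + 3 * palette_entries


truecolor = uncompressed_bytes(640, 480, 24)        # 24-bit RGB
indexed_8 = uncompressed_bytes(640, 480, 8, 256)    # 256-color palette
indexed_4 = uncompressed_bytes(640, 480, 4, 16)     # 16-color palette

print(truecolor, indexed_8, indexed_4)  # 921600 307968 153648
```

In this example the 256-color version starts from roughly a third of the raw data, and the 16-color version from roughly a sixth. The saving in the final file depends on how well each version compresses.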

The infinite number of colors available through the lens of a camera is impossible to display on a computer screen; thus converting any photograph to a digital representation necessarily involves some quantization.

With the few colors available on early computers, different quantization algorithms produced very different-looking output images.

As a result, a great deal of effort went into writing sophisticated algorithms that produce more lifelike output.

Some vector graphics editors also utilize color quantization, especially for raster-to-vector techniques that create tracings of bitmap images with the help of edge detection.