Theory of computation

Some pioneers of the theory of computation were Ramon Llull, Alonzo Church, Kurt Gödel, Alan Turing, Stephen Kleene, Rózsa Péter, John von Neumann and Claude Shannon.

The statement that the halting problem cannot be solved by a Turing machine[7] is one of the most important results in computability theory, as it is an example of a concrete problem that is both easy to formulate and impossible to solve using a Turing machine.
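The reasoning behind this result can be sketched informally in Python rather than with formal Turing machines. The names `make_paradox`, `refute`, `always_yes` and `always_no` are hypothetical, and the sketch assumes that a candidate decider is deterministic and always returns an answer. Given any such candidate, one can build a program that does the opposite of what the candidate predicts for it, so the candidate must be wrong on at least one input.

```python
from typing import Callable

Program = Callable[[], None]
Decider = Callable[[Program], bool]


def make_paradox(candidate: Decider) -> Program:
    """Build a program that does the opposite of what `candidate` predicts for it."""
    def paradox() -> None:
        if candidate(paradox):
            # Predicted to halt, so loop forever instead.
            while True:
                pass
        # Predicted to loop forever, so halt immediately instead.
    return paradox


def refute(candidate: Decider) -> str:
    """Report how `candidate` fails on the program built against it."""
    paradox = make_paradox(candidate)
    if candidate(paradox):
        # Calling paradox() would never return, so it is not run here.
        return "candidate says 'halts', but paradox loops forever"
    paradox()  # returns at once, contradicting the 'loops forever' verdict
    return "candidate says 'loops forever', but paradox halted"


# Two deliberately naive candidate deciders (illustrative only).
always_yes: Decider = lambda program: True
always_no: Decider = lambda program: False

print(refute(always_yes))
print(refute(always_no))
```

The two candidates here are trivial, but `refute` applies the same construction to any decider passed to it, which is the core of the diagonal argument.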

Another important step in computability theory was Rice's theorem, which states that for all non-trivial properties of partial functions, it is undecidable whether a Turing machine computes a partial function with that property.[8]

Computability theory is closely related to the branch of mathematical logic called recursion theory, which removes the restriction of studying only models of computation which are reducible to the Turing model.

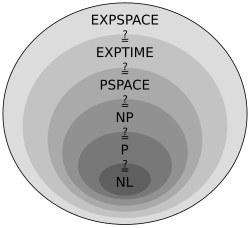

To analyze how much time and space a given algorithm requires, computer scientists express the time or space required to solve the problem as a function of the size of the input.

To simplify this problem, computer scientists have adopted big O notation, which allows functions to be compared in a way that ensures that particular aspects of a machine's construction do not need to be considered, but rather only the asymptotic behavior as problems become large.
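As a rough illustration of asymptotic behavior, the following sketch counts loop iterations for two ways of searching a sorted list. The function names are hypothetical, and the iteration count is only a stand-in for running time, chosen because it does not depend on any particular machine.

```python
def linear_search_steps(items, target):
    """Count the iterations a left-to-right scan performs."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the iterations binary search performs on a sorted list."""
    steps = 0
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


for n in (1_000, 1_000_000):
    data = list(range(n))
    missing = n  # larger than every element: a worst case for both searches
    print(n, linear_search_steps(data, missing), binary_search_steps(data, missing))
```

When the input grows from 1,000 to 1,000,000 elements, the linear count grows by the same factor of 1,000, while the binary count only rises from 10 to 20. In big O terms the first search is O(n) and the second is O(log n).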

The official problem description of the P versus NP problem was given by Turing Award winner Stephen Cook.

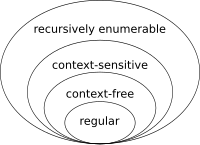

Regular expressions, for example, specify string patterns in many contexts, from office productivity software to programming languages.
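As a small illustration in one programming language, the sketch below uses Python's standard `re` module to test whether a string has the shape of a date written as four digits, two digits and two digits separated by hyphens. The pattern and the helper name `looks_like_date` are illustrative choices; the pattern checks only the shape of the string, not whether the date exists.

```python
import re

# Illustrative pattern: YYYY-MM-DD shape, ASCII digits only.
DATE_SHAPE = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")


def looks_like_date(text: str) -> bool:
    """Return True if the whole string matches the YYYY-MM-DD shape."""
    return DATE_SHAPE.fullmatch(text) is not None


print(looks_like_date("2024-01-15"))
print(looks_like_date("2024-1-15"))
```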

Finite automata, another formalism mathematically equivalent to regular expressions, are used in circuit design and in some kinds of problem-solving.
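The equivalence can be illustrated on a single language. The sketch below hand-builds a small deterministic finite automaton for the strings described by the regular expression `a+b` (one or more `a` followed by exactly one `b`) and compares it with Python's `re` module on a few inputs. The state names and the transition table are assumptions made for this example, and missing transitions are treated as an implicit rejecting dead state.

```python
import re

START, SEEN_A, ACCEPT = "start", "seen_a", "accept"

# Transition table of the hand-built automaton: (state, symbol) -> next state.
TRANSITIONS = {
    (START, "a"): SEEN_A,
    (SEEN_A, "a"): SEEN_A,
    (SEEN_A, "b"): ACCEPT,
}


def dfa_accepts(text: str) -> bool:
    """Run the automaton over `text` and report whether it ends in ACCEPT."""
    state = START
    for symbol in text:
        state = TRANSITIONS.get((state, symbol))
        if state is None:  # no transition defined: implicit dead state
            return False
    return state == ACCEPT


# The automaton and the regular expression agree on every sample string.
for sample in ["ab", "aaab", "", "b", "abb", "aba"]:
    assert dfa_accepts(sample) == (re.fullmatch(r"a+b", sample) is not None)
```

Agreement on a handful of samples is only a sanity check, not a proof of equivalence.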

Non-deterministic pushdown automata are another formalism equivalent to context-free grammars.
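As an illustration, consider the even-length palindromes over the letters `a` and `b`, which are generated by the context-free grammar S → aSa | bSb | ε. The sketch below simulates a non-deterministic pushdown automaton for this language by tracking every configuration the machine could be in. The function name `npda_accepts` and the two phase labels are hypothetical. The non-determinism lies in guessing where the middle of the input is.

```python
def npda_accepts(text: str) -> bool:
    """Simulate a non-deterministic PDA for even-length palindromes over {a, b}."""
    # A configuration is (phase, stack); the stack is a tuple with its top at the end.
    configs = {("push", ())}
    for symbol in text:
        next_configs = set()
        for phase, stack in configs:
            if phase == "push":
                # Choice 1: still in the first half, so push the symbol.
                next_configs.add(("push", stack + (symbol,)))
                # Choice 2: guess the middle was just passed and start matching.
                if stack and stack[-1] == symbol:
                    next_configs.add(("pop", stack[:-1]))
            elif stack and stack[-1] == symbol:
                # Second half: the symbol must match the top of the stack.
                next_configs.add(("pop", stack[:-1]))
        configs = next_configs
    # Accept if some branch has consumed the input with an empty stack.
    return any(stack == () for _, stack in configs)


for sample in ["", "aa", "abba", "baab", "ab", "aba", "abab"]:
    print(repr(sample), npda_accepts(sample))
```

Tracking a set of configurations is one common way to simulate non-deterministic choices on a deterministic computer; an actual pushdown automaton is instead defined by its transition relation.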