Confirmation bias

Another proposal is that people show confirmation bias because they are pragmatically assessing the costs of being wrong rather than investigating in a neutral, scientific way.

Flawed decisions due to confirmation bias have been found in a wide range of political, organizational, financial and scientific contexts.

For example, confirmation bias produces systematic errors in scientific research based on inductive reasoning (the gradual accumulation of supportive evidence).

In social media, confirmation bias is amplified by the use of filter bubbles, or "algorithmic editing", which display to individuals only information they are likely to agree with, while excluding opposing views.

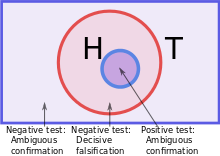

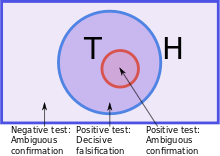

[8][9] Experiments have found repeatedly that people tend to test hypotheses in a one-sided way, by searching for evidence consistent with their current hypothesis.

[18] Similar studies have demonstrated how people engage in a biased search for information, but also that this phenomenon may be limited by a preference for genuine diagnostic tests.

In the Novum Organum, English philosopher and scientist Francis Bacon (1561–1626)[50] noted that biased assessment of evidence drove "all superstitions, whether in astrology, dreams, omens, divine judgments or the like".

In the second volume of his The World as Will and Representation (1844), German philosopher Arthur Schopenhauer observed that "An adopted hypothesis gives us lynx-eyes for everything that confirms it and makes us blind to everything that contradicts it."

In his 1894 essay The Kingdom of God Is Within You, Tolstoy had earlier written:[54] "The most difficult subjects can be explained to the most slow-witted man if he has not formed any idea of them already; but the simplest thing cannot be made clear to the most intelligent man if he is firmly persuaded that he knows already, without a shadow of doubt, what is laid before him."

In Peter Wason's initial experiment, published in 1960 (which does not mention the term "confirmation bias"), he repeatedly challenged participants to identify a rule applying to triples of numbers.

[60][61] Klayman and Ha's 1987 paper argues that the Wason experiments do not actually demonstrate a bias towards confirmation, but instead a tendency to make tests consistent with the working hypothesis.
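The distinction Klayman and Ha draw can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: it uses the commonly described version of the triples task, in which the experimenter's rule is "any ascending sequence" and the participant's working hypothesis is "each number is two more than the last". Neither rule is given in the text above, and the function names are hypothetical. It counts how many tests of each kind could expose the hypothesis as wrong.

```python
from itertools import product

# Assumed for illustration: the experimenter's hidden rule.
def true_rule(triple):
    a, b, c = triple
    return a < b < c  # any strictly ascending triple

# Assumed for illustration: the participant's narrower working hypothesis.
def working_hypothesis(triple):
    a, b, c = triple
    return b - a == 2 and c - b == 2  # numbers increasing by two

# All triples of integers from 1 to 10.
triples = list(product(range(1, 11), repeat=3))

# Positive tests: triples the hypothesis predicts will fit the rule.
positive_tests = [t for t in triples if working_hypothesis(t)]
# Negative tests: triples the hypothesis predicts will NOT fit the rule.
negative_tests = [t for t in triples if not working_hypothesis(t)]

def falsifying(tests):
    """Return the tests whose real outcome contradicts the hypothesis."""
    return [t for t in tests if working_hypothesis(t) != true_rule(t)]

positive_falsifiers = falsifying(positive_tests)
negative_falsifiers = falsifying(negative_tests)

print(len(positive_tests), "positive tests,", len(positive_falsifiers), "falsify")
print(len(negative_tests), "negative tests,", len(negative_falsifiers), "falsify")
```

Because the assumed hypothesis picks out a subset of the triples that satisfy the assumed rule, every test consistent with the hypothesis receives a "yes" and none can reveal the error. Only triples the hypothesis predicts will fail can do so. Under these assumptions, testing consistently with the working hypothesis is uninformative even if the participant has no motive to confirm it.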

[67] Cognitive explanations for confirmation bias are based on limitations in people's ability to handle complex tasks, and the shortcuts, called heuristics, that they use.

[3]: 198 Explanations in terms of cost-benefit analysis assume that people do not just test hypotheses in a disinterested way, but assess the costs of different errors.

[74] Using ideas from evolutionary psychology, James Friedrich suggests that people do not primarily aim at truth in testing hypotheses, but try to avoid the most costly errors.
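One way to make the cost asymmetry concrete is a standard expected-cost comparison. This is a generic decision-theory sketch, not Friedrich's own formalization; the function names and the cost values are assumptions chosen for illustration. It shows that when wrongly rejecting a hypothesis is much more costly than wrongly accepting it, accepting becomes the cheaper action even at a low probability that the hypothesis is true.

```python
def acceptance_threshold(cost_false_accept, cost_false_reject):
    """Probability of the hypothesis above which accepting it is cheaper.

    Accepting costs (1 - p) * cost_false_accept in expectation and
    rejecting costs p * cost_false_reject, so accepting is cheaper when
    p > cost_false_accept / (cost_false_accept + cost_false_reject).
    """
    return cost_false_accept / (cost_false_accept + cost_false_reject)

def cheaper_action(p_true, cost_false_accept, cost_false_reject):
    """Return the action with the lower expected cost."""
    expected_cost_accept = (1 - p_true) * cost_false_accept
    expected_cost_reject = p_true * cost_false_reject
    return "accept" if expected_cost_accept < expected_cost_reject else "reject"

# Hypothetical costs: equal errors versus a costly false rejection.
symmetric = acceptance_threshold(1.0, 1.0)
asymmetric = acceptance_threshold(1.0, 9.0)

print("threshold with equal costs:", symmetric)
print("threshold when false rejection is 9x as costly:", asymmetric)
print("action at p = 0.2, equal costs:", cheaper_action(0.2, 1.0, 1.0))
print("action at p = 0.2, asymmetric costs:", cheaper_action(0.2, 1.0, 9.0))
```

On this reading, a person who demands little evidence before accepting a belief may be minimizing expected cost rather than seeking truth in a neutral way.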

However, if the external parties to whom people must justify themselves are overly aggressive or critical, people will disengage from thought altogether and simply assert their personal opinions without justification.

Lerner and Tetlock say that people only push themselves to think critically and logically when they know in advance they will need to explain themselves to others who are well-informed, genuinely interested in the truth, and whose views they do not already know.

Economist Weijie Zhong has developed a model demonstrating that individuals who must make decisions under time pressure, and who face costs for obtaining more information, will often prefer confirmatory signals.

According to this model, when individuals believe strongly in a certain hypothesis, they optimally seek information that confirms it, allowing them to build confidence more efficiently.


[84] Some have argued that confirmation bias is the reason why society can never escape from filter bubbles, because individuals are psychologically hardwired to seek information that agrees with their preexisting values and beliefs.

Confirmation bias (selecting or reinterpreting evidence to support one's beliefs) is cited as one of three main hurdles that lead critical thinking astray in these circumstances.

[115] Nickerson argues that reasoning in judicial and political contexts is sometimes subconsciously biased, favoring conclusions that judges, juries or governments have already committed to.

[3]: 191–193 Since the evidence in a jury trial can be complex, and jurors often reach decisions about the verdict early on, it is reasonable to expect an attitude polarization effect.

[118] Confirmation bias can be a factor in creating or extending conflicts, from emotionally charged debates to wars: by interpreting the evidence in their favor, each opposing party can become overconfident that it is in the stronger position.

For example, psychologists Stuart Sutherland and Thomas Kida have each argued that U.S. Navy Admiral Husband E. Kimmel showed confirmation bias when playing down the first signs of the Japanese attack on Pearl Harbor.

[133] As a striking illustration of confirmation bias in the real world, Nickerson mentions numerological pyramidology: the practice of finding meaning in the proportions of the Egyptian pyramids.

[136] A less abstract study was the Stanford biased interpretation experiment, in which participants with strong opinions about the death penalty read about mixed experimental evidence.

[30][135][137] Based on these experiments, Deanna Kuhn and Joseph Lao concluded that polarization is a real phenomenon but far from inevitable, happening in only a small minority of cases, and that it was prompted not only by considering mixed evidence but also by merely thinking about the topic.

[135] Charles Taber and Milton Lodge argued that the Stanford team's result had been hard to replicate because the arguments used in later experiments were too abstract or confusing to evoke an emotional response.

[145] The term belief perseverance, however, was coined in a series of experiments using what is called the "debriefing paradigm": participants read fake evidence for a hypothesis, their attitude change is measured, then the fakery is exposed in detail.

For example, people form a more positive impression of someone described as "intelligent, industrious, impulsive, critical, stubborn, envious" than of someone described with the same words in reverse order.