Data synchronization

Extract, transform, load (ETL) tools can be helpful at this stage for managing the complexities of differing data formats.
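As an illustration of the kind of format mismatch an ETL step absorbs, the following minimal sketch extracts rows from a CSV export, transforms day/month/year dates into ISO 8601 and quantities into integers, and loads the result into an in-memory SQLite table. The source data, column names, and the `orders` table are hypothetical; real ETL tools add scheduling, error handling, and many more connectors.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical source export: dates as DD/MM/YYYY, quantities as strings.
SOURCE_CSV = """order_id,order_date,quantity
A-1,03/02/2024,5
A-2,15/02/2024,12
"""

def extract(text):
    """Extract: read rows from the source system's CSV export."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: convert dates to ISO 8601 and quantities to integers."""
    out = []
    for row in rows:
        date = datetime.strptime(row["order_date"], "%d/%m/%Y").date().isoformat()
        out.append((row["order_id"], date, int(row["quantity"])))
    return out

def load(conn, records):
    """Load: write the normalized records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id TEXT PRIMARY KEY, date TEXT, qty INTEGER)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
# [('A-1', '2024-02-03', 5), ('A-2', '2024-02-15', 12)]
```

Keeping the three stages as separate functions means the transformation rules can change without touching how data is read from the source or written to the target.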

This shows the need for a real-time system that is continuously updated to keep the manufacturing process running smoothly, e.g., ordering material when the enterprise is running out of stock, synchronizing customer orders with the manufacturing process, etc.

There are many real-world examples in which real-time processing provides a competitive advantage.

Even when security is maintained correctly in the source system that captures the data, security and information-access privileges must also be enforced on the target systems to prevent potential misuse of the information.

There are five different phases involved in the data synchronization process, and each of them is critical.

With large amounts of data, the synchronization process must be carefully planned and executed to avoid degrading performance.
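One common way to limit the load of a large synchronization is to copy only what has changed since the last run and to apply it in small batches. The sketch below assumes each source row carries a hypothetical `updated_at` value that is a unique, increasing change sequence number; the `Row` type, the dictionary target, and the batch size are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass

@dataclass
class Row:
    key: str
    value: str
    updated_at: int  # hypothetical change sequence number, assumed unique and increasing

def incremental_sync(source, target, watermark, batch_size=2):
    """Copy only rows changed after `watermark`, in small batches.

    Returns the new watermark and the number of batches applied.
    """
    changed = sorted((r for r in source if r.updated_at > watermark),
                     key=lambda r: r.updated_at)
    batches = 0
    for start in range(0, len(changed), batch_size):
        batch = changed[start:start + batch_size]
        for row in batch:
            target[row.key] = row.value
        # Advance the watermark only after the whole batch is applied, so an
        # interrupted run can resume after the last completed batch.
        watermark = batch[-1].updated_at
        batches += 1
    return watermark, batches
```

For example, with a target that has already seen change 1, only the three later changes are copied, in two batches:

```python
source = [Row("a", "1", 1), Row("b", "2", 2), Row("c", "3", 3), Row("a", "4", 4)]
target = {"a": "1"}
print(incremental_sync(source, target, watermark=1))  # (4, 2)
```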

Typically, it is assumed that these strings differ by up to a fixed number of edits (i.e., character insertions, deletions, or modifications).[7]

In fault-tolerant systems, distributed databases must be able to cope with the loss or corruption of (part of) their data.
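The number of edits assumed to separate two strings in string reconciliation is the Levenshtein (edit) distance. The minimal sketch below computes it with the standard dynamic-programming recurrence and checks whether two strings are within `k` edits; the function names are hypothetical. It needs both strings in one place, so it illustrates the quantity being bounded rather than a reconciliation protocol between two hosts.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, or substitutions that turn a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]

def within_edits(a: str, b: str, k: int) -> bool:
    """Check the bounded-difference assumption: at most k edits apart."""
    return edit_distance(a, b) <= k

print(edit_distance("kitten", "sitting"))  # 3
```

Only two rows of the table are kept at a time, so memory grows with the length of `b` rather than with the product of both lengths.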

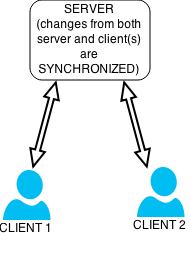

The first step is usually replication, which involves making multiple copies of the data and keeping them all up to date as changes are made.

The simplest approach is to have a single master instance that is the sole source of truth.
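A minimal sketch of single-master replication, assuming that every write goes to the master and that replicas catch up by replaying the master's ordered log of changes. The `Master` and `Replica` classes are hypothetical and ignore networking, failures, and concurrency.

```python
class Master:
    """Sole source of truth: every write is applied here first."""
    def __init__(self):
        self.data = {}
        self.log = []  # ordered list of (key, value) writes

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))

class Replica:
    """Read-only copy that catches up by replaying the master's log."""
    def __init__(self):
        self.data = {}
        self.applied = 0  # number of log entries already applied

    def sync_from(self, master):
        # Replay only the entries this replica has not seen yet, in order.
        for key, value in master.log[self.applied:]:
            self.data[key] = value
        self.applied = len(master.log)

m, r = Master(), Replica()
m.write("x", 1)
m.write("y", 2)
r.sync_from(m)
m.write("x", 3)
r.sync_from(m)
print(r.data)  # {'x': 3, 'y': 2}
```

Because replicas apply the log in the master's order, they converge to the master's state; between syncs they may serve out-of-date reads.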

Paxos and Raft are more complex consensus protocols that address transient problems during failover, such as two instances both believing they are the master at the same time.
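One ingredient Raft uses against two simultaneous masters is the term number: each election starts a new term, and a server rejects requests carrying a term older than its own. The sketch below shows only that comparison rule on a hypothetical `Follower` class; leader election, log matching, and commit rules are omitted, so it is not an implementation of Raft.

```python
class Follower:
    """Accepts entries only from a leader whose term is not stale."""
    def __init__(self):
        self.current_term = 0
        self.entries = []

    def append(self, leader_term, entry):
        if leader_term < self.current_term:
            return False  # request from a deposed leader of an older term: reject
        self.current_term = leader_term  # adopt the newer (or equal) term
        self.entries.append(entry)
        return True

f = Follower()
f.append(2, "from new leader")     # True: term 2 becomes current
f.append(1, "from stale leader")   # False: term 1 is older than 2
print(f.entries)  # ['from new leader']
```

An old master that has not yet noticed it was replaced therefore cannot get its writes accepted once the followers have seen the newer term.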

If corrupt or out-of-date data may be present on some nodes, this approach may also benefit from the use of an error correction code.
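As a simple illustration, three replicas of the same data already form a 3-fold repetition code, one of the simplest error-correcting codes: a bitwise two-out-of-three majority vote recovers any bit that is corrupted in only one replica. The sketch assumes equal-length byte-string replicas and a hypothetical `majority_vote` helper; if the same bit is corrupted in two replicas, the vote returns the wrong value.

```python
def majority_vote(a: bytes, b: bytes, c: bytes) -> bytes:
    """Bitwise 2-of-3 majority across three equal-length replicas.

    Each output bit agrees with at least two replicas, so a bit flipped
    in only one replica is corrected.
    """
    if not (len(a) == len(b) == len(c)):
        raise ValueError("replicas must have equal length")
    return bytes((x & y) | (x & z) | (y & z) for x, y, z in zip(a, b, c))

good = b"sync"
r1 = bytes([good[0] ^ 0b01]) + good[1:]  # bit 0 of byte 0 corrupted
r2 = bytes([good[0] ^ 0b10]) + good[1:]  # bit 1 of byte 0 corrupted
print(majority_vote(r1, r2, good) == good)  # True
```

Dedicated codes such as Reed-Solomon achieve the same protection with far less storage overhead than keeping full extra copies.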

Distributed hash tables (DHTs) and blockchains attempt to solve the problem of synchronization between many nodes (hundreds to billions).
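Many DHTs, Chord among them, decide which node stores a key with consistent hashing: nodes and keys are hashed onto the same ring, and a key belongs to the next node clockwise. The minimal sketch below shows only that placement rule (no routing, replication, or membership protocol), using SHA-256 as an assumed ring hash and a hypothetical `HashRing` class. Blockchains rely on different mechanisms and are not covered here.

```python
import bisect
import hashlib

def _hash(value: str) -> int:
    # Stable 64-bit position on the ring (Python's hash() varies per process).
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

class HashRing:
    """Consistent hashing: a key belongs to the first node clockwise
    from the key's position on the ring."""
    def __init__(self, nodes):
        self._ring = sorted((_hash(n), n) for n in nodes)
        self._points = [p for p, _ in self._ring]

    def node_for(self, key: str) -> str:
        i = bisect.bisect_right(self._points, _hash(key))
        return self._ring[i % len(self._ring)][1]  # wrap around past the end

ring = HashRing(["node-a", "node-b", "node-c"])
print(ring.node_for("order:42"))  # one of the three nodes, always the same one
```

The point of this design is that adding a node moves only the keys that now fall just before it on the ring; every other key keeps its owner, so a join or leave does not force the whole data set to be resynchronized.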