Dual process theory (moral psychology)

Initially proposed by Joshua Greene along with Brian Sommerville, Leigh Nystrom, John Darley, Jonathan David Cohen and others,[1][2][3] the theory can be seen as a domain-specific example of more general dual process accounts in psychology, such as Daniel Kahneman's "System 1"/"System 2" distinction popularised in his book Thinking, Fast and Slow.

The original fMRI investigation[1] proposing the dual process account has been cited by more than 2,000 scholarly articles, prompting extensive use of similar methodology as well as criticism.

He calls this the Central Tension Principle: moral judgments that can be characterised as deontological are preferentially supported by automatic-emotional processes and intuitions.

In "manual mode", judgments draw from both general knowledge about "how the world works" and explicit understanding of special situational features.

While a photographer can switch back and forth between automatic and manual mode, the automatic-intuitive processes of human reasoning are always active: conscious deliberation needs to "override" our intuitions.

This further implies that deontological responders will not experience any conflict from the "utilitarian pull" of the dilemma: they have not engaged in the processing that gives rise to these considerations in the first place.

Greene uses fMRI to examine the brain activity and responses of people confronted with different variants of the famous trolley problem in ethics.

It was observed that, when responding to personal dilemmas, subjects displayed increased activity in brain regions associated with emotion (the medial prefrontal cortex, the posterior cingulate cortex/precuneus, the posterior superior temporal sulcus/inferior parietal lobule and the amygdala), whereas when responding to impersonal dilemmas, they displayed increased activity in regions associated with working memory (the dorsolateral prefrontal cortex and the parietal lobe).

Greene points to a large body of evidence from cognitive science suggesting that the inclination toward deontological or consequentialist judgment depends on whether emotional-intuitive reactions or more calculated ones are involved in making the judgment.

Further evidence shows that consequentialist responses to trolley-problem-like dilemmas are associated with deficits in emotional awareness in people with alexithymia or psychopathic tendencies.

Neuropsychological evidence from lesion studies focusing on patients with damage to the ventromedial prefrontal cortex also points to a possible dissociation between emotional and rational decision processes.

On 13 September 1848, while working on a railway track in Vermont, Phineas Gage was involved in an accident: an "iron rod used to cram down the explosive powder shot into Gage's cheek, went through the front of his brain, and exited via the top of his head".

Another critical piece of evidence supporting the dual process account comes from reaction time data associated with moral dilemma experiments.

Cognitive load, in general, has also been found to increase the likelihood of "deontological" judgment.[32] These laboratory findings are supplemented by work examining the decision-making processes of real-world altruists in life-or-death situations.

For instance, he mentions the "common or natural cause of our passions" and the generation of love for others represented through self-sacrifice for the greater good of the group.

Although patients with this damage display characteristically "cold-blooded" behaviour in the trolley problem, they are more likely to endorse emotionally laden choices in the Ultimatum Game.

Kahane and Shackel scrutinize the questions and dilemmas Greene et al. use, and claim that the methodology used in the neuroscientific study of intuitions needs to be improved.

Although this thought experiment involves personal harm, the philosopher Frances Kamm arrives at an intuitive consequentialist judgement, holding that it is permissible to kill one to save five.

Guzmán, Barbato, Sznycer, and Cosmides pointed out that moral dilemmas were a recurrent adaptive problem for ancestral humans, whose social life created multiple responsibilities to others (siblings, parents and offspring, cooperative partners, coalitional allies, and so on).

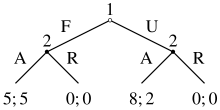

According to Greene and colleagues, people experience the footbridge problem as a dilemma because "two [dissociable psychological] processes yield different answers to the same question".

However, if the siblings take extreme precautions, such as vasectomy, to eliminate the risk of producing offspring with genetic defects, the original cause of the moral intuition is no longer relevant.

The appropriateness of applying our intuitive and automatic mode of reasoning to a given moral problem thus hinges on how the process was formed in the first place.

Several philosophers have written critical responses, mainly criticising the supposedly necessary link between the type of process (automatic or controlled, intuitive or counterintuitive/rational) and the content of the judgment (deontological or utilitarian, respectively).

[54] Robert Wright has called Joshua Greene's proposal for global harmony ambitious, adding, "I like ambition!"

As a matter of fact, Greene's research[2] itself shows that consequentialist responses to personal moral dilemmas involve at least one brain region, the posterior cingulate, that is associated with emotional processes.

Hence, in the moral domain, where these notions are highly disputed, "it is question begging to assume that the emotional processes underwriting deontological intuitions consist in heuristics".

Additionally, he argues that, as far as we know, consequentialist judgements may also rely on heuristics, given that it is highly unlikely that they could always be the product of accurate and comprehensive mental calculations of all the possible outcomes.

Many philosophers appeal to what is colloquially known as the yuck factor: the belief that a widespread negative intuition towards something is evidence that there is something morally wrong about it.

He lists examples of the various unpalatable consequences of cloning and appeals to notions of human nature and dignity to show that our disgust is the emotional expression of deep wisdom that is not fully articulable.

Increasing an agent's empathy by artificially raising oxytocin levels will likely be ineffective in improving their overall moral agency, because such a disposition relies heavily on psychological, social and situational contexts, as well as their deeply held convictions and beliefs.