Entropy

Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness, or uncertainty.

The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory.

[2] In 1865, German physicist Rudolf Clausius, one of the leading founders of the field of thermodynamics, defined entropy as the quotient of an infinitesimal amount of heat and the instantaneous temperature.
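In modern notation, this definition is usually written as the differential relation below. The symbols are the conventional textbook ones rather than anything fixed by this article: $dS$ is the infinitesimal change in entropy, $\delta Q_{\text{rev}}$ is the infinitesimal amount of heat exchanged along a reversible path, and $T$ is the absolute temperature at which the exchange takes place.

$$
dS = \frac{\delta Q_{\text{rev}}}{T}
$$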

In his 1803 paper Fundamental Principles of Equilibrium and Movement, the French mathematician Lazare Carnot proposed that in any machine, the accelerations and shocks of the moving parts represent losses of moment of activity; in any natural process there exists an inherent tendency towards the dissipation of useful energy.

[6] Clausius described his observations as a dissipative use of energy, resulting in a transformation-content (Verwandlungsinhalt in German) of a thermodynamic system or working body of chemical species during a change of state.

Later, scientists such as Ludwig Boltzmann, Josiah Willard Gibbs, and James Clerk Maxwell gave entropy a statistical basis.

[10] Any method involving the notion of entropy, the very existence of which depends on the second law of thermodynamics, will doubtless seem to many far-fetched, and may repel beginners as obscure and difficult of comprehension.

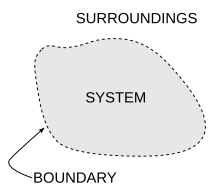

The concept of entropy is described by two principal approaches: the macroscopic perspective of classical thermodynamics and the microscopic description central to statistical mechanics.

The classical approach defines entropy in terms of macroscopically measurable physical properties, such as bulk mass, volume, pressure, and temperature.

The two approaches form a consistent, unified view of the same phenomenon as expressed in the second law of thermodynamics, which has found universal applicability to physical processes.

A system composed of a pure substance in a single phase at a particular uniform temperature and pressure is fully determined; it is thus in a particular state and has a particular volume.

Additionally, descriptions of devices operating near the limit of de Broglie waves, e.g. photovoltaic cells, have to be consistent with quantum statistics.

In statistical mechanics, entropy is interpreted as the measure of uncertainty, disorder, or, in Gibbs's phrase, mixedupness that remains about a system after its observable macroscopic properties, such as temperature, pressure, and volume, have been taken into account.

For a given set of macroscopic variables, the entropy measures the degree to which the probability of the system is spread out over different possible microstates.
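A minimal sketch of this idea, assuming the standard Gibbs form $S = -k_B \sum_i p_i \ln p_i$ for a discrete set of microstate probabilities. The function name `gibbs_entropy` and the four-microstate examples are illustrative choices, not part of the article.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def gibbs_entropy(probabilities, k=K_B):
    """Return S = -k * sum(p_i * ln p_i) over microstate probabilities."""
    total = sum(probabilities)
    if any(p < 0 for p in probabilities) or not math.isclose(total, 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must be non-negative and sum to 1")
    # Microstates with p = 0 contribute nothing, since p * ln p -> 0 as p -> 0.
    return -k * sum(p * math.log(p) for p in probabilities if p > 0)


# Probability concentrated on a single microstate: no uncertainty, zero entropy.
certain = gibbs_entropy([1.0, 0.0, 0.0, 0.0], k=1.0)

# Probability spread evenly over four microstates: the maximum for four states, ln 4.
uniform = gibbs_entropy([0.25] * 4, k=1.0)
```

Passing `k=1.0` gives the entropy in units of the Boltzmann constant, which makes the comparison between the concentrated and the evenly spread distribution easier to read.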

In contrast to the macrostate, which characterizes plainly observable average quantities, a microstate specifies all molecular details about the system including the position and momentum of every molecule.

The density matrix formalism is not required if the system happens to be in thermal equilibrium, so long as the basis states are chosen to be eigenstates of the Hamiltonian.

In an isolated system such as the room and ice water taken together, the dispersal of energy from warmer to cooler always results in a net increase in entropy.

Thus, when the "universe" of the room and ice water system has reached a temperature equilibrium, the entropy change from the initial state is at a maximum.
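The net increase can be illustrated with a rough calculation, assuming both the room and the ice water stay at essentially constant temperature while a small amount of heat passes between them. The function name and the numerical values are hypothetical and chosen only for illustration.

```python
def entropy_change_of_heat_flow(heat_joules, t_warm_kelvin, t_cool_kelvin):
    """Net entropy change when heat flows from a warm body to a cool one.

    Assumes both temperatures stay essentially constant during the transfer.
    """
    if t_warm_kelvin <= 0 or t_cool_kelvin <= 0:
        raise ValueError("temperatures must be positive (kelvin)")
    ds_warm = -heat_joules / t_warm_kelvin  # the warm room loses entropy
    ds_cool = heat_joules / t_cool_kelvin   # the cool ice water gains more
    return ds_warm + ds_cool


# Hypothetical numbers: 1000 J flowing from a 293.15 K room into 273.15 K ice water.
net = entropy_change_of_heat_flow(1000.0, 293.15, 273.15)
```

With these numbers the net change is about 0.25 J/K. It is positive because the same quantity of heat is divided by a lower temperature on the receiving side than on the giving side.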

These proofs are based on the probability density of microstates of the generalised Boltzmann distribution and the identification of the thermodynamic internal energy as the ensemble average $U = \langle E_i \rangle$.

The second law of thermodynamics states that entropy in an isolated system — the combination of a subsystem under study and its surroundings — increases during all spontaneous chemical and physical processes.

Here $\dot{S}_{\text{gen}}$ is the rate of entropy generation within the system, e.g. by chemical reactions, phase transitions, internal heat transfer or frictional effects such as viscosity.

These equations also apply for expansion into a finite vacuum or a throttling process, where the temperature, internal energy and enthalpy for an ideal gas remain constant.
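For reference, the standard textbook result for an ideal gas whose temperature is the same in the initial and final states is shown below, on the assumption that these are the relations meant here. The symbols are conventional rather than taken from this text: $n$ is the amount of gas in moles, $R$ is the molar gas constant, and $V_0, P_0$ and $V, P$ are the initial and final volume and pressure.

$$
\Delta S = nR \ln\frac{V}{V_0} = -nR \ln\frac{P}{P_0}
$$

Because entropy is a state function, the result depends only on the end states, which is why it holds for a free expansion even though no heat is exchanged along the actual path.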

In this direction, several recent authors have derived exact entropy formulas to account for and measure disorder and order in atomic and molecular assemblies.

Ambiguities in the terms disorder and chaos, which usually have meanings directly opposed to equilibrium, contribute to widespread confusion and hamper comprehension of entropy for most students.

Von Neumann established a rigorous mathematical framework for quantum mechanics with his work Mathematische Grundlagen der Quantenmechanik.

The obtained data allows the user to integrate the equation above, yielding the absolute value of entropy of the substance at the final temperature.
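As a sketch of how such an integration might be carried out numerically, the code below applies the trapezoidal rule to $C_p / T$ over a table of measured temperatures and heat capacities. It assumes the integrand has this form, and it ignores both the contribution below the lowest measured temperature (usually handled by extrapolation) and any latent heats of phase transitions. The function name and the data are hypothetical.

```python
def entropy_from_heat_capacity(temperatures, heat_capacities):
    """Trapezoidal estimate of the integral of C_p / T dT over the measured range.

    temperatures: strictly increasing, positive values in kelvin.
    heat_capacities: C_p at each temperature, e.g. in J/(mol K).
    """
    if len(temperatures) != len(heat_capacities) or len(temperatures) < 2:
        raise ValueError("need two equally long sequences with at least two points")
    if temperatures[0] <= 0:
        raise ValueError("temperatures must be positive (kelvin)")
    integrand = [c / t for t, c in zip(temperatures, heat_capacities)]
    total = 0.0
    for i in range(1, len(temperatures)):
        dt = temperatures[i] - temperatures[i - 1]
        if dt <= 0:
            raise ValueError("temperatures must be strictly increasing")
        total += 0.5 * (integrand[i] + integrand[i - 1]) * dt
    return total


# Hypothetical data: C_p rising linearly with T, so C_p / T is constant.
temps = [10.0 * i for i in range(1, 11)]   # 10 K to 100 K
cps = [0.1 * t for t in temps]             # made-up values in J/(mol K)
entropy_gain = entropy_from_heat_capacity(temps, cps)
```

For this made-up data set the integrand is constant at 0.1, so the estimate should be very close to 0.1 × 90 K = 9 J/(mol K), which gives a simple sanity check on the routine.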

[92][93][94] Jacob Bekenstein and Stephen Hawking have shown that black holes have the maximum possible entropy of any object of equal size.
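The result is usually quoted as the Bekenstein–Hawking formula below. The symbols are the conventional ones and are not defined elsewhere in this text: $A$ is the area of the event horizon, $k_B$ is the Boltzmann constant, $c$ is the speed of light, $G$ is the gravitational constant, and $\hbar$ is the reduced Planck constant.

$$
S_{\text{BH}} = \frac{k_B c^3 A}{4 G \hbar}
$$

The entropy scales with the horizon area rather than with the enclosed volume.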

Recent work has cast some doubt on the heat death hypothesis and the applicability of any simple thermodynamic model to the universe in general.

[102]: 204f [103]: 29–35 Although Georgescu-Roegen's work was blemished somewhat by mistakes, a full chapter on his economics has been approvingly included in one elementary physics textbook on the historical development of thermodynamics.

[105]: 116 Since the 1990s, leading ecological economist and steady-state theorist Herman Daly – a student of Georgescu-Roegen – has been the economics profession's most influential proponent of the entropy pessimism position.