Formal language

The alphabet of a formal language consists of symbols, letters, or tokens that concatenate into strings called words.

In computational complexity theory, decision problems are typically defined as formal languages, and complexity classes are defined as the sets of the formal languages that can be parsed by machines with limited computational power.

The field of formal language theory studies primarily the purely syntactic aspects of such languages—that is, their internal structural patterns.

In the 17th century, Gottfried Leibniz imagined and described the characteristica universalis, a universal and formal language which utilised pictographs.

[2] Gottlob Frege attempted to realize Leibniz's ideas, through a notational system first outlined in Begriffsschrift (1879) and more fully developed in his 2-volume Grundgesetze der Arithmetik (1893/1903).

The last of these introduced what Emil Post later termed 'Thue Systems', and gave an early example of an undecidable problem.

[5] Post would later use this paper as the basis for a 1947 proof "that the word problem for semigroups was recursively insoluble",[6] and later devised the canonical system for the creation of formal languages.

He published "Sobre un sistema de notaciones y símbolos destinados a facilitar la descripción de las máquinas" ("On a system of notations and symbols intended to facilitate the description of machines").

[7] Heinz Zemanek rated it as an equivalent to a programming language for the numerical control of machine tools.

[9] In 1959 John Backus developed the Backus-Naur form to describe the syntax of a high level programming language, following his work in the creation of FORTRAN.

An alphabet, in the context of formal languages, can be any set; its elements are called letters.

In computer science and mathematics, which do not usually deal with natural languages, the adjective "formal" is often omitted as redundant.

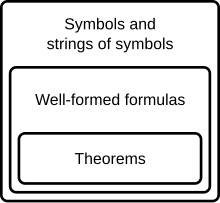

The notion of a formal grammar may be closer to the intuitive concept of a "language", one described by syntactic rules.

However, even over a finite (non-empty) alphabet such as Σ = {a, b} there are an infinite number of finite-length words that can potentially be expressed: "a", "abb", "ababba", "aaababbbbaab", ....

Formal languages may be classified in the Chomsky hierarchy based on the expressive power of their generative grammar as well as the complexity of their recognizing automaton.

A lexical analyzer, sometimes generated by a tool like lex, identifies the tokens of the programming language grammar, e.g. identifiers or keywords, numeric and string literals, punctuation and operator symbols, which are themselves specified by a simpler formal language, usually by means of regular expressions.

At the most basic conceptual level, a parser, sometimes generated by a parser generator like yacc, attempts to decide if the source program is syntactically valid, that is if it is well formed with respect to the programming language grammar for which the compiler was built.

Of course, compilers do more than just parse the source code – they usually translate it into some executable format.

Because of this, a parser usually outputs more than a yes/no answer, typically an abstract syntax tree.