Generative pre-trained transformer

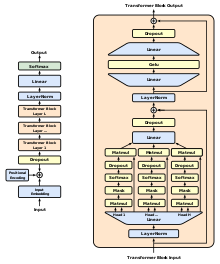

[6] It is based on the transformer deep learning architecture, pre-trained on large data sets of unlabeled text, and able to generate novel human-like content.

[13] Companies in different industries have developed task-specific GPTs in their respective fields, such as Salesforce's "EinsteinGPT" (for CRM)[14] and Bloomberg's "BloombergGPT" (for finance).

[26] During the 2010s, the problem of machine translation was solved[citation needed] by recurrent neural networks with an added attention mechanism.

[30] Prior to transformer-based architectures, the best-performing neural NLP (natural language processing) models commonly employed supervised learning from large amounts of manually labeled data.

The reliance on supervised learning limited their use on datasets that were not well-annotated, and also made it prohibitively expensive and time-consuming to train extremely large language models.

[citation needed] In July 2021, OpenAI published Codex, a task-specific GPT model targeted for programming applications.

[31] In March 2022, OpenAI published two versions of GPT-3 that were fine-tuned for instruction-following (instruction-tuned), named davinci-instruct-beta (175B) and text-davinci-001,[32] and then started beta testing code-davinci-002.

It can be accessed directly by users via a premium version of ChatGPT, and is available to developers for incorporation into other products and services via OpenAI's API.

The most recent model in that series is GPT-4, for which OpenAI declined to publish the size or training details (citing "the competitive landscape and the safety implications of large-scale models").

[52] A foundational GPT model can be further adapted to produce more targeted systems directed to specific tasks and/or subject-matter domains.

[54][55] Advantages this had over the bare foundational models included higher accuracy, less negative/toxic sentiment, and generally better alignment with user needs.

In November 2022, OpenAI launched ChatGPT—an online chat interface powered by an instruction-tuned language model trained in a similar fashion to InstructGPT.

[61] Yet another kind of task that a GPT can be used for is the meta-task of generating its own instructions, like developing a series of prompts for 'itself' to be able to effectuate a more general goal given by a human user.

[62] This is known as an AI agent, and more specifically a recursive one because it uses results from its previous self-instructions to help it form its subsequent prompts; the first major example of this was Auto-GPT (which uses OpenAI's GPT models), and others have since been developed as well.
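
The recursive self-prompting loop described above can be illustrated with a minimal sketch. This is not how Auto-GPT or any particular agent is implemented; the names `run_agent` and `toy_model`, the prompt wording, and the "DONE" stop signal are all hypothetical, and `toy_model` is a canned stand-in for a call to a real GPT model.

```python
from typing import Callable, List


def run_agent(goal: str, model: Callable[[str], str], max_steps: int = 3) -> List[str]:
    """Ask the model for a step, then build the next prompt from its own output."""
    history: List[str] = []
    prompt = f"Goal: {goal}\nPropose the first step."
    for _ in range(max_steps):
        result = model(prompt)
        history.append(result)
        # Assumed convention: the model replies "DONE" when the goal is met.
        if result.strip().upper().startswith("DONE"):
            break
        # The recursion: the previous self-generated result feeds the next prompt.
        prompt = f"Goal: {goal}\nPrevious result: {result}\nPropose the next step."
    return history


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a GPT call; returns canned text only."""
    if "Previous result: step 2" in prompt:
        return "DONE"
    if "Previous result: step 1" in prompt:
        return "step 2"
    return "step 1"


if __name__ == "__main__":
    print(run_agent("write a report", toy_model, max_steps=5))
```

In a real agent, the `model` argument would wrap an API call to a language model, and the `max_steps` cap guards against loops that never reach a stopping condition.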

[64] Also, advances in text-to-speech technology offer tools for audio content creation when used in conjunction with foundational GPT language models.

[79] In May 2023, OpenAI engaged a brand management service to notify its API customers of this policy, although these notifications stopped short of making overt legal claims (such as allegations of trademark infringement or demands to cease and desist).

[3][89][90][91] In any event, to whatever extent exclusive rights in the term may occur in the U.S., others would need to avoid using it for similar products or services in ways likely to cause confusion.