Gaussian process

In probability theory and statistics, a Gaussian process is a stochastic process (a collection of random variables indexed by time or space), such that every finite collection of those random variables has a multivariate normal distribution.

Gaussian processes are useful in statistical modelling, benefiting from properties inherited from the normal distribution.

While exact models often scale poorly as the amount of data increases, several approximation methods have been developed that retain good accuracy while drastically reducing computation time.

A key fact of Gaussian processes is that they can be completely defined by their second-order statistics.

Importantly, the non-negative definiteness of the covariance function enables its spectral decomposition using the Karhunen–Loève expansion.
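On a finite grid, the discrete analogue of this spectral decomposition is the eigendecomposition of the covariance matrix. The following is a minimal sketch of that idea, not a definitive implementation: the squared-exponential kernel, the grid on [0, 1], the number of retained terms, and the function names `squared_exponential` and `kl_sample` are all assumptions made for illustration.

```python
import numpy as np

def squared_exponential(x, y, length_scale=0.2):
    """Squared-exponential kernel evaluated on all pairs of 1-D inputs."""
    diff = x[:, None] - y[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def kl_sample(cov, num_terms, rng):
    """Draw one sample from N(0, cov) using only the leading eigenpairs."""
    eigvals, eigvecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:num_terms]   # indices of the largest eigenvalues
    lam = np.clip(eigvals[order], 0.0, None)        # guard against tiny negative round-off
    z = rng.standard_normal(num_terms)              # independent N(0, 1) coefficients
    return eigvecs[:, order] @ (np.sqrt(lam) * z)   # truncated expansion

grid = np.linspace(0.0, 1.0, 50)
cov = squared_exponential(grid, grid)
rng = np.random.default_rng(0)
path = kl_sample(cov, num_terms=10, rng=rng)
```

Because the matrix is non-negative definite, all eigenvalues are non-negative up to round-off, so the square roots in the expansion are well defined.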

Basic aspects that can be defined through the covariance function are the process's stationarity, isotropy, smoothness and periodicity.
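These properties can be checked numerically on simple kernels. The sketch below is illustrative only: the three kernels and their parameter names (`length_scale`, `period`) are common textbook choices assumed here, not ones prescribed by the text above.

```python
import numpy as np

def squared_exponential(x, y, length_scale=1.0):
    """Stationary and very smooth: depends only on the distance |x - y|."""
    return np.exp(-0.5 * ((x - y) / length_scale) ** 2)

def periodic(x, y, period=1.0, length_scale=1.0):
    """Stationary kernel that repeats whenever x - y changes by one period."""
    return np.exp(-2.0 * np.sin(np.pi * (x - y) / period) ** 2 / length_scale ** 2)

def linear(x, y):
    """Non-stationary: depends on the locations, not just their difference."""
    return x * y

shift = 3.7
# Shifting both inputs leaves a stationary kernel unchanged.
print(squared_exponential(0.2, 0.9), squared_exponential(0.2 + shift, 0.9 + shift))
# Moving one input by a whole number of periods leaves the periodic kernel unchanged.
print(periodic(0.2, 0.9), periodic(0.2, 0.9 + 2.0))
# The linear kernel changes under the same shift.
print(linear(0.2, 0.9), linear(0.2 + shift, 0.9 + shift))
```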

is to provide maximum a posteriori (MAP) estimates of it with some chosen prior.

[7] This approach is also known as maximum likelihood II, evidence maximization, or empirical Bayes.

[17]: 424 Sufficiency was announced by Xavier Fernique in 1964, but the first proof was published by Richard M. Dudley in 1967.

An example found by Marcus and Shepp [18]: 387 is a random lacunary Fourier series

Driscoll's zero-one law is a result characterizing the sample functions generated by a Gaussian process:

As such, almost all sample paths of a mean-zero Gaussian process with positive definite kernel

Consider, for example, the case where the output of the Gaussian process corresponds to a magnetic field. The real magnetic field is bound by Maxwell's equations, so a way to incorporate this constraint into the Gaussian process formalism would be desirable, as it would likely improve the accuracy of the algorithm.

A method for incorporating linear constraints into Gaussian processes already exists:[21] Consider the (vector-valued) output function

Hence, linear constraints can be encoded into the mean and covariance function of a Gaussian process.

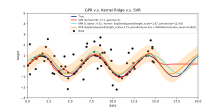

A Gaussian process can be used as a prior probability distribution over functions in Bayesian inference.

[7][23] Given any set of N points in the desired domain of the functions, take a multivariate Gaussian whose covariance matrix is the Gram matrix of the N points under some chosen kernel, and sample from that Gaussian.
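The sampling recipe just described can be sketched as follows. The squared-exponential kernel, the zero mean, the small diagonal `jitter` term added for numerical stability, and the function names are assumptions made for this example.

```python
import numpy as np

def gram_matrix(points, length_scale=1.0):
    """Gram matrix of a squared-exponential kernel on 1-D points."""
    diff = points[:, None] - points[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def sample_prior(points, num_samples, rng, jitter=1e-6):
    """Draw function values at `points` from a zero-mean Gaussian process prior."""
    cov = gram_matrix(points) + jitter * np.eye(len(points))  # jitter keeps the factorization stable
    chol = np.linalg.cholesky(cov)                            # cov = chol @ chol.T
    z = rng.standard_normal((len(points), num_samples))       # independent N(0, 1) draws
    return (chol @ z).T                                       # one sampled function per row

points = np.linspace(-3.0, 3.0, 40)
samples = sample_prior(points, num_samples=5, rng=np.random.default_rng(0))
```

Each row of `samples` holds the values of one random function at the 40 chosen points; plotting the rows against `points` shows typical draws from the prior.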

To solve the multi-output prediction problem, Gaussian process regression for vector-valued functions was developed.

In this method, a 'big' covariance is constructed, which describes the correlations between all the input and output variables taken at N points in the desired domain.

[26] Gaussian processes are thus useful as a powerful non-linear multivariate interpolation tool.
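As an illustration of interpolation with a Gaussian process, the sketch below computes the standard posterior mean and covariance of a zero-mean process conditioned on a few observations. The kernel, the small `noise_var` term, the toy data, and the function names are assumptions chosen for the example.

```python
import numpy as np

def kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel on all pairs of 1-D inputs."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_test, noise_var=1e-6):
    """Posterior mean and covariance of a zero-mean Gaussian process at x_test."""
    k_tt = kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_st = kernel(x_test, x_train)
    k_ss = kernel(x_test, x_test)
    chol = np.linalg.cholesky(k_tt)                                   # k_tt = chol @ chol.T
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))   # k_tt^{-1} y_train
    v = np.linalg.solve(chol, k_st.T)                                 # chol^{-1} k_st^T
    mean = k_st @ alpha
    cov = k_ss - v.T @ v
    return mean, cov

x_train = np.array([-2.0, -1.0, 0.0, 1.5])
y_train = np.sin(x_train)
x_test = np.linspace(-2.0, 2.0, 9)
mean, cov = gp_posterior(x_train, y_train, x_test)
```

With a very small noise variance, the posterior mean passes almost exactly through the training observations, which is the interpolation behaviour referred to above; the diagonal of `cov` gives the predictive variance at each test point.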

Kriging is also used to extend Gaussian processes to the case of mixed-integer inputs.

Gaussian processes can also be used in the context of mixture-of-experts models.

[28][29] The underlying rationale of such a learning framework is the assumption that a given mapping cannot be well captured by a single Gaussian process model.

In the natural sciences, Gaussian processes have found use as probabilistic models of astronomical time series and as predictors of molecular properties.

is just one sample from a multivariate Gaussian distribution of dimension equal to the number of observed coordinates

A known bottleneck in Gaussian process prediction is that the computational complexity of inference and likelihood evaluation is cubic in the number of points |x|, so exact computation can become infeasible for large data sets.

As layer width grows large, many Bayesian neural networks reduce to a Gaussian process with a closed-form compositional kernel.

[7][36][37] It allows predictions from Bayesian neural networks to be more efficiently evaluated, and provides an analytic tool to understand deep learning models.
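One well-known instance of such a compositional kernel arises for fully connected networks with ReLU activations, where each layer applies the arc-cosine kernel recursion to the kernel of the previous layer. The sketch below is an illustration under stated assumptions: the text above does not single out an architecture, so the choice of ReLU, the parameter names `sigma_w2` and `sigma_b2` (weight and bias variances), and the depth are all assumptions, and inputs are assumed to be non-zero vectors.

```python
import numpy as np

def relu_nngp_kernel(x1, x2, depth=2, sigma_w2=2.0, sigma_b2=0.0):
    """Infinite-width kernel of a fully connected ReLU network (arc-cosine recursion)."""
    d_in = x1.shape[1]
    # Kernel induced by the first affine layer.
    k12 = sigma_b2 + sigma_w2 * (x1 @ x2.T) / d_in
    k11 = sigma_b2 + sigma_w2 * np.sum(x1 * x1, axis=1) / d_in
    k22 = sigma_b2 + sigma_w2 * np.sum(x2 * x2, axis=1) / d_in
    for _ in range(depth):
        norm = np.sqrt(np.outer(k11, k22))
        cos_theta = np.clip(k12 / norm, -1.0, 1.0)   # clip guards against round-off
        theta = np.arccos(cos_theta)
        k12 = sigma_b2 + sigma_w2 / (2.0 * np.pi) * norm * (
            np.sin(theta) + (np.pi - theta) * cos_theta)
        # On the diagonal theta = 0, so the recursion simplifies.
        k11 = sigma_b2 + 0.5 * sigma_w2 * k11
        k22 = sigma_b2 + 0.5 * sigma_w2 * k22
    return k12

x = np.random.default_rng(0).standard_normal((4, 3))
nngp_gram = relu_nngp_kernel(x, x)
```

The resulting Gram matrix can be used like any other covariance matrix in Gaussian process prediction, which is what makes predictions from the corresponding infinitely wide network tractable.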

In practical applications, Gaussian process models are often evaluated on a grid, leading to multivariate normal distributions.

Using these models for prediction, or for parameter estimation by maximum likelihood, requires evaluating a multivariate Gaussian density, which involves calculating the determinant and the inverse of the covariance matrix.
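In practice, the determinant and the inverse are usually not formed explicitly; a common alternative is to compute a Cholesky factor once and reuse it for both quantities. The sketch below shows this for the log density. The function name `gaussian_log_density` and the toy covariance matrix are assumptions made for illustration.

```python
import numpy as np

def gaussian_log_density(y, mean, cov):
    """Log density of N(mean, cov) at y, computed through a Cholesky factor."""
    n = len(y)
    chol = np.linalg.cholesky(cov)                  # cubic-cost step; cov = chol @ chol.T
    resid = np.linalg.solve(chol, y - mean)         # chol^{-1} (y - mean)
    log_det = 2.0 * np.sum(np.log(np.diag(chol)))   # log|cov| from the factor's diagonal
    return -0.5 * (resid @ resid + log_det + n * np.log(2.0 * np.pi))

x = np.linspace(0.0, 1.0, 6)
cov = np.exp(-0.5 * ((x[:, None] - x[None, :]) / 0.3) ** 2) + 0.1 * np.eye(6)
y = np.sin(3.0 * x)
log_p = gaussian_log_density(y, np.zeros(6), cov)
```

The factorization costs a number of operations cubic in the number of points, which is the source of the scaling bottleneck for exact Gaussian process models. This sketch uses a general-purpose solver for simplicity; a dedicated triangular solver such as `scipy.linalg.solve_triangular` would exploit the structure of the factor.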