Gesture recognition

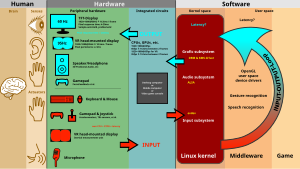

Gesture recognition offers a way for computers to better understand and interpret human body language, something not previously possible through text-based or unenhanced graphical user interfaces (GUIs).

Pen computing extends digital gesture recognition beyond traditional input devices such as keyboards and mice, and reduces the hardware impact of a system.[how?]

A touchless user interface (TUI) is an emerging type of technology wherein a device is controlled via body motion and gestures without touching a keyboard, mouse, or screen.

One type of touchless interface uses the Bluetooth connectivity of a smartphone to activate a company's visitor management system.

Examples of kinetic user interfaces (KUIs) include tangible user interfaces, motion-aware games such as those for Nintendo's Wii and Microsoft's Kinect, and other interactive projects.

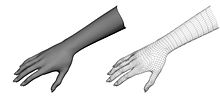

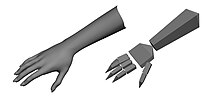

The foremost method uses 3D information about key elements of the body parts to obtain several important parameters, such as palm position or joint angles.
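As an illustration of how a parameter such as a joint angle can be derived from 3D information, the sketch below computes the angle at a joint (for example, the elbow) from three 3D keypoints. It assumes the keypoints are already available as (x, y, z) coordinates from some tracker; the function name `joint_angle` is hypothetical and not part of any particular library.

```python
import math
from typing import Sequence

Point3D = Sequence[float]  # (x, y, z) keypoint, assumed to come from a tracker


def joint_angle(a: Point3D, joint: Point3D, b: Point3D) -> float:
    """Return the angle in degrees at `joint` between segments joint->a and joint->b."""
    # Vectors from the joint to its two neighbouring keypoints
    u = [ai - ji for ai, ji in zip(a, joint)]
    v = [bi - ji for bi, ji in zip(b, joint)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm_u = math.sqrt(sum(ui * ui for ui in u))
    norm_v = math.sqrt(sum(vi * vi for vi in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("keypoints must not coincide with the joint")
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))
```

For a shoulder at (0, 0, 0), an elbow at (0.3, 0, 0) and a wrist at (0.3, 0.25, 0), `joint_angle(shoulder, elbow, wrist)` gives 90 degrees (up to floating-point rounding), while a fully extended arm gives 180 degrees.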

Approaches derived from it, such as volumetric models, have proven to be very computationally intensive and require further technological development before they can be implemented for real-time analysis.

For the moment, a more practical approach is to map simple primitive objects onto the person's most important body parts (for example, cylinders for the arms and neck and a sphere for the head) and analyze how these objects interact with each other.
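A minimal sketch of the primitive-object idea follows, assuming each limb is approximated by a capsule (a cylinder with rounded ends, chosen here because its distance test is simple) and the head by a sphere. An "interaction" is taken to mean that two primitives overlap, for example a hand touching the head. All class and function names are hypothetical and the coordinates are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Capsule:
    """A limb approximated as a cylinder with hemispherical caps."""
    start: Vec3
    end: Vec3
    radius: float


@dataclass
class Sphere:
    """A body part such as the head approximated as a sphere."""
    center: Vec3
    radius: float


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def point_segment_distance(p: Vec3, a: Vec3, b: Vec3) -> float:
    """Shortest distance from point p to the line segment a-b."""
    ab = _sub(b, a)
    ap = _sub(p, a)
    denom = _dot(ab, ab)
    # Project p onto the segment, clamping the parameter t to [0, 1]
    t = 0.0 if denom == 0 else max(0.0, min(1.0, _dot(ap, ab) / denom))
    closest = (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])
    d = _sub(p, closest)
    return math.sqrt(_dot(d, d))


def touches(limb: Capsule, part: Sphere) -> bool:
    """True if the limb primitive overlaps the sphere primitive."""
    gap = point_segment_distance(part.center, limb.start, limb.end)
    return gap <= limb.radius + part.radius
```

Reducing each body part to a few numbers (endpoints and radii) keeps the per-frame cost low compared with a full volumetric model, which is the trade-off the paragraph above describes.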

By classifying electromyography (EMG) signals recorded from the arm muscles, a system can identify the action performed and pass the corresponding gesture to external software as input.
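The classification step can be sketched as follows, under simple assumptions: each input is a short window of samples per EMG channel, each channel is summarized by its mean absolute value (MAV) and root mean square (RMS), and a nearest-centroid classifier assigns the window to a gesture. Real systems often use richer features and classifiers; the gesture labels and sample values below are hypothetical toy data, not measurements.

```python
import math
from typing import Dict, List, Sequence

Window = Sequence[Sequence[float]]  # one sequence of samples per EMG channel


def features(window: Window) -> List[float]:
    """Mean absolute value (MAV) and root mean square (RMS) for each channel."""
    feats: List[float] = []
    for channel in window:
        n = len(channel)
        feats.append(sum(abs(x) for x in channel) / n)
        feats.append(math.sqrt(sum(x * x for x in channel) / n))
    return feats


class NearestCentroid:
    """Assign a window to the gesture whose mean feature vector is closest."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, examples: Dict[str, List[Window]]) -> "NearestCentroid":
        for label, windows in examples.items():
            vecs = [features(w) for w in windows]
            # Average each feature column across the training windows
            self.centroids[label] = [sum(col) / len(vecs) for col in zip(*vecs)]
        return self

    def predict(self, window: Window) -> str:
        f = features(window)
        return min(self.centroids, key=lambda label: math.dist(f, self.centroids[label]))


# Hypothetical two-channel training data for two hypothetical gestures
clf = NearestCentroid().fit({
    "fist": [[[0.8, -0.9, 0.7, -0.8], [0.1, -0.1, 0.1, -0.1]]],
    "open": [[[0.1, -0.1, 0.1, -0.1], [0.8, -0.9, 0.7, -0.8]]],
})
print(clf.predict([[0.7, -0.8, 0.9, -0.7], [0.05, -0.1, 0.1, -0.05]]))  # fist
```

The predicted label is what would then be forwarded to external software as the recognized gesture.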

Because of this, EMG has an advantage over vision-based methods: the user does not need to face a camera to give input, which allows greater freedom of movement.

While gestures can facilitate fast and accurate input on many novel form-factor computers, their adoption and usefulness are often limited by social factors rather than technical ones.

Gesture interfaces on mobile and small form-factor devices are often supported by the presence of motion sensors such as inertial measurement units (IMUs).

Since these sensors respond to movement, subtle or low-motion gestures can be hard to capture: their signals may be difficult to distinguish from natural movements or noise.
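To make the difficulty concrete, the sketch below flags accelerometer windows as "active" when the variance of the acceleration magnitude exceeds a threshold. This simple energy-threshold detector is an illustrative assumption, not a method described in the text, and `active_windows` and its parameters are hypothetical. A threshold high enough to reject noise and small natural movements will also reject a genuinely subtle gesture.

```python
import math
from statistics import pvariance
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # accelerometer reading (x, y, z) in m/s^2


def active_windows(samples: Sequence[Sample], window: int, threshold: float) -> List[bool]:
    """Flag each non-overlapping window whose acceleration-magnitude variance exceeds threshold."""
    # Magnitude removes dependence on device orientation (gravity is ~9.81 at rest)
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    flags = []
    for start in range(0, len(mags) - window + 1, window):
        flags.append(pvariance(mags[start:start + window]) > threshold)
    return flags


still = [(0.0, 0.0, 9.81)] * 8                          # device at rest
wave = [(0.0, 0.0, 9.81), (5.0, 0.0, 9.81)] * 4         # vigorous movement
subtle = [(0.0, 0.0, 9.81), (0.5, 0.0, 9.81)] * 4       # small deliberate gesture
print(active_windows(still + wave + subtle, window=8, threshold=0.05))
```

With these illustrative values the detector reports `[False, True, False]`: the vigorous movement is caught, but the subtle gesture falls below the threshold just as sensor noise would.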

Wearable computers typically differ from traditional mobile devices in that they are used and interacted with on the user's body.

In these contexts, gesture interfaces may be preferred over traditional input methods, because the small size of wearable devices makes touch-screens or keyboards less appealing.

To measure the arm fatigue that gesture interaction can cause, researchers developed a technique called Consumed Endurance.
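Consumed Endurance is commonly described as the ratio of interaction time to the endurance time (how long the arm could hold that effort before exhaustion), expressed as a percentage, with endurance time estimated from the shoulder torque as a fraction of maximum torque. The sketch below assumes that formulation with a deliberately simplified single-segment arm model. The endurance model from the original work is not reproduced here; it is passed in as a caller-supplied function, and the one used in the example is a placeholder, not a published model.

```python
from typing import Callable


def shoulder_torque(arm_mass_kg: float, com_horizontal_dist_m: float, g: float = 9.81) -> float:
    """Gravitational torque (N*m) about the shoulder, treating the arm as one rigid segment."""
    return arm_mass_kg * g * com_horizontal_dist_m


def consumed_endurance(
    interaction_time_s: float,
    torque_nm: float,
    max_torque_nm: float,
    endurance_model: Callable[[float], float],
) -> float:
    """Consumed Endurance in percent: interaction time divided by endurance time.

    `endurance_model` maps the fraction of maximum torque to an endurance time
    in seconds; it is an assumption supplied by the caller.
    """
    if max_torque_nm <= 0:
        raise ValueError("max_torque_nm must be positive")
    strength_fraction = torque_nm / max_torque_nm
    endurance_time_s = endurance_model(strength_fraction)
    return 100.0 * interaction_time_s / endurance_time_s


# Placeholder endurance model for illustration only: higher effort, shorter endurance
placeholder_model = lambda fraction: 150.0 / fraction
torque = shoulder_torque(arm_mass_kg=3.0, com_horizontal_dist_m=0.25)
print(consumed_endurance(60.0, torque, max_torque_nm=50.0, endurance_model=placeholder_model))
```

Holding the arm further from the body raises the torque fraction, shortens the modeled endurance time, and therefore increases Consumed Endurance for the same interaction time.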