Hick's law

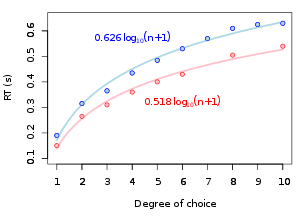

Hick's law, or the Hick–Hyman law, named after British and American psychologists William Edmund Hick and Ray Hyman, describes the time it takes for a person to make a decision as a function of the number of possible choices: increasing the number of choices will increase the decision time logarithmically.
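The logarithmic growth can be illustrated with a short sketch. It assumes the commonly cited form of the law for equally probable choices, T = b · log₂(n + 1), where the "+ 1" reflects the uncertainty about whether to respond at all. The function name `hick_decision_time` and the value b = 0.2 seconds per bit are hypothetical and chosen only for illustration; in practice b is fitted to measured data.

```python
import math


def hick_decision_time(n_choices: int, b: float = 0.2) -> float:
    """Estimate decision time in seconds for n equally probable choices.

    Assumes the form T = b * log2(n + 1). The default b (seconds per bit)
    is a made-up illustrative constant, not a measured value.
    """
    if n_choices < 1:
        raise ValueError("n_choices must be at least 1")
    return b * math.log2(n_choices + 1)


if __name__ == "__main__":
    # Each time n + 1 doubles, the estimate grows by the same fixed amount b.
    for n in (1, 3, 7, 15):
        print(n, round(hick_decision_time(n), 3))
```

Under these assumptions, going from 7 to 15 choices adds the same time as going from 1 to 3, which is what "logarithmically" means here.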

In 1885, J. Merkel discovered that the response time is longer when a stimulus belongs to a larger set of stimuli.

Although Hick notes that his experimental design, which used a 4-bit binary recording process, was capable of showing up to 15 positions and "all clear", in his experiment he required the device only to give an accurate record of the reaction time among 10 options after a stimulus.

Hyman was responsible for determining a linear relation between reaction time and the information transmitted.

E. Roth (1964) demonstrated a correlation between IQ and information processing speed, which is the reciprocal of the slope of the function relating reaction time to log₂(n), where n is the number of choices.[4]
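One way to read "the reciprocal of the slope" is to fit a straight line of reaction time against log₂(n) and invert the fitted slope. The sketch below does this with an ordinary least-squares fit. The data points are synthetic and invented purely for illustration, and the helper name `fit_line` is hypothetical; this is not Roth's procedure or data.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic (made-up) measurements: number of choices and reaction time in seconds.
choices = [2, 4, 8, 16]
reaction_times = [0.35, 0.50, 0.65, 0.80]

# Information per decision in bits, log2(n).
bits = [math.log2(n) for n in choices]

slope, intercept = fit_line(bits, reaction_times)  # slope is in seconds per bit
processing_speed = 1.0 / slope                     # bits per second

if __name__ == "__main__":
    print(f"slope = {slope:.3f} s/bit, speed = {processing_speed:.2f} bit/s")
```

A steeper slope means more time per bit, and therefore a lower processing speed.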

Studies suggest that the search for a word within a randomly ordered list, in which the reaction time increases linearly with the number of items, does not allow the law to be generalized, considering that, in other conditions, the reaction time may not be linearly associated with the logarithm of the number of elements, or may even show other variations of the basic plane.[7]

The generalization of Hick's law was also tested in studies on the predictability of transitions associated with the reaction time of elements that appeared in a structured sequence.[8][9]

This process was first described as being in accordance with Hick's law,[10] but more recently it was shown that the relationship between predictability and reaction time is sigmoid rather than linear, and is associated with different modes of action.

However, if the list is alphabetical and the user knows the name of the command, they may be able to use a subdividing strategy that works in logarithmic time.
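The contrast between scanning a list item by item and subdividing an alphabetical list can be sketched as a step count. The example below models the subdividing strategy as a binary search, which is an assumption about how such a strategy might work rather than a claim about how people actually search. The command names and both function names are hypothetical.

```python
def linear_scan_steps(items, target):
    """Count items inspected when reading the list from the top."""
    for steps, item in enumerate(items, start=1):
        if item == target:
            return steps
    return len(items)


def subdivide_steps(sorted_items, target):
    """Count items inspected when repeatedly halving a sorted list."""
    lo, hi = 0, len(sorted_items)
    steps = 0
    while lo < hi:
        mid = (lo + hi) // 2
        steps += 1
        if sorted_items[mid] == target:
            return steps
        if sorted_items[mid] < target:
            lo = mid + 1   # target lies in the upper half
        else:
            hi = mid       # target lies in the lower half
    return steps


# Hypothetical alphabetical menu of 1024 commands.
commands = sorted(f"cmd{i:04d}" for i in range(1024))

if __name__ == "__main__":
    target = commands[-1]
    print("linear scan:", linear_scan_steps(commands, target))
    print("subdividing:", subdivide_steps(commands, target))
```

For a list of 1024 items, the scan may inspect every item, while the halving strategy needs at most about log₂(1024) + 1 = 11 inspections.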

The bit is the unit of log₂(n).