History of hypertext

In 1934, the Belgian bibliographer Paul Otlet developed a blueprint for links that telescoped out from hypertext electrically, allowing readers to access documents, books, photographs, and so on, stored anywhere in the world.

Later, several scholars entered the scene who believed that humanity was drowning in information, causing foolish decisions and duplicating efforts among scientists.

Paul Otlet proposed a proto-hypertext concept based on his monographic principle, in which all documents would be decomposed into unique phrases stored on index cards.

But, like the manual index card model, these microfilm devices provided rapid retrieval based on pre-coded indices and classification schemes published as part of the microfilm record; they did not include the link model that distinguishes the modern concept of hypertext from content- or category-based information retrieval.

He described the device as an electromechanical desk linked to an extensive archive of microfilms, able to display books, writings, or any document from a library.

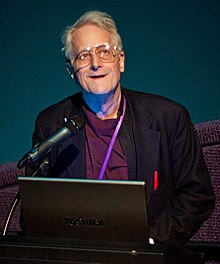

Starting in 1963, Ted Nelson developed a model for creating and using linked content he called "hypertext" and "hypermedia" (first published reference 1965).

[6] Ted Nelson said in the 1960s that he had begun implementing a hypertext system he had theorized, named Project Xanadu, but its first, incomplete public release was finished much later, in 1998.

[7] Douglas Engelbart independently began working on his NLS system in 1962 at Stanford Research Institute, although delays in obtaining funding, personnel, and equipment meant that its key features were not completed until 1968.

ZOG started in 1972 as an artificial intelligence research project under the supervision of Allen Newell, and pioneered the "frame" or "card" model of hypertext.

For example, in the late '70s Steve Feiner and others developed an ebook system for Navy repair manuals, and in the early '80s Norm Meyrowitz and a large team at Brown's Institute for Research in Information and Scholarship built Intermedia (mentioned above), which was heavily used in humanities and literary computing.

In '89, van Dam helped Lou Reynolds and van Dam's former students Steven DeRose and Jeff Vogel spin off Electronic Book Technologies, whose SGML-based hypertext system DynaText was widely used for large online publishing and e-book projects, such as online documentation for Sun, SGI, HP, Novell, and DEC, as well as aerospace, transport, publishing, and other applications.

Brown's Center For Digital Scholarship [1] (née Scholarly Technology Group) was heavily involved in related standards efforts such as the Text Encoding Initiative, Open eBook and XML, as well as enabling a wide variety of humanities hypertext projects.

It provides a single user-interface to large classes of information (reports, notes, data-bases, computer documentation and on-line help).

We propose a simple scheme incorporating servers already available at CERN... A program which provides access to the hypertext world we call a browser..." Tim Berners-Lee, R. Cailliau.