IBM alignment models

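The IBM alignment models are a series of increasingly complex statistical models of word alignment used in machine translation.[1][2] They underpinned the majority of statistical machine translation systems for almost twenty years, starting in the early 1990s, until neural machine translation began to dominate.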

These models offer a principled probabilistic formulation and (mostly) tractable inference.

Every author of the 1993 paper subsequently moved to the hedge fund Renaissance Technologies.[1]

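Given a foreign sentence $f$, each model defines $p(e, a \mid f)$, the probability that we would generate English language sentence $e$ together with an alignment $a$ between the two sentences.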

The meaning of an alignment grows increasingly complicated as the model version number grows.

For example, consider the following pair of sentences:

It will surely rain tomorrow -- 明日 は きっと 雨 だ

We can align some English words to corresponding Japanese words, but not every one:

it -> ?
will -> ?
surely -> きっと
rain -> 雨
tomorrow -> 明日

In general, this happens because different languages have different grammar and conventions of speech.

Japanese verbs do not have different forms for future and present tense, and the future tense is implied by the noun 明日 (tomorrow).

Later models allow one English word to be aligned with multiple foreign words.

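If a dictionary is not provided at the start, but we have a corpus of English-foreign sentence pairs (without alignment information), then the model can be cast as a latent-variable model: the foreign sentences are fixed, the English sentences are observed, the alignments are latent variables, and the dictionary entries $t(e \mid f)$, the probabilities of translating foreign word $f$ as English word $e$, are the parameters to be learned.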

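In this form, this is exactly the kind of problem solved by the expectation–maximization algorithm. Each iteration updates the dictionary as

$$t(e_x \mid f_y) \leftarrow \frac{1}{\lambda_y} \sum_{k} \sum_{i,j} \delta\big(e_x, e^{(k)}_i\big)\, \delta\big(f_y, f^{(k)}_j\big)\, \frac{t\big(e^{(k)}_i \mid f^{(k)}_j\big)}{\sum_{j'} t\big(e^{(k)}_i \mid f^{(k)}_{j'}\big)}$$

where $\delta$ is the Kronecker delta, $\lambda_y$ is the normalizing constant that makes $\sum_x t(e_x \mid f_y) = 1$, and

$k$ ranges over English-foreign sentence pairs in the corpus;
$i$ and $j$ range over word positions in the English and foreign sentences of pair $k$;
$x$ ranges over the entire vocabulary of English words in the corpus;
$y$ ranges over the entire vocabulary of foreign words in the corpus.

There are several limitations to IBM Model 1.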
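The EM training of the Model 1 dictionary described in this section can be sketched as follows. This is a minimal sketch under stated assumptions: the NULL word is omitted, `train_ibm1` and the three-sentence German-English corpus are hypothetical, and `t` is a plain dictionary keyed by `(english_word, foreign_word)`. Because nothing in the update depends on word positions, reversing every sentence leaves the learned table unchanged, which illustrates one limitation of Model 1: it ignores word order.

```python
from collections import defaultdict


def train_ibm1(corpus, iterations=10):
    """EM for IBM Model 1 without a NULL word (a simplifying assumption).

    corpus: list of (english_tokens, foreign_tokens) pairs.
    Returns t[(e, f)], an estimate of t(e | f).
    """
    e_vocab = {e for es, _ in corpus for e in es}
    # Uniform initialization of t(e | f).
    t = defaultdict(lambda: 1.0 / len(e_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(e, f)
        total = defaultdict(float)  # expected counts c(f), the normalizer per f
        for es, fs in corpus:
            for e in es:
                # E-step: posterior over which foreign position generated e.
                z = sum(t[(e, f)] for f in fs)
                for f in fs:
                    p = t[(e, f)] / z
                    count[(e, f)] += p
                    total[f] += p
        # M-step: renormalize so that sum_e t(e | f) = 1 for every f.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t


corpus = [("the house".split(), "das Haus".split()),
          ("the book".split(), "das Buch".split()),
          ("a book".split(), "ein Buch".split())]
t = train_ibm1(corpus)
print(round(t[("the", "das")], 3), round(t[("book", "Buch")], 3))

# Reversing word order in every sentence pair leaves the learned table unchanged.
reversed_corpus = [(es[::-1], fs[::-1]) for es, fs in corpus]
t_rev = train_ibm1(reversed_corpus)
print(all(abs(t[k] - t_rev[k]) < 1e-12 for k in t))
```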

Model 2 adds an explicit alignment model, in which the alignment of each word is conditional on its position and on the lengths of both sentences.[7]
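A minimal sketch of scoring one alignment under Model 2, assuming the common factorization $p(e, a \mid f) = \epsilon \prod_j t(e_j \mid f_{a(j)})\, a(a(j) \mid j, l_e, l_f)$. The function `model2_prob`, the table layouts, and the toy probabilities are hypothetical; `epsilon` stands in for the sentence-length probability.

```python
def model2_prob(e_words, f_words, alignment, t, a, epsilon=1.0):
    """p(e, a | f) under IBM Model 2 (sketch).

    alignment[j - 1] is the foreign position aligned to English position j,
    with 0 meaning NULL and foreign words at positions 1..l_f.
    t[(e, f)]: lexical translation table; a[(i, j, l_e, l_f)]: alignment table.
    """
    l_e, l_f = len(e_words), len(f_words)
    f_with_null = ["NULL"] + f_words
    p = epsilon
    for j, e in enumerate(e_words, start=1):
        i = alignment[j - 1]
        # Lexical translation times the position-dependent alignment probability.
        p *= t[(e, f_with_null[i])] * a[(i, j, l_e, l_f)]
    return p


# Hypothetical tables for a two-word sentence pair.
t = {("the", "das"): 0.7, ("house", "Haus"): 0.8}
a = {(1, 1, 2, 2): 0.6, (2, 2, 2, 2): 0.5}
print(model2_prob(["the", "house"], ["das", "Haus"], [1, 2], t, a))
```

If every entry of the alignment table is the uniform value $1/(l_f + 1)$, the score reduces to the Model 1 case.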

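In IBM Model 3, the fertility of a foreign word, the number of English words it generates, is modeled using a probability distribution $n(\phi \mid f)$: for each foreign word $f$, it gives the probability that $f$ translates into $\phi$ output words.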

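This model deals with dropping input words because it allows $\phi = 0$. Inserting words is still a problem, since some English words have no counterpart in the foreign sentence. To handle this, the model generates such words from a special NULL token whose fertility is also modeled using a conditional distribution. The number of inserted words depends on sentence length.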
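The dependence on sentence length can be sketched under the usual Model 3 assumption that, after each of the $m$ words produced by real foreign words, a NULL-generated word is inserted with probability $p_1$, so the NULL fertility $\phi_0$ is binomially distributed. The function names are hypothetical.

```python
from math import comb


def null_fertility_prob(phi0, m, p1):
    """Probability that NULL generates phi0 extra words, given that the real
    foreign words generated m words in total. Assumes that after each of the
    m words a NULL word is inserted with probability p1."""
    p0 = 1.0 - p1
    return comb(m, phi0) * p1 ** phi0 * p0 ** (m - phi0)


def expected_null_words(m, p1):
    """Mean of the distribution above; it grows linearly with m."""
    return sum(k * null_fertility_prob(k, m, p1) for k in range(m + 1))


for m in (5, 20):
    print(m, round(expected_null_words(m, 0.1), 3))
```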

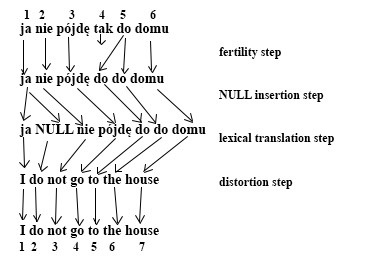

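Modeling NULL insertion as an additional step extends the IBM Model 3 translation process to four steps: fertility, NULL insertion, lexical translation, and distortion. The last step is called distortion instead of alignment because it is possible to produce the same translation with the same alignment in different ways. For example, the English words generated by a single foreign word can be produced in any order and then placed into the same output positions.[9] IBM Model 3 can be mathematically expressed as:

$$p(e, a \mid f) = \binom{l_e - \phi_0}{\phi_0}\, p_0^{\,l_e - 2\phi_0}\, p_1^{\,\phi_0} \prod_{i=1}^{l_f} \phi_i!\; n(\phi_i \mid f_i) \prod_{j=1}^{l_e} t\big(e_j \mid f_{a(j)}\big) \prod_{j:\, a(j) \neq 0} d\big(j \mid a(j), l_e, l_f\big)$$

where $\phi_i$ is the fertility of foreign word $f_i$, $\phi_0$ is the number of English words generated by the NULL token $f_0$, $p_1$ is the probability of inserting a NULL-generated word and $p_0 = 1 - p_1$, $t$, $n$, and $d$ are the translation, fertility, and distortion distributions, and $l_e$ and $l_f$ refer to the absolute lengths of the target and source sentences, respectively.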
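A minimal sketch that scores one alignment under IBM Model 3, using the usual factorization into NULL-insertion, fertility, lexical translation, and distortion terms. The function `model3_prob`, the dictionary layouts of `t`, `n`, and `d`, and the toy numbers are hypothetical.

```python
from math import comb, factorial


def model3_prob(e_words, f_words, alignment, t, n, d, p1):
    """p(e, a | f) under IBM Model 3 (sketch).

    alignment[j - 1] = foreign position (1..l_f, or 0 for NULL) of English word j.
    t[(e, f)]: lexical translation; n[(phi, f)]: fertility;
    d[(j, i, l_e, l_f)]: distortion. All tables are hypothetical dicts.
    """
    l_e, l_f = len(e_words), len(f_words)
    f_with_null = ["NULL"] + f_words
    # Fertility of each foreign position (index 0 is NULL), read off the alignment.
    phi = [0] * (l_f + 1)
    for i in alignment:
        phi[i] += 1
    phi0 = phi[0]
    p0 = 1.0 - p1
    # NULL insertion term.
    prob = comb(l_e - phi0, phi0) * p0 ** (l_e - 2 * phi0) * p1 ** phi0
    # Fertility term; phi_i! counts the orderings that yield the same alignment.
    for i in range(1, l_f + 1):
        prob *= factorial(phi[i]) * n[(phi[i], f_words[i - 1])]
    # Lexical translation for every word, distortion for non-NULL words.
    for j, e in enumerate(e_words, start=1):
        i = alignment[j - 1]
        prob *= t[(e, f_with_null[i])]
        if i != 0:
            prob *= d[(j, i, l_e, l_f)]
    return prob


t = {("the", "das"): 0.7, ("house", "Haus"): 0.8}
n = {(1, "das"): 0.9, (1, "Haus"): 0.8}
d = {(1, 1, 2, 2): 0.6, (2, 2, 2, 2): 0.5}
print(model3_prob(["the", "house"], ["das", "Haus"], [1, 2], t, n, d, p1=0.1))
```

When one foreign word generates two English words, the `factorial` factor doubles its contribution, reflecting the two orders in which those words could have been produced.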

In IBM Model 4, a cept is formed by aligning each input word $f_i$ to at least one output word.

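Both Model 3 and Model 4 ignore whether a position has already been chosen, and they also allow probability mass to be reserved for positions outside the sentence boundaries.[11] As a result, the probabilities of all valid alignments do not sum to one, which is why these models are called deficient.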
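The deficiency can be made concrete with a small sketch: if each word's output position is drawn independently, as Model 3 distortion does, some probability mass goes to outcomes where two words land in the same position. As a simplifying assumption, every word here shares one distortion distribution `d(j)`; the function `collision_mass` is hypothetical.

```python
from itertools import product


def collision_mass(l_e, d):
    """Probability mass that independent per-word position choices spend on
    placing two words in the same output position.
    d(j) is the probability of position j (1..l_e), shared by every word."""
    mass = 0.0
    for positions in product(range(1, l_e + 1), repeat=l_e):
        p = 1.0
        for j in positions:
            p *= d(j)
        if len(set(positions)) < l_e:  # some position is used twice
            mass += p
    return mass


for l_e in (2, 3, 4):
    print(l_e, round(collision_mass(l_e, lambda j, l=l_e: 1.0 / l), 4))
```

With a uniform distortion distribution, half of the mass is already wasted for two-word sentences, and the wasted share grows with sentence length.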

IBM Model 5 removes this deficiency by placing words only in free positions.

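It does so by tracking the number of free positions: if $v_j$ denotes the number of free positions in the output, the IBM Model 5 distortion probabilities are defined over these free positions rather than over absolute positions, with separate distributions for the initial word in a cept and for its additional words.[1]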
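Tracking free positions can be sketched with two hypothetical helpers, assuming $v_j$ counts the free output positions among $1..j$. Choosing a word's position by its free-slot index, instead of by absolute position, makes collisions impossible.

```python
def vacancies(l_e, occupied):
    """Return [v_1, ..., v_l_e], where v_j counts free positions among 1..j."""
    v, free = [], 0
    for j in range(1, l_e + 1):
        if j not in occupied:
            free += 1
        v.append(free)
    return v


def kth_free_position(l_e, occupied, k):
    """Output position of the k-th free slot (1-based): the first j with
    v_j == k, which is always a free position. None if fewer than k are free."""
    for j, v_j in enumerate(vacancies(l_e, occupied), start=1):
        if v_j == k:
            return j
    return None


occupied, placed = set(), []
for k in (2, 1, 2):  # hypothetical free-slot choices for three words
    j = kth_free_position(5, occupied, k)
    occupied.add(j)
    placed.append(j)
    print(k, "->", j, vacancies(5, occupied))
```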

The main idea of the HMM alignment model is to predict the distance between subsequent source language positions.

On the other hand, IBM Model 4 tries to predict the distance between subsequent target language positions.
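The two kinds of distance can be contrasted on a single alignment. This sketch assumes the alignment lists, for each English (target) position, its foreign (source) position, with 0 for NULL; NULL-aligned words are simply skipped, and the Model 4 offsets use the usual definition of a cept's center as the ceiling of the average of its output positions, with the center before the first cept taken as 0. Both function names are hypothetical.

```python
from math import ceil


def hmm_jumps(alignment):
    """Source-position jumps a(j) - a(j-1) between consecutive target words,
    the quantity the HMM alignment model predicts (NULL words skipped)."""
    src = [i for i in alignment if i != 0]
    return [b - a for a, b in zip(src, src[1:])]


def model4_head_offsets(alignment, l_f):
    """Target-position offset of the first word of each cept from the center
    of the previous cept, the quantity Model 4 predicts for cept heads."""
    offsets, prev_center = [], 0
    for i in range(1, l_f + 1):
        positions = [j for j, a in enumerate(alignment, start=1) if a == i]
        if not positions:
            continue  # fertility-zero words form no cept
        offsets.append(positions[0] - prev_center)
        prev_center = ceil(sum(positions) / len(positions))
    return offsets


alignment = [1, 3, 2]  # hypothetical: English word j comes from foreign word alignment[j-1]
print(hmm_jumps(alignment), model4_head_offsets(alignment, 3))
```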

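Because using both types of dependency was expected to improve alignment quality, the HMM and Model 4 were combined in a log-linear manner in Model 6 as follows:[13]

$$p_6(e, a \mid f) = \frac{p_4(e, a \mid f)^{\alpha} \cdot p_{\mathrm{HMM}}(e, a \mid f)}{\sum_{a', e'} p_4(e', a' \mid f)^{\alpha} \cdot p_{\mathrm{HMM}}(e', a' \mid f)}$$

where the interpolation parameter $\alpha$ weights Model 4 relative to the HMM. A log-linear combination is used instead of a linear one because the $p(e, a \mid f)$ values are typically of different orders of magnitude for the HMM and IBM Model 4.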
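The log-linear combination can be sketched by working with log-probabilities, which keeps scores of very different magnitudes numerically stable. As a simplifying assumption, the normalizer here sums over a finite list of candidates rather than over all $(e', a')$; the function `model6_posteriors` and the toy values are hypothetical.

```python
import math


def model6_posteriors(log_p4, log_hmm, alpha):
    """Combine Model 4 and HMM log-probabilities log-linearly and normalize.

    Each list holds log p(e, a | f) for the same candidates under one model.
    Normalizing over this finite list is a simplifying assumption.
    """
    scores = [alpha * lp4 + lh for lp4, lh in zip(log_p4, log_hmm)]
    top = max(scores)  # subtract the max before exponentiating for stability
    weights = [math.exp(s - top) for s in scores]
    z = sum(weights)
    return [w / z for w in weights]


# Hypothetical values: Model 4 probabilities are many orders of magnitude
# smaller than the HMM ones, which causes no trouble in log space.
log_p4 = [math.log(1e-30), math.log(3e-30)]
log_hmm = [math.log(0.02), math.log(0.01)]
print(model6_posteriors(log_p4, log_hmm, alpha=1.0))
print(model6_posteriors(log_p4, log_hmm, alpha=0.0))  # HMM alone
```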