Cache prefetching

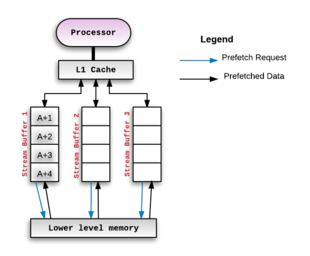

Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory to a faster local memory before they are actually needed (hence the term 'prefetch').[1][2]

Most modern computer processors have fast, local cache memory in which prefetched data is held until it is required.[3]

Stride prefetching monitors the delta (stride) between the addresses of successive memory accesses and looks for patterns within it.

In the simplest pattern, regular strides, consecutive memory accesses are made to blocks that are a constant number of addresses apart.
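A minimal sketch of regular-stride detection, written in Python for illustration. The function name `predict_regular_stride` and its `degree` parameter (how many addresses ahead to prefetch) are hypothetical. Trusting a stride only after the same non-zero delta has been seen twice in a row is an assumed heuristic, not a description of any particular processor.

```python
from typing import List


def predict_regular_stride(history: List[int], degree: int = 2) -> List[int]:
    """Return up to `degree` addresses to prefetch, or [] if no constant stride is seen.

    Hypothetical heuristic: the stride is trusted only when the last two
    deltas in `history` are equal and non-zero.
    """
    if len(history) < 3:
        return []  # not enough accesses to confirm a stride
    d1 = history[-2] - history[-3]
    d2 = history[-1] - history[-2]
    if d1 != d2 or d2 == 0:
        return []  # stride not confirmed, so issue no prefetches
    last = history[-1]
    # Extrapolate the constant stride `degree` steps beyond the last access.
    return [last + d2 * n for n in range(1, degree + 1)]


print(predict_regular_stride([0x100, 0x140, 0x180]))
```

For accesses at 0x100, 0x140 and 0x180 the stride is 0x40, so the sketch would prefetch 0x1C0 and 0x200.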

With irregular strides, the delta between the addresses of consecutive memory accesses varies but still follows a pattern.
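One simple way to capture a variable but repeating delta sequence, sketched under assumptions: the hypothetical helper below looks for the shortest period p such that the last 2p deltas repeat, then replays that cycle of deltas to predict upcoming addresses. The names `find_delta_period` and `predict_patterned_stride` and the `max_period` limit are illustrative; real hardware prefetchers use their own, generally more elaborate, detection logic.

```python
from typing import List


def find_delta_period(deltas: List[int], max_period: int = 4) -> int:
    """Smallest p such that the last 2*p deltas repeat with period p; 0 if none."""
    for p in range(1, max_period + 1):
        if len(deltas) < 2 * p:
            break  # too little history to confirm this (or any longer) period
        tail = deltas[-2 * p:]
        if tail[:p] == tail[p:]:
            return p
    return 0


def predict_patterned_stride(history: List[int], degree: int = 3,
                             max_period: int = 4) -> List[int]:
    """Predict the next `degree` addresses by replaying a repeating delta cycle."""
    deltas = [b - a for a, b in zip(history, history[1:])]
    p = find_delta_period(deltas, max_period)
    if p == 0:
        return []  # no repeating delta pattern found
    cycle = deltas[-p:]  # by periodicity, the next delta equals cycle[0]
    predictions = []
    addr = history[-1]
    for n in range(degree):
        addr += cycle[n % p]
        predictions.append(addr)
    return predictions


print(predict_patterned_stride([0, 8, 24, 32, 48]))
```

For accesses 0, 8, 24, 32, 48 the deltas alternate 8, 16, so the sketch predicts 56, 72 and 80. A constant stride is simply the special case p = 1.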

Another class of prefetchers looks for memory access streams that repeat over time.[17][18]

Computer applications generate a variety of access patterns.

Hence, the effectiveness and efficiency of prefetching schemes often depend on the application and the architectures used to execute them.
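To illustrate why effectiveness depends on the access pattern, the sketch below models a very simple prefetcher for repeating streams (a design assumed for illustration): a table remembers, for each address, the address that followed it last time, and on a repeat access the remembered successor is prefetched. The function name `temporal_prefetch_coverage` is hypothetical; it reports coverage, the fraction of transitions that were correctly predicted.

```python
from typing import Dict, List, Optional


def temporal_prefetch_coverage(trace: List[int]) -> float:
    """Fraction of transitions in `trace` that a successor table predicted correctly."""
    successor: Dict[int, int] = {}  # address -> address that followed it last time
    hits = 0
    prev: Optional[int] = None
    for addr in trace:
        if prev is not None:
            # On the access to `prev`, the prefetcher would have fetched successor[prev].
            if successor.get(prev) == addr:
                hits += 1
            successor[prev] = addr  # remember (or update) the observed successor
        prev = addr
    transitions = len(trace) - 1
    return hits / transitions if transitions > 0 else 0.0


repeating = [3, 17, 42, 8, 99] * 4        # the same five-address stream, four times
strided = list(range(0, 64 * 20, 64))     # a sweep that never revisits an address
print(temporal_prefetch_coverage(repeating))
print(temporal_prefetch_coverage(strided))
```

On the repeating stream, the table learns every transition during the first pass and then predicts 14 of the 19 transitions. On the strided sweep it predicts nothing, even though a stride prefetcher would handle that sweep easily.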

Compiler-directed prefetching is widely used within loops with a large number of iterations.

In this technique, the compiler predicts future cache misses and inserts a prefetch instruction based on the miss penalty and execution time of the instructions.

One main advantage of software prefetching is that it reduces the number of compulsory cache misses.[3]

The following example shows how a prefetch instruction would be added into code to improve cache performance.

Consider a for loop in which, at each iteration, the ith element of the array "array1" is accessed.

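Because these accesses are predictable, a prefetch instruction can be inserted into the loop body so that each iteration prefetches an element that will be needed a fixed number of iterations later. How far ahead to prefetch depends on two factors: the cache miss penalty and the time it takes to execute a single iteration of the for loop.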
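The two factors can be combined into a simple rule of thumb: prefetch far enough ahead that the miss penalty is covered by the iterations executed in the meantime, i.e. a distance of ⌈miss penalty / cycles per iteration⌉ iterations. The sketch below assumes both quantities are known as whole cycle counts; the function name `prefetch_distance` and the example numbers are hypothetical.

```python
def prefetch_distance(miss_penalty_cycles: int, cycles_per_iteration: int) -> int:
    """Iterations ahead to prefetch so that the miss penalty is fully hidden."""
    if cycles_per_iteration <= 0:
        raise ValueError("cycles_per_iteration must be positive")
    if miss_penalty_cycles < 0:
        raise ValueError("miss_penalty_cycles must be non-negative")
    # Integer ceiling division: round up so the data arrives no later than it is used.
    return -(-miss_penalty_cycles // cycles_per_iteration)


print(prefetch_distance(49, 7))
print(prefetch_distance(50, 7))
```

With a hypothetical 49-cycle miss penalty and 7 cycles per iteration the distance is 7 iterations; a 50-cycle penalty rounds up to 8, since prefetching too few iterations ahead would leave part of the penalty exposed.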

If each iteration prefetches the element 7 iterations ahead, the first 7 accesses (i = 0 to 6) will still be misses (under the simplifying assumption that each element of array1 is in a separate cache line of its own).
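The effect of the prefetch instruction can be reproduced with a small model of the loop. It is only a sketch: a Python set stands in for the cache, each element is assumed to occupy its own cache line (as in the text), and every prefetch is assumed to arrive before its element is used. `run_loop` and its `distance` parameter are hypothetical names; `distance=None` models the original loop without a prefetch instruction.

```python
from typing import List, Optional, Set


def run_loop(n: int, distance: Optional[int]) -> List[int]:
    """Model of: for i in 0..n-1: [prefetch array1[i + distance];] array1[i] = 2 * array1[i]

    Returns the indices whose demand access missed in the modeled cache.
    """
    array1 = list(range(n))
    cache: Set[int] = set()  # indices whose (separate) cache line is resident
    missed: List[int] = []
    for i in range(n):
        if distance is not None and i + distance < n:
            cache.add(i + distance)  # modeled prefetch: line brought in early
        if i not in cache:
            missed.append(i)  # demand miss: the line is fetched on use
            cache.add(i)
        array1[i] = 2 * array1[i]
    return missed


print(run_loop(1024, 7))
print(len(run_loop(1024, None)))
```

For a hypothetical 1024-iteration loop, the prefetching version misses only on i = 0 to 6, while the unmodified loop misses on every access under the same assumptions. Because those 7 misses do not grow with the iteration count, the relative benefit is largest for long-running loops.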

Qualitatively, timeliness describes how early a block is prefetched relative to when it is actually referenced.
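A hedged sketch of how the timeliness of a single prefetch might be classified from cycle timestamps. The function name `classify_prefetch` and the three labels are assumptions for illustration: a prefetch is treated as late if its data arrives after the reference, early if the block is evicted again before the reference, and timely otherwise.

```python
from typing import Optional


def classify_prefetch(issue_cycle: int, latency: int, use_cycle: int,
                      evict_cycle: Optional[int] = None) -> str:
    """Classify one prefetch as 'late', 'early' or 'timely' (illustrative definitions)."""
    arrival = issue_cycle + latency
    if arrival > use_cycle:
        return "late"    # the reference happened before the data arrived
    if evict_cycle is not None and evict_cycle < use_cycle:
        return "early"   # the block was evicted before it was referenced
    return "timely"      # the block arrived in time and was still resident


print(classify_prefetch(0, 49, 60))
print(classify_prefetch(0, 49, 40))
print(classify_prefetch(0, 49, 100, evict_cycle=80))
```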