Reproducibility

Reproducibility serves several critical purposes in science:

Verification of Results – Confirms that findings are not due to random chance or errors.

Building Trust in Research – Scientists, policymakers, and the public rely on reproducible studies to make informed decisions.

Publication Bias – Journals tend to publish positive findings rather than null or negative results, leading to an incomplete scientific record.

Complex Experimental Conditions – In some cases, small variations in laboratory settings, equipment, or researcher expertise can affect outcomes, making exact replication difficult.

Medical Research – Reproducibility ensures that clinical trials and drug effectiveness studies produce reliable results before treatments reach the public.

Pharmaceutical Development – Drug discovery relies on reproducing experiments across multiple labs to ensure safety and efficacy.
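Verification of Results and Publication Bias interact: when mainly "positive" results are published, some published findings will be chance results that an independent replication is unlikely to confirm. The Python sketch below is a hypothetical illustration, not taken from any study cited here. It assumes that every simulated study tests an effect that does not exist, draws 30 standard-normal observations, and counts as "significant" when a two-sided z statistic exceeds 1.96 (roughly p < 0.05). Under those assumptions, about 5% of studies should cross the threshold by chance, and only a small fraction of those should cross it again, in the same direction, when replicated.

```python
import math
import random


def simulate_chance_findings(num_studies=4000, n=30, z_crit=1.96, seed=0):
    """Simulate studies of a nonexistent effect, then replicate the 'hits'.

    Assumptions (illustrative only): each study draws n observations from a
    standard normal distribution (true mean 0), and a result is 'significant'
    when the two-sided z statistic exceeds z_crit (about p < 0.05).
    """
    rng = random.Random(seed)  # fixed seed so the simulation itself is reproducible

    def run_study():
        # z statistic of the sample mean, with known standard deviation 1
        mean = sum(rng.gauss(0.0, 1.0) for _ in range(n)) / n
        return mean * math.sqrt(n)

    # "Published" studies: only those that cross the threshold by chance
    significant = [z for z in (run_study() for _ in range(num_studies)) if abs(z) > z_crit]

    # Replicate each significant study once; it counts as replicated only if
    # the new study is also significant and points in the same direction.
    replicated = 0
    for z in significant:
        z_rep = run_study()
        if abs(z_rep) > z_crit and (z_rep > 0) == (z > 0):
            replicated += 1

    false_positive_rate = len(significant) / num_studies
    replication_rate = replicated / len(significant) if significant else 0.0
    return false_positive_rate, replication_rate


fp_rate, rep_rate = simulate_chance_findings()
print(f"share of null studies that look significant: {fp_rate:.3f}")
print(f"share of those that replicate: {rep_rate:.3f}")
```

Changing the seed alters the exact rates but not the overall pattern.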

To enhance reproducibility, researchers and institutions can adopt several best practices:

Open Data and Code – Making datasets and computational methods publicly available ensures that others can verify results.

Historians of science Steven Shapin and Simon Schaffer, in their 1985 book Leviathan and the Air-Pump, describe the debate between Boyle and Hobbes, ostensibly over the nature of the vacuum, as fundamentally an argument about how useful knowledge should be gained.

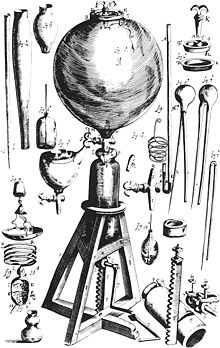

The air pump, which in the 17th century was a complicated and expensive apparatus to build, also led to one of the first documented disputes over the reproducibility of a particular scientific phenomenon.

In the 1660s, the Dutch scientist Christiaan Huygens built his own air pump in Amsterdam, the first one outside the direct management of Boyle and his then-assistant, Robert Hooke.

However, as noted above by Shapin and Schaffer, this dogma is not formulated quantitatively (for instance, in terms of statistical significance), and therefore it is not explicitly established how many times a fact must be replicated to be considered reproducible.

Replicability and repeatability are related terms that are broadly or loosely synonymous with reproducibility (for example, among the general public), but they are often usefully distinguished in more precise senses.

This requires a detailed description of the methods used to obtain the data[9][10] and easy access to the full dataset and to the code used to calculate the results.
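As a minimal sketch of what accessible analysis code can look like, the Python function below makes every input that affects its result explicit: the data, the number of resamples, the significance level, and the random seed. The analysis itself (a percentile bootstrap confidence interval for a mean) and the measurements are arbitrary, hypothetical stand-ins chosen for illustration. The point is that anyone rerunning the same code on the same data, with the same Python version, obtains exactly the same numbers.

```python
import random
import statistics


def bootstrap_mean_ci(data, num_resamples=1000, alpha=0.05, seed=42):
    """Percentile bootstrap confidence interval for the mean.

    Every input that affects the result (data, number of resamples,
    significance level, random seed) is an explicit argument, so a reader
    with the same data and code obtains exactly the same interval.
    """
    rng = random.Random(seed)  # local generator: no hidden global state
    means = sorted(
        statistics.fmean(rng.choices(data, k=len(data)))
        for _ in range(num_resamples)
    )
    lower = means[int((alpha / 2) * num_resamples)]
    upper = means[int((1 - alpha / 2) * num_resamples) - 1]
    return lower, upper


# Hypothetical measurements standing in for a published dataset
measurements = [4.1, 3.9, 4.4, 4.0, 4.3, 3.8, 4.2, 4.5, 3.7, 4.1]
print(bootstrap_mean_ci(measurements))
print(bootstrap_mean_ci(measurements) == bootstrap_mean_ci(measurements))  # True
```

Using a local `random.Random` instance rather than the module-level generator keeps the result independent of any other code that draws random numbers.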

To make a research project computationally reproducible, general practice is to clearly separate, label, and document all data and files.
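One lightweight way to keep data files separated, labelled, and documented is a manifest that lists each file with a description and a checksum. The Python sketch below assumes a hypothetical layout in which raw inputs live under `raw/` and derived outputs under `derived/`. It records a SHA-256 hash for every file so that later modifications can be detected, and it flags files that lack a description. The function names and manifest fields are illustrative choices, not an established standard.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def build_manifest(data_dir, descriptions):
    """List every file under data_dir with its size, SHA-256 hash and description.

    `descriptions` maps relative paths to short labels; files without a label
    are marked "UNDOCUMENTED" so that the gap is visible.
    """
    root = Path(data_dir)
    entries = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        rel = path.relative_to(root).as_posix()
        entries.append({
            "path": rel,
            "bytes": path.stat().st_size,
            "sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
            "description": descriptions.get(rel, "UNDOCUMENTED"),
        })
    return entries


def verify_manifest(data_dir, entries):
    """Return the manifest paths that are missing or whose contents have changed."""
    root = Path(data_dir)
    changed = []
    for entry in entries:
        path = root / entry["path"]
        if not path.is_file() or hashlib.sha256(path.read_bytes()).hexdigest() != entry["sha256"]:
            changed.append(entry["path"])
    return changed


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    # Hypothetical layout: raw inputs kept apart from derived outputs
    (root / "raw").mkdir()
    (root / "derived").mkdir()
    (root / "raw" / "measurements.csv").write_text("id,value\n1,4.1\n2,3.9\n")
    (root / "derived" / "summary.csv").write_text("mean\n4.0\n")

    manifest = build_manifest(tmp, {"raw/measurements.csv": "instrument readings, unprocessed"})
    print(json.dumps(manifest, indent=2))

    # Editing a listed file after the fact is detected
    (root / "raw" / "measurements.csv").write_text("id,value\n1,4.1\n2,9.9\n")
    changed = verify_manifest(tmp, manifest)
    print(changed)  # ['raw/measurements.csv']
```

Publishing such a manifest alongside the data lets others confirm that they are working with byte-identical files before attempting to reproduce a result.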

Version control should be used, since it makes the project's history easy to review and tracks every change in a transparent, documented way.
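Part of what makes a version-controlled history trustworthy is that, in systems such as Git, each commit is identified by a hash that covers a snapshot of its contents and the identifier of its parent commit, so an earlier entry cannot be altered without changing every identifier after it. The Python toy model below illustrates that idea only. It is a deliberate simplification that does not follow Git's object format, and the commit messages and snapshots are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Commit:
    """One entry in a toy change history (a simplified model, not Git's format)."""
    message: str
    snapshot: str            # full text of the tracked file at this point
    parent: Optional[str]    # id of the previous commit; None for the first one
    commit_id: str


def _hash(parent: Optional[str], message: str, snapshot: str) -> str:
    # The id covers the parent id, so it depends on the entire earlier history
    return hashlib.sha256(f"{parent}\n{message}\n{snapshot}".encode()).hexdigest()


def commit(history: List[Commit], message: str, snapshot: str) -> str:
    """Append a new commit to history and return its id."""
    parent = history[-1].commit_id if history else None
    commit_id = _hash(parent, message, snapshot)
    history.append(Commit(message, snapshot, parent, commit_id))
    return commit_id


def history_is_intact(history: List[Commit]) -> bool:
    """Recompute every id; altering any past entry breaks the chain."""
    parent = None
    for c in history:
        if c.parent != parent or c.commit_id != _hash(parent, c.message, c.snapshot):
            return False
        parent = c.commit_id
    return True


history: List[Commit] = []
commit(history, "Add analysis plan", "mean of column 'value'")
commit(history, "Exclude outliers", "mean of column 'value', excluding values above 3 SD")
for c in history:
    print(c.commit_id[:8], c.message)
print(history_is_intact(history))  # True

# Quietly rewriting an earlier step, while keeping its old id, is detected
first = history[0]
history[0] = Commit(first.message, "median of column 'value'", first.parent, first.commit_id)
print(history_is_intact(history))  # False
```

Because each identifier depends on everything before it, reviewers can check that the history they are reading is the one that was actually recorded.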

Researchers showed in a 2006 study that, of 141 authors of empirical articles published in American Psychological Association (APA) journals, 103 (73%) did not share their data over a six-month period.

Economic research is often not reproducible, because only some journals have adequate disclosure policies for datasets and program code, and even where such policies exist, authors frequently do not comply with them or publishers do not enforce them.

[27] A 2018 study published in the journal PLOS ONE found that 14.4% of a sample of public health statistics researchers had shared their data or code or both.

[31] In March 1989, University of Utah chemists Stanley Pons and Martin Fleischmann reported the production of excess heat that could only be explained by a nuclear process ("cold fusion").